This article was written by Amine Amri

Story

This plug-in is based on TensorFlow examples for android (tensorflow/tensorflow/examples/android at master · tensorflow/tensorflow · GitHub)

It uses 2 neural networks for :

-

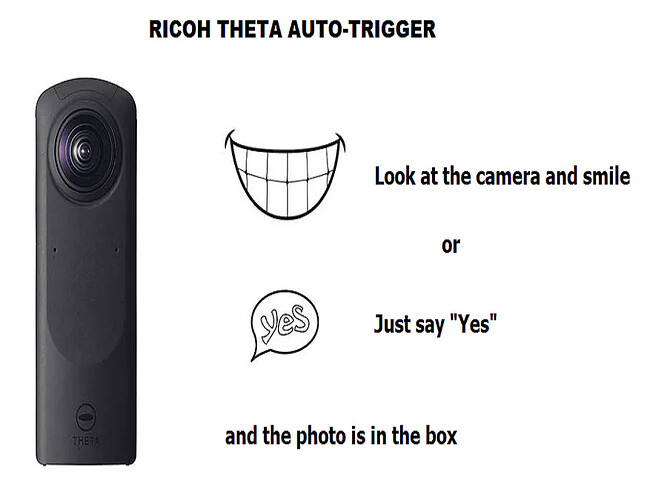

voice recognition : a basic speech recognition network will recognize the “yes” command (see https://www.tensorflow.org/tutorials/sequences/audio_recognition

for explanations on model training and how recognition works) -

smile detection : for smile detection, I used YOLO algorithm.

YOLO (You Only Look Once), is a network for object detection. The object detection task consists in determining the location on the image where certain objects are present, as well as classifying those objects. Previous methods for this, like R-CNN (Regional Convolutional Neural Network) and its variations, used a pipeline to perform this task in multiple steps. This can be slow to run and also hard to optimize, because each individual component must be trained separately. YOLO, does it all with a single neural network. So, to put it simple, you take an image as input, pass it through a neural network that looks similar to a normal CNN, and you get a vector of bounding boxes and class predictions in the output.

Training Yolo?

For training Yolo, I’ve used the darknet implementation. Darknet is an open source neural network framework written in C and CUDA. It is fast, easy to install, and supports CPU and GPU computation

As a training DataSet, I used the FDDB-360 Dataset.

FDDB-360 is a dataset derived from Face Detection Dataset and Benchmark (FDDB, http://viswww.cs.umass.edu/fddb/). It contains fisheye-looking images created from FDDB images, and is intended to help train models for face detection in 360° fisheye images.

FDDB-360 contains 17,052 fisheye-looking images and a total of 26,640 annotated faces.

The dataset is available from Ivan V. Bajić (J. Fu, S. R. Alvar, I. V. Bajić, and R. G. Vaughan,“FDDB-360: Face detection in 360-degree fisheye images,” Proc. IEEE MIPR’19 ,San Jose, CA, Mar. 2019).

Training was performed on a GPU (https://www.nvidia.com/fr-fr/geforce/products/10series/geforce-gtx-1080-ti/) and lasted about 50 hours.