Hello.

I would like to ask about the official stitching algorithm.

If anyone has good information about it, please teach us!

I used a Theta Z1 (R02022).

I tried a “180-degree panning check” as follows:

1. Shoot one photo (RAW + JPEG mode).

2. Pan 180 degrees.

3. Shoot one photo (RAW + JPEG mode).

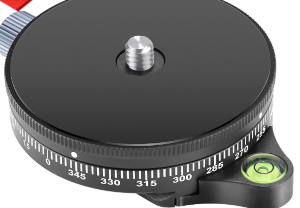

For panning, I used the following tool (a panorama pan base):

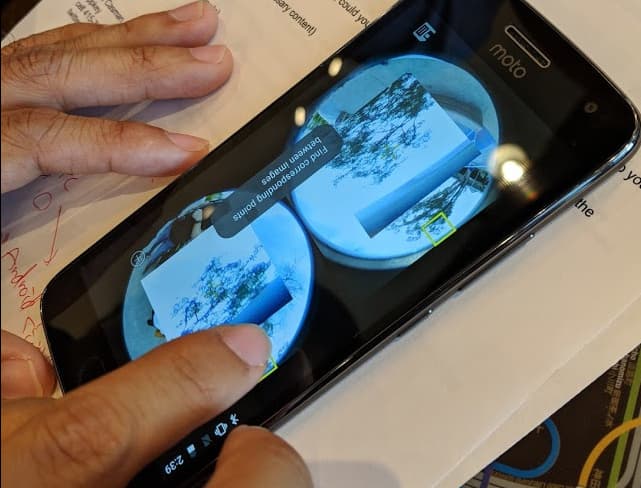

After that, I compared the fisheye images generated from the RAW (DNG) data of shots No.1 and No.3, taking the fisheye images that face the same direction.

The result is as follows.

Please compare the images with a tool such as “WinMerge”.

I could see some small differences (lens distortion?) between the two fisheye cameras, but I think the camera direction is almost the same.
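If you want a number in addition to the visual check in WinMerge, the offset between two such fisheye images could be estimated with phase correlation. Below is a minimal NumPy sketch, assuming both images have already been decoded from the DNG files into same-size grayscale arrays (the decoding step is not shown, and the function name is just for illustration). It only measures a whole-pixel translation, so it is a rough indicator of a direction difference, not a model of the fisheye lens geometry.

```python
import numpy as np


def phase_correlation_shift(a: np.ndarray, b: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) such that b is approximately np.roll(a, (dy, dx), axis=(0, 1))."""
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    fa = np.fft.fft2(a.astype(np.float64))
    fb = np.fft.fft2(b.astype(np.float64))
    # Normalized cross-power spectrum: keeps only the phase difference.
    cross = fb * np.conj(fa)
    cross /= np.abs(cross) + 1e-12
    # Its inverse FFT peaks at the translation between the two images.
    corr = np.fft.ifft2(cross).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = a.shape
    # Map peak positions in the upper half of each axis to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)
```

A result of (0, 0) or a pixel or two would be consistent with the pan base returning the camera to almost the same direction.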

From this result, it seems that the “panorama pan base” pans the Theta Z1 fairly accurately.

After that, I generated equirectangular images using the RICOH THETA Stitcher Windows application.

The top/bottom correction setting was set to manual, with Pitch and Roll set to zero.

For Yaw, I used 0 deg for shot No.1 and 180 deg for shot No.3.
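With Pitch and Roll fixed at zero, a Yaw change should, as far as I understand, be a pure rotation about the vertical axis, which in an equirectangular image is just a circular shift of the columns (360 deg = full width). So one way to take the Stitcher's Yaw handling out of the comparison could be to stitch both shots with Yaw 0 and shift shot No.3 afterwards. A minimal NumPy sketch follows; the function names are hypothetical, and the shift direction (sign of yaw) is an assumption, which does not matter for 180 deg.

```python
import numpy as np


def yaw_to_column_shift(width: int, yaw_deg: float) -> int:
    """Number of columns corresponding to a yaw angle in an equirectangular image."""
    # 360 degrees of yaw span the full image width.
    return int(round(width * yaw_deg / 360.0)) % width


def apply_yaw(equi: np.ndarray, yaw_deg: float) -> np.ndarray:
    """Rotate an equirectangular image about the vertical axis by a circular column shift.

    Assumption: positive yaw moves image content to the left; flip the sign if your
    convention differs (for 180 degrees both directions give the same result).
    """
    shift = yaw_to_column_shift(equi.shape[1], yaw_deg)
    # Rows are untouched, so this step cannot introduce any vertical (pitch) offset.
    return np.roll(equi, -shift, axis=1)
```

Any vertical offset that remains after such a shift cannot come from the yaw step itself.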

The result is as follows.

Please compare the images with a tool such as “WinMerge”.

I think that in the equirectangular comparison, the difference in the Pitch direction was much bigger than in the fisheye comparison.

I’m not sure why…

Does the RICOH THETA Stitcher app add some distortion that the user does not know about?

If anyone knows the reason, please let me know…
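To put the Pitch-direction difference into degrees: in an equirectangular image the full height spans 180 deg of latitude, so a vertical offset of dy rows corresponds to dy × 180 / H degrees. Also, a pure pitch rotation does not shift the whole image uniformly; on the horizon it moves the front (longitude 0) and the back (longitude 180) in opposite vertical directions and leaves the ±90 deg columns at the same height. Measuring the vertical offset at several columns may therefore help to tell a camera pitch from some other warp. A small sketch, under an assumed axis convention (x forward, y left, z up) where the pitch sign is also just a convention; the function names are hypothetical.

```python
import math


def rows_to_degrees(dy_rows: float, height: int) -> float:
    """Vertical offset in an equirectangular image -> latitude difference in degrees."""
    # The full image height covers 180 degrees of latitude.
    return dy_rows * 180.0 / height


def horizon_latitude_after_pitch(lon_deg: float, pitch_deg: float) -> float:
    """Latitude (deg) of a horizon point at longitude lon_deg after rotating the sphere by pitch_deg.

    Assumed axes: x forward, y left, z up; pitch is a rotation about the y axis.
    """
    lon = math.radians(lon_deg)
    p = math.radians(pitch_deg)
    # Horizon point (latitude 0): x = cos(lon), z = 0. Its y component is not needed here.
    x, z = math.cos(lon), 0.0
    # Rotation about the y axis gives z' = -x * sin(p) + z * cos(p).
    z_rot = -x * math.sin(p) + z * math.cos(p)
    return math.degrees(math.asin(z_rot))
```

For example, with a 1 deg pitch this gives about -1 deg at longitude 0, +1 deg at longitude 180, and about 0 deg at longitude 90.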

I tried the same test in movie mode too.

The behavior looks very similar to the above.

In fact, I am trying to use equirectangular images generated by the Theta camera for 3D reconstruction.

(I suppose equirectangular images are very useful for robust SfM (Structure from Motion).)
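For reference, one reason equirectangular images are convenient for SfM is that every pixel maps to a viewing ray by a closed-form formula, with no lens intrinsics to estimate (assuming the image is an ideal equirectangular projection). The flip side is that any extra warp applied during stitching directly bends these rays. Below is a minimal NumPy sketch of that mapping; the function name, the pixel-centre convention, and the axes (x toward the image centre column, y left, z up) are assumptions, so please check them against whatever SfM tool you use.

```python
import numpy as np


def equirect_pixel_to_bearing(u, v, width: int, height: int) -> np.ndarray:
    """Map equirectangular pixel coordinates (u = column, v = row) to unit bearing vectors.

    Assumed convention: pixel centres at integer + 0.5, longitude in [-pi, pi) from left
    to right, latitude from +pi/2 (top row) to -pi/2 (bottom row); axes x forward
    (centre column), y left, z up, so increasing u turns to the right (toward -y).
    """
    u = np.asarray(u, dtype=np.float64)
    v = np.asarray(v, dtype=np.float64)
    lon = (u + 0.5) / width * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (v + 0.5) / height * np.pi
    x = np.cos(lat) * np.cos(lon)
    y = -np.cos(lat) * np.sin(lon)
    z = np.sin(lat)
    # Last axis holds (x, y, z); every vector has unit length by construction.
    return np.stack([x, y, z], axis=-1)
```

It accepts scalars or whole pixel grids (for example from `np.meshgrid`), so all rays of an image can be computed at once.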

But for such a purpose, unknown distortion can affect the 3D reconstruction quality.

So I would like to know the details…

Note that I tried this with only our one Z1 camera, so I suppose the behavior may differ on other Z1 cameras.

If someone has information, please help us!!