This blog post was originally written in Japanese by Atsushi Izumihara, who goes by the ID AMANE.

This is a community translation into English.

Introduction

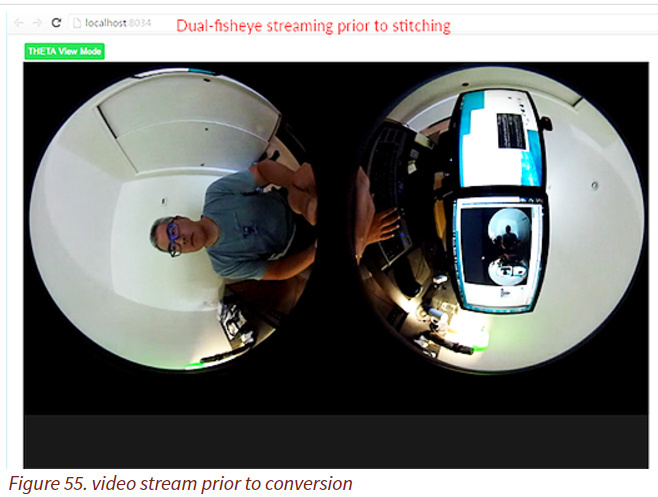

When the THETA S came out, I tried to use it as a USB camera and stream it over WebRTC. However, I had trouble mapping the dual-fisheye stream onto a spherical surface.

Editor’s note:

For most people, this was solved with THETA UVC Blender and UVC FullHD Blender. This is the problem the author is trying to solve:

An alternate solution is here.

After a while, I came across this article and cried out, “Thank you!”

Editor’s note: An English translation of the article above is here.

This was exactly what I was looking for.

However, when I opened the page, the three.js library was not loading correctly, some code had been reverted in the Git history, and it did not work properly. So I decided to fix it so that I could get it running myself.

Today’s article is a small project in which I tried standing on the shoulders of a giant. Thank you to the original author, mechamogera.

Finished project

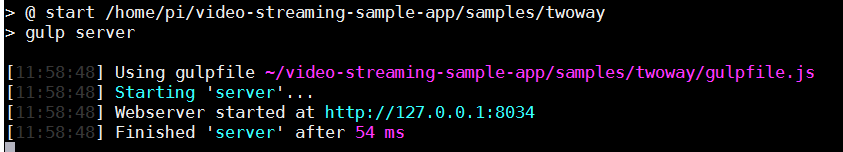

Here’s the code on GitHub. The original was hard to manage as a Gist, so I moved it into a Git repository.

Recently, getUserMedia only works on pages served over HTTPS or from localhost, so the page has to be served from a local server. Get the code from GitHub and try it with npm http-server or a similar tool.

Here is a recording of it in action:

https://www.instagram.com/p/BArmbUMw_L4/

Fixes

Environment

- three.js r73

- RICOH THETA S

- Google Chrome

Texture mapping to sphere

The basic approach is the same as in the original article, but in the latest revision the main code in theta-view.js has been deleted. (Maybe a commit mistake?) The code still exists in the previous revision, so I used that.
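The heart of the mapping is deciding, for each point on the sphere, which pixel of the dual-fisheye frame it should show. Below is a minimal sketch of that idea, not the code from theta-view.js. It assumes an ideal equidistant fisheye (distance from the circle’s center proportional to the angle from the lens axis), exactly 180° per lens, lens axes along +y and -y, and a hypothetical layout of two 640-pixel circles side by side in a 1280x720 frame. `fisheyeUV`, `dualFisheyeUV`, and the layout constants are all illustrative names and numbers, not measured THETA S values.

```javascript
// Sketch: map a unit direction (x, y, z) on the sphere to UV coordinates in a
// dual-fisheye frame. Assumes an ideal equidistant fisheye covering exactly
// 180 degrees per lens; the layout numbers are hypothetical placeholders.
const FRAME_W = 1280;
const FRAME_H = 720;

// Hypothetical layout: the front lens fills the left half, the back lens the
// right half. The back image is mirrored horizontally relative to the front.
const FRONT_LENS = { cx: 0.25, cy: 0.5, radiusPx: 320, axisSign: 1, mirror: 1 };
const BACK_LENS = { cx: 0.75, cy: 0.5, radiusPx: 320, axisSign: -1, mirror: -1 };

function fisheyeUV(x, y, z, lens) {
  // Angle between the direction and this lens's optical axis (+y or -y).
  const theta = Math.acos(Math.max(-1, Math.min(1, lens.axisSign * y)));
  // Equidistant projection: 90 degrees off-axis lands on the circle's rim (r = 1).
  const r = theta / (Math.PI / 2);
  // Unit direction in the image plane; exactly on the axis any direction works.
  const rho = Math.hypot(x, z);
  const dx = rho === 0 ? 0 : x / rho;
  const dz = rho === 0 ? 0 : z / rho;
  return {
    u: lens.cx + lens.mirror * dx * r * (lens.radiusPx / FRAME_W),
    v: lens.cy + dz * r * (lens.radiusPx / FRAME_H),
  };
}

// Use the lens that faces the direction (the seam is the plane y = 0).
function dualFisheyeUV(x, y, z) {
  return y >= 0 ? fisheyeUV(x, y, z, FRONT_LENS) : fisheyeUV(x, y, z, BACK_LENS);
}
```

In a three.js setup, a function like this would be evaluated for each vertex of a sphere geometry to fill its UVs. Depending on the texture’s orientation, `v` may need to be flipped to `1 - v`. The centers and radius are the kind of numbers one would tune by eye to line up the seam.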

Adjustment of Stitching Seam

With the original code as-is, the image was cropped a little, so I adjusted the numbers until it looked right. Please see the code for details. I have not done any smoothing of the seam yet, but I would like to!
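Seam smoothing is not part of this project. One common approach, sketched here only as an illustration, is to cross-fade the two lenses in a narrow band around the seam. This assumes each lens actually captures slightly more than 180°, so that both images contain that band. The 5° half-width and the helper names are hypothetical, and in practice the weight would be computed per pixel in a shader rather than in JavaScript.

```javascript
// Sketch: cross-fade weights around the seam between the two lenses.
// Assumes both lenses see a band around the seam plane y = 0.
const SEAM_HALF_WIDTH_DEG = 5; // hypothetical overlap half-width, in degrees

// Hermite smoothstep: 0 below edge0, 1 above edge1, smooth in between.
function smoothstep(edge0, edge1, x) {
  const t = Math.min(1, Math.max(0, (x - edge0) / (edge1 - edge0)));
  return t * t * (3 - 2 * t);
}

// Weight of the front lens for a unit direction with component y.
// asin(y) is the signed angle between the direction and the seam plane.
function frontLensWeight(y) {
  const deg = (Math.asin(Math.max(-1, Math.min(1, y))) * 180) / Math.PI;
  return smoothstep(-SEAM_HALF_WIDTH_DEG, SEAM_HALF_WIDTH_DEG, deg);
}

// Blend two RGB samples (arrays of numbers) taken from the front and back images.
function blendColor(front, back, y) {
  const w = frontLensWeight(y);
  return front.map((c, i) => w * c + (1 - w) * back[i]);
}
```

Exactly on the seam both lenses contribute equally, and outside the band only one lens is used, so the result matches the unblended mapping away from the seam.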

Getting the THETA S USB live stream

For this test, I display video from a THETA S connected locally over USB. The stream is acquired with WebRTC’s getUserMedia and fed into a <video> element. The <video> element itself is not displayed; instead, three.js maps its frames onto the sphere and draws them into a canvas created from JavaScript.
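As a rough sketch of that flow, the code below picks the camera, opens it with getUserMedia, and attaches it to a hidden <video> element. It uses the current `navigator.mediaDevices` API rather than whatever the original project used, and it assumes the THETA can be recognized by the word “THETA” in its device label. `pickThetaDevice` and `startThetaVideo` are hypothetical helper names.

```javascript
// Sketch: feed a locally connected THETA S into a <video> element for three.js.
// Assumption: the THETA's device label contains "THETA". Labels are only filled
// in after camera permission has been granted, so the first run may fall back
// to the first camera in the list.
function pickThetaDevice(devices) {
  const cameras = devices.filter((d) => d.kind === "videoinput");
  return cameras.find((d) => /theta/i.test(d.label)) || cameras[0] || null;
}

async function startThetaVideo(video) {
  // getUserMedia is only available in secure contexts (HTTPS or localhost).
  if (!window.isSecureContext || !navigator.mediaDevices) {
    throw new Error("getUserMedia needs a page served over HTTPS or from localhost");
  }
  const devices = await navigator.mediaDevices.enumerateDevices();
  const theta = pickThetaDevice(devices);
  const stream = await navigator.mediaDevices.getUserMedia({
    audio: false,
    video: theta ? { deviceId: { exact: theta.deviceId } } : true,
  });
  video.srcObject = stream;
  video.muted = true; // muted video is allowed to autoplay
  await video.play();
  return video; // not added to the page; three.js draws its frames instead
}
```

In current three.js releases the element can then be wrapped in `new THREE.VideoTexture(video)` and used as the `map` of the sphere’s material.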

Bonus: FOV adjustment

This makes it possible to widen or narrow the viewing angle. Since the mouse wheel is already assigned to another action in OrbitControls.js, the FOV is controlled with the up and down arrow keys instead.

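```javascript
// Zoom with the up/down arrow keys, since OrbitControls.js already uses the
// mouse wheel. Assumes `camera` is the THREE.PerspectiveCamera rendering the scene.
var FOV_MIN = 30;  // narrowest view (most zoomed in), in degrees
var FOV_MAX = 130; // widest view (most zoomed out), in degrees

function keydown(e) {
  switch (e.keyCode) {
    case 40: // down arrow: zoom out
      camera.fov = Math.min(camera.fov + 1, FOV_MAX);
      camera.updateProjectionMatrix(); // required after changing fov
      break;
    case 38: // up arrow: zoom in
      camera.fov = Math.max(camera.fov - 1, FOV_MIN);
      camera.updateProjectionMatrix();
      break;
  }
}

document.addEventListener("keydown", keydown);
```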

Conclusion

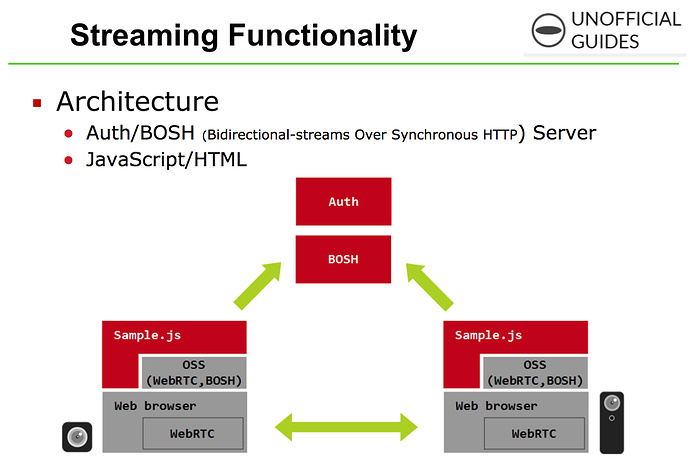

Bidirectional

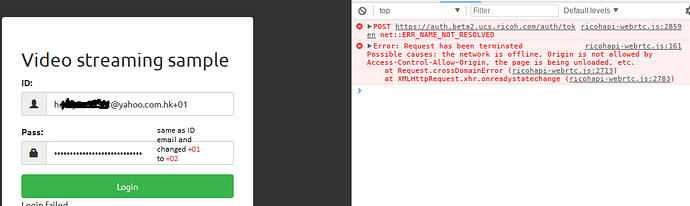

This time, setting up an HTTPS server was a hassle, so I stopped at displaying the local camera. But simply by using the video received from the remote side over WebRTC as the texture, you can complete an interactive 360° web chat.
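On the receiving side, the only change is where the <video> element gets its stream. A minimal sketch, assuming an `RTCPeerConnection` that has already been negotiated through some signaling channel (not shown, and not part of this project); `attachRemoteStream` is a hypothetical helper name.

```javascript
// Sketch: show the remote peer's THETA stream instead of the local camera.
// Assumes `pc` is an RTCPeerConnection already connected via your own signaling.
function attachRemoteStream(pc, video) {
  pc.ontrack = (event) => {
    // The track event fires once per track (e.g. audio and video), usually
    // with the same MediaStream, so only attach it once.
    const [stream] = event.streams;
    if (stream && video.srcObject !== stream) {
      video.srcObject = stream;
      video.play().catch(() => {
        // Autoplay may be blocked until the user interacts with the page.
      });
    }
  };
}
```

The same <video> element can then serve as the texture source exactly as with a local camera, so the sphere-mapping code does not need to change.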

Continuous operation and usage

I have kept the THETA S in USB live streaming mode for more than 8 hours straight. It seems fine so far, although my earlier tests had plenty of twists and turns. I will keep running it continuously for a while longer.

I thought it could serve as an always-on surveillance camera, but for now that seems a little inconvenient, because putting the camera into live streaming mode requires pressing its hardware buttons in a special way.

Resolution

When mapped onto a sphere, the 1280x720 USB stream does not feel like nearly enough resolution. (Editor’s note: The current resolution is now 1920x1080. 4K was announced recently for a future THETA model.) Even Full HD HDMI live streaming through an HDMI capture device probably will not be enough. There is still room for further development in this area, and I am looking forward to the future! (Editor’s note: See the announcement of 4K live streaming.)
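To put a rough number on “not enough”, here is a back-of-the-envelope sketch. It assumes each lens circle spans half the frame width and covers 180° with an equidistant projection, and a hypothetical 1280-pixel-wide browser view showing a 60° field of view. These are illustrative assumptions, not measurements.

```javascript
// Rough angular-resolution estimate under hypothetical assumptions:
// each lens circle is frameWidth / 2 pixels across and covers 180 degrees
// with an equidistant projection, so density is uniform along a diameter.
function sourcePixelsPerDegree(frameWidth) {
  return frameWidth / 2 / 180;
}

// Pixels per degree at the center of a perspective (rectilinear) view.
function viewPixelsPerDegree(viewportWidth, fovDeg) {
  const halfFovRad = (fovDeg / 2) * (Math.PI / 180);
  const focalPx = viewportWidth / 2 / Math.tan(halfFovRad);
  return focalPx * (Math.PI / 180);
}

const needed = viewPixelsPerDegree(1280, 60);
for (const width of [1280, 1920]) {
  const available = sourcePixelsPerDegree(width);
  console.log(
    `${width}px frame: ${available.toFixed(2)} px/deg available, ` +
      `${needed.toFixed(2)} px/deg needed, ~${(needed / available).toFixed(1)}x upscaling`
  );
}
```

Under these assumptions, the 1280x720 stream has to be magnified roughly five times at the center of the view, and even a 1920x1080 stream more than three times, which matches the impression that neither is sharp enough.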