Modeling from 360 Degree Camera Images

Originally posted by @yukimituki11 on Qiita (in Japanese). I translated it with Google Translate and my own Japanese language skills to make the information available here, since I believe modeling and measurement are important in construction and real estate 360 degree applications. I have not yet tested this type of modeling personally. The article uses Blender, a free and open-source 3D computer graphics software tool set used for creating animated films, visual effects, art, 3D-printed models, motion graphics, interactive 3D applications, virtual reality, and, formerly, video games. It is available for Linux, macOS, Windows, BSD, and Haiku.

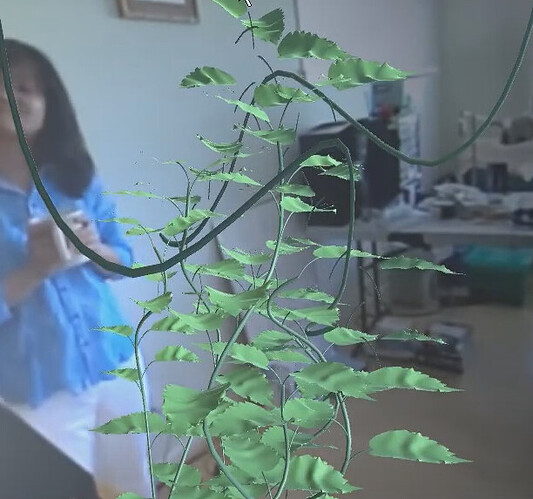

Spherical cameras like RICOH THETA allow you to shoot in 360 degrees: up, down, left and right all at once.

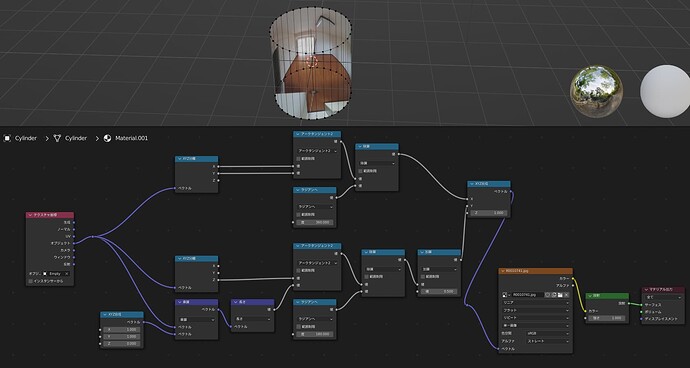

This article explains how to roughly reconstruct the photographed space using Blender’s shader nodes.

Object-based coordinates and coordinate transformations

A 360 degree camera delivers its captured data as an image in equirectangular projection.

Blender’s shader can also output coordinates relative to a specific object.

In other words, if you can correctly relate the shooting position to the positions of the objects, you can recreate the photographed space.
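As a rough illustration of what "coordinates based on a specific object" means, the sketch below expresses a world-space point relative to a camera reference placed at the shooting position. It is plain Python, not Blender's API; the function name `to_camera_space` and the `yaw_deg` parameter are hypothetical, and the camera is assumed to be level (no tilt).

```python
import math

def to_camera_space(point, camera_pos, yaw_deg=0.0):
    """Express a world-space point relative to the camera reference.

    point, camera_pos: (x, y, z) tuples in world space, Z up.
    yaw_deg: hypothetical parameter, the camera heading about the
    vertical Z axis in degrees. Tilt is assumed to be zero.
    """
    # Translate so that the camera sits at the origin.
    dx = point[0] - camera_pos[0]
    dy = point[1] - camera_pos[1]
    dz = point[2] - camera_pos[2]
    # Undo the camera heading by rotating about Z by -yaw.
    a = math.radians(-yaw_deg)
    x = dx * math.cos(a) - dy * math.sin(a)
    y = dx * math.sin(a) + dy * math.cos(a)
    return (x, y, dz)
```

For example, a point 2 m in front of and 1.5 m above a camera at `(1, 0, 1.5)` is `to_camera_space((1, 2, 3), (1, 0, 1.5))`, which gives a camera-relative position of about `(0, 2, 1.5)`.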

The formula that converts an arbitrary point (x, y, z) in space to equirectangular coordinates [u, v] is:

u = atan(x/y)

v = atan(z/sqrt(x^2+y^2))

If you reproduce this with Blender’s shader node, it will look like this

u and v are divided by 2π (360 degrees) and π (180 degrees) respectively. This is because angles in the shader are in radians, while texture coordinates are expressed as values between 0.0 and 1.0.

Subtracting 0.5 from the y coordinate is an offset so that the camera position corresponds to the center of the image.
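The conversion can also be written as a small Python function to check the numbers outside Blender. This is a minimal sketch, not the author's node setup. It swaps `atan(x/y)` for `atan2(x, y)` so that directions behind the camera (y ≤ 0) are handled too, and it adds 0.5 to both coordinates so the results land in the 0.0 to 1.0 range; with a repeating texture that differs from subtracting 0.5 only by a whole wrap. The function name `direction_to_uv` is hypothetical.

```python
import math

def direction_to_uv(x, y, z):
    """Map a camera-relative point (x, y, z) to equirectangular (u, v).

    Assumed convention: +Y is the forward direction (image center),
    +Z is up, and u, v are normalized to the range 0.0 to 1.0.
    """
    # Horizontal angle; equals atan(x / y) when y > 0.
    lon = math.atan2(x, y)
    # Elevation above the horizon; equals atan(z / sqrt(x^2 + y^2)).
    lat = math.atan2(z, math.hypot(x, y))
    # Radians to texture coordinates: divide by 2*pi and pi, then center.
    u = lon / (2.0 * math.pi) + 0.5
    v = lat / math.pi + 0.5
    return u, v
```

With this convention, a point straight ahead such as `(0, 1, 0)` maps to the image center `(0.5, 0.5)`, and a point directly overhead maps to `v = 1.0`.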

The screenshot was taken with the cylinder's faces flipped and its back faces hidden.

Modeling

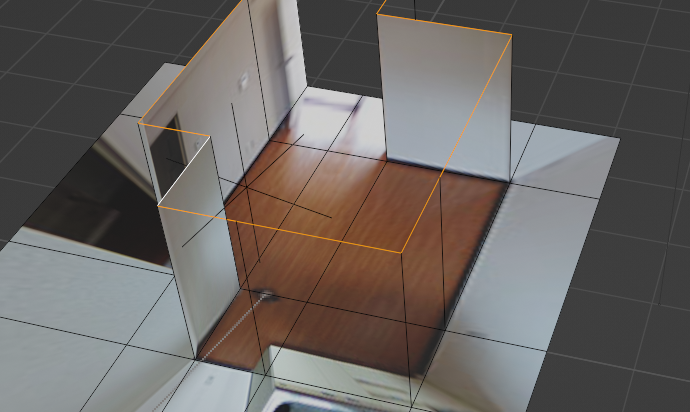

If you only need an approximate reproduction of the scene, projecting onto a cylinder or sphere may be enough.

One of Blender's advantages is that you can edit the model without changing its shading, and I make use of that here.

Here is an example of how to create a more detailed model.

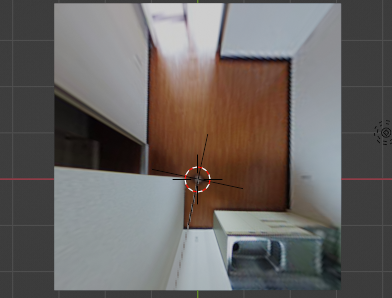

First, move the Empty object that was set as the reference earlier to the height of the camera at the time of shooting.

Then I created a surface at ground level.

The general shape of the floor seems to match.
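The reason the floor lines up once the camera height is right can be checked numerically: every pixel below the horizon defines a ray from the camera, and that ray meets the ground plane at exactly one point. The sketch below is an illustration under assumptions, not part of the original workflow. It assumes u and v are normalized to 0.0 to 1.0 with the forward (+Y) direction at the image center, Z up, and a level floor; the names `uv_to_direction` and `floor_point` are hypothetical.

```python
import math

def uv_to_direction(u, v):
    """Turn normalized equirectangular (u, v) into a unit direction."""
    lon = (u - 0.5) * 2.0 * math.pi   # horizontal angle from +Y
    lat = (v - 0.5) * math.pi         # elevation above the horizon
    return (math.sin(lon) * math.cos(lat),
            math.cos(lon) * math.cos(lat),
            math.sin(lat))

def floor_point(u, v, camera_height):
    """Camera-relative point where the pixel's ray hits the floor.

    The floor is assumed to be the plane z = -camera_height.
    Returns None for pixels at or above the horizon.
    """
    dx, dy, dz = uv_to_direction(u, v)
    if dz >= 0.0:
        return None  # the ray never reaches the floor
    t = camera_height / -dz  # distance along the ray to the plane
    return (dx * t, dy * t, -camera_height)
```

For a camera 1.5 m above the floor, a pixel at the horizontal center and 45 degrees below the horizon, `floor_point(0.5, 0.25, 1.5)`, lands about 1.5 m in front of the camera.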

You can create a wall by adding a vertical plane at the boundary with the floor. In this way, it is possible to recreate the space, including areas that have not been measured, based on known data from the shooting location.
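One way to decide how tall to make such a vertical plane is to read two pixels in the same image column: where the wall meets the floor and where it meets the ceiling. The sketch below is an illustration under assumptions rather than a step from the original article. It assumes v is normalized to 0.0 to 1.0 with the horizon at 0.5, a level floor, and a perfectly vertical wall; the name `wall_height` is hypothetical.

```python
import math

def wall_height(v_floor, v_ceiling, camera_height):
    """Estimate wall height from two pixels in the same image column.

    v_floor: v of the wall/floor boundary (must be below the horizon).
    v_ceiling: v of the wall/ceiling boundary in the same column.
    camera_height: camera height above the floor, in scene units.
    """
    lat_floor = (v_floor - 0.5) * math.pi
    lat_ceiling = (v_ceiling - 0.5) * math.pi
    if lat_floor >= 0.0:
        raise ValueError("the floor boundary must be below the horizon")
    # Horizontal distance from the camera to the wall.
    distance = camera_height / math.tan(-lat_floor)
    # Height of the ceiling boundary measured from the floor.
    return camera_height + distance * math.tan(lat_ceiling)
```

With the camera at 1.5 m, a floor boundary 45 degrees below the horizon and a ceiling boundary 45 degrees above it, `wall_height(0.25, 0.75, 1.5)` gives roughly 3.0 m.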

For the video at the beginning, the wall positions were known from the floor plan, so I could place the walls directly. Finer details are created by adding edges to the walls and extruding them.

Usage Example

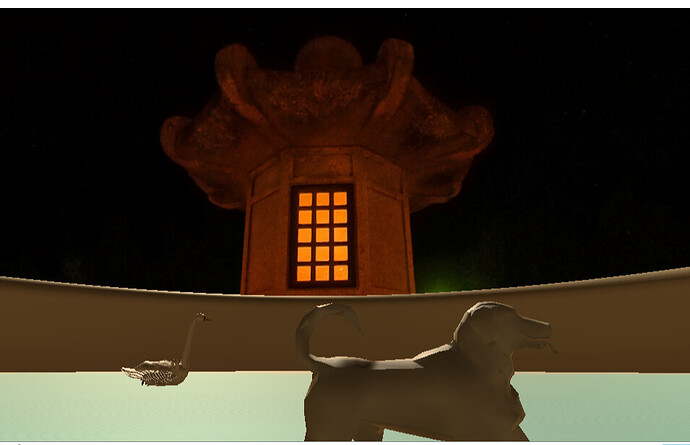

This example is a place I was once considering moving to; I used the model to see how furniture would fit there.

By combining the results from multiple camera positions, I was able to model a wider area than in the examples shown so far in about 2 hours.

Blender also has an add-on that displays the view in a VR headset, so I also checked how the furniture would fit in VR.

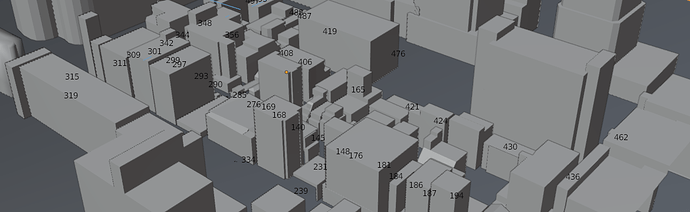

I also used this method to photograph a built-up area and recreate how it would look from the ground.

Although this is a simple method, I think it can be applied effectively.

Note that, because of the optical characteristics of the lenses, images captured by a 360 degree camera do not follow an exact equirectangular (equidistant cylindrical) projection.

I created calibration data and used it for this work. Please refer to the previous article for an overview of how to create and use calibration data: Blenderでレンズの歪み補正データ(STmap)を作成してみる #Blender - Qiita ("Trying to create lens distortion correction data (STmap) in Blender").
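For readers unfamiliar with the term, an STmap is an image that stores, for every output pixel, the normalized source coordinates (s, t) to sample from. The sketch below shows only that lookup idea in plain Python with nearest-neighbour sampling; it is not the calibration procedure from the linked article. It assumes t is measured from the first row of the image (real STmaps often measure it from the bottom), and the name `apply_stmap` is hypothetical.

```python
def apply_stmap(source, stmap):
    """Resample `source` through an STmap using nearest-neighbour lookup.

    source: 2D list of pixel values, indexed as source[row][column].
    stmap: 2D list of (s, t) pairs in the range 0.0 to 1.0, one per
    output pixel. Assumption: t = 0 is the first row of `source`.
    """
    src_h = len(source)
    src_w = len(source[0])
    out = []
    for row in stmap:
        out_row = []
        for s, t in row:
            # Convert normalized coordinates to clamped pixel indices.
            sx = min(src_w - 1, max(0, int(s * src_w)))
            sy = min(src_h - 1, max(0, int(t * src_h)))
            out_row.append(source[sy][sx])
        out.append(out_row)
    return out
```

An identity STmap (each pixel pointing at its own center) returns the image unchanged, while a calibrated map would shift each lookup slightly to compensate for the lens.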

I hope it will be of some help!