Working project with 200 ms latency, by @Jake_Kenin

https://ideas.theta360.guide/blog/award201905/

Other approaches

I suggest you read through Jake’s thread first, as it discusses the latency issue and different techniques to get around it.

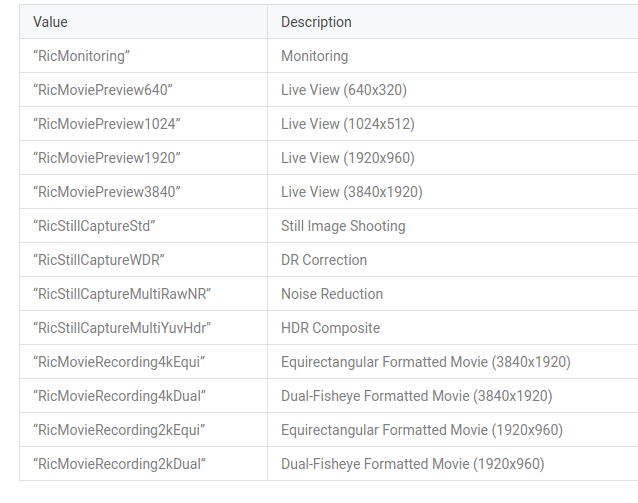

I have not tried this, but you can experiment with the Camera API, which gives you direct access to the camera through the Android OS.

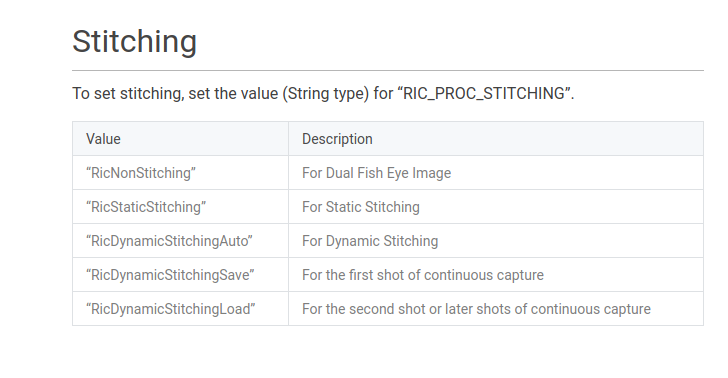

I do not think you can turn off stitching for Live View, but I have not tried.

You can use this GitHub repo as a base:

Note that even when the RICOH THETA is connected directly to Unity with a USB cable, I am getting a latency of at least 0.4 seconds on Windows 10, using the USB driver supplied by RICOH and a 4K video stream.
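To put these numbers in perspective, here is a quick sketch that converts a latency in milliseconds into frames of delay. The 30 fps frame rate is my assumption, not something I measured, so check the frame rate your camera actually streams at. `frames_of_delay` is just a hypothetical helper for illustration.

```python
# Hypothetical helper: how many video frames elapse during a given latency.
# The 30 fps default is an assumption, not a measured value.
def frames_of_delay(latency_ms: int, fps: int = 30) -> float:
    """Return the number of frames that elapse during `latency_ms` milliseconds."""
    return latency_ms * fps / 1000


# Latencies mentioned in this thread: about 200 ms (Jake's project) and
# at least 400 ms (USB to Unity on Windows 10 with a 4K stream).
for label, ms in [("Jake's project", 200), ("USB + Unity, 4K", 400)]:
    print(f"{label}: {ms} ms = {frames_of_delay(ms):.0f} frames at 30 fps")
```

At an assumed 30 fps, 400 ms means the image you see is about 12 frames behind the scene, versus about 6 frames at 200 ms.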

You may get lower latency with RTSP.