@codetricity, my testing showed that RTMP and RTSP could deliver smooth, high-resolution video (tested using the WebAPI plugin and VLC), but the latency was too high (more than 1 second) to be used safely with a drone moving at high speed. With MJPEG through the Ricoh API, on the other hand, I can prioritize the most recent frame to minimize the delay (~250 ms at 1920x960 @ 8 fps and ~100 ms at 1024x512 @ 30 fps, both at a range of 0.25 miles). Sorry, I don't have any formal benchmarks on hand. If I were to write the H.265 plugin, I would make sure there was a way to synchronize the clocks so I could properly timestamp each frame and accurately measure the delay. Also, thanks for promoting this on the ideas page!
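For the clock-synchronization idea, here is a minimal sketch assuming an NTP-style request/reply exchange between the base station and the camera side. The exchange, the `SyncSample` class, and the helper functions are all hypothetical (nothing here is part of the Ricoh API), and the offset estimate assumes the network path is roughly symmetric.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SyncSample:
    """One request/reply exchange; all times in seconds.

    t0: base station sends request  (base station clock)
    t1: camera receives request     (camera clock)
    t2: camera sends reply          (camera clock)
    t3: base station receives reply (base station clock)
    """
    t0: float
    t1: float
    t2: float
    t3: float

    def offset(self) -> float:
        # Camera clock minus base station clock, assuming a symmetric path.
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) / 2

    def round_trip(self) -> float:
        # Network round trip, excluding time spent processing on the camera.
        return (self.t3 - self.t0) - (self.t2 - self.t1)


def best_offset(samples: list[SyncSample]) -> float:
    # The exchange with the shortest round trip is least distorted by queuing.
    return min(samples, key=lambda s: s.round_trip()).offset()


def frame_latency(capture_ts_camera: float, display_ts_base: float, offset: float) -> float:
    # Convert the camera's capture timestamp to base station time, then subtract.
    return display_ts_base - (capture_ts_camera - offset)
```

Taking the sample with the smallest round trip is a common heuristic: a short round trip leaves little room for asymmetric delay to bias the offset.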

- Why did you choose to build this? You say it’s for your RIT Senior Design Project. Can you tell me a little bit more about that?

In their senior year, RIT engineering students complete a senior design project. I took the multidisciplinary senior design course, where students from different engineering majors work together on a sponsored project. Projects are proposed by students, faculty members, or outside companies, and the faculty then assigns students to projects based on their co-op/internship experience. This project was proposed and sponsored by Lockheed Martin, and I'm sure it will sit on a shelf there while smaller, faster companies perfect the idea.

- Do you have more pictures of the rig? I'm interested in seeing how the THETA is housed in the gimbal, and also the base station antenna.

Here is a GIF of the gimbal with the camera adapter in it.

https://i.imgur.com/U2E7WJB.mp4

Here it is from another angle.

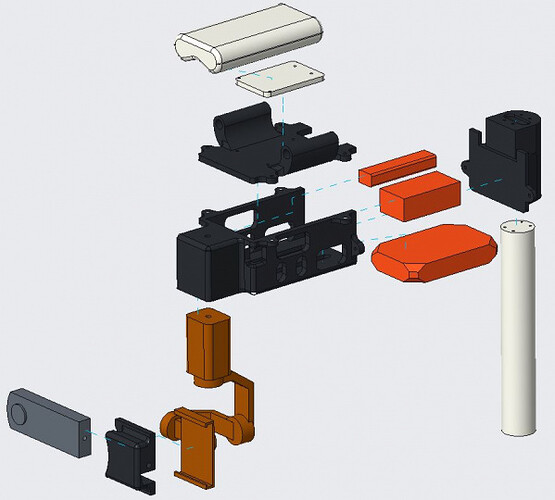

Here is the exploded view of the payload.

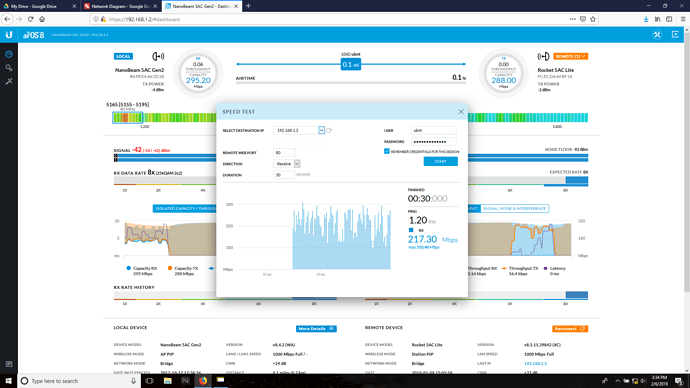

The base station setup is really simple. It consists solely of a Ubiquiti NanoBeam 5AC connected to the base station PC via an Ethernet cable. We literally just had a team member aim the antenna at the drone. Since the entire setup was designed to work offline, we ran a simple DHCP server on the base station PC to assign an IP address to the camera.

- Is it both recorded to the THETA and live streaming to the VR headset? Or is it one or the other at a time?

Unfortunately, it's one or the other. If you are streaming video via the Ricoh API, you cannot also record video to the camera's storage. That is another limitation of using the Ricoh API; you should be able to do both simultaneously with a custom plugin. That said, the stream shown in the VR headset is automatically recorded to the base station PC.

- You stated that it gets “less than 200ms of lag.” Is that mostly due to the Ubiquiti Omni antenna? What software components would you say are most important for reducing lag?

From my VERY amateur research, two things go a long way toward reducing lag:

- Protocol and implementation: Some implementations have a lot of overhead, and the usual tradeoff is between smoothness, resolution, frame rate, and latency. I can't point to any specific software components that would speed things up, because my implementation is pretty basic: I treat the Ricoh API as a black box, receive its video, grab each frame, and update an A-Frame videosphere with it. I haven't tested the delay on the A-Frame side specifically, since I was short on time and more or less stuck with the Ricoh API.

- Transmission hardware: You want as much gain as possible regardless of the drone's orientation relative to the ground antenna (hence the omni antenna on the drone), and minimal network latency with as much bandwidth as possible. In our case, the latency across the network itself was only ~1 ms.
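The "grab each frame" step, combined with prioritizing the most recent frame, can be sketched as follows. This assumes the stream arrives as raw bytes containing concatenated JPEGs (as an MJPEG live preview typically does) and splits frames on the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers instead of parsing multipart headers. `MjpegSplitter` and `LatestFrame` are hypothetical names, not part of the Ricoh API or A-Frame, and marker splitting is a simplification that would misfire on JPEGs with embedded thumbnails.

```python
from __future__ import annotations

import threading
from typing import Optional

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


class MjpegSplitter:
    """Accumulates stream chunks and returns each complete JPEG frame."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> list[bytes]:
        self._buf.extend(chunk)
        frames = []
        while True:
            start = self._buf.find(SOI)
            if start < 0:
                # No frame start yet: drop junk (e.g. multipart headers), but keep
                # the last byte in case it is the first half of a split marker.
                del self._buf[:-1]
                break
            end = self._buf.find(EOI, start + 2)
            if end < 0:
                # Incomplete frame: discard bytes before SOI and wait for more data.
                del self._buf[:start]
                break
            frames.append(bytes(self._buf[start:end + 2]))
            del self._buf[:end + 2]
        return frames


class LatestFrame:
    """Single-slot buffer: a new frame overwrites one that was never displayed."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._frame: Optional[bytes] = None
        self.dropped = 0

    def put(self, frame: bytes) -> None:
        with self._cond:
            if self._frame is not None:
                self.dropped += 1  # stale frame is skipped instead of queued
            self._frame = frame
            self._cond.notify()

    def take(self, timeout: Optional[float] = None) -> Optional[bytes]:
        # Returns the newest frame, or None if none arrives before the timeout.
        with self._cond:
            self._cond.wait_for(lambda: self._frame is not None, timeout)
            frame, self._frame = self._frame, None
            return frame
```

A network reader thread would call `feed()` on each received chunk and `put()` every returned frame, while the display loop calls `take()`. Because the slot holds only one frame, a slow renderer skips stale frames instead of falling further and further behind.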

Getting that kind of signal strength at a distance on a moving target is difficult, so in practice we usually saw a throughput of only ~40 Mbps, and the latency varied. Ubiquiti uses custom communication protocols to achieve high throughput at range between stationary endpoints, which explains why the performance varied so much with our moving target.
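To see why throughput matters for latency, here is a rough back-of-the-envelope sketch: at a given throughput, each frame needs at least `frame_bits / throughput` to cross the link, and the frame rate caps the average frame size the link can sustain. The ~40 Mbps figure comes from our tests; the function names are hypothetical, the frame sizes you plug in are assumptions (we didn't measure them), and propagation and protocol overhead are ignored.

```python
def max_avg_frame_bytes(throughput_mbps: float, fps: float) -> float:
    """Largest average frame size (bytes) the link can sustain at this frame rate."""
    bits_per_second = throughput_mbps * 1_000_000
    return bits_per_second / fps / 8


def transmit_time_ms(frame_bytes: int, throughput_mbps: float) -> float:
    """Minimum time (ms) to push one frame through the link at this throughput."""
    return frame_bytes * 8 / (throughput_mbps * 1_000_000) * 1000
```

For example, at ~40 Mbps and 30 fps the average frame has to stay under roughly 167 kB, and a hypothetical 500 kB frame would take at least 100 ms just to transmit.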

Unfortunately, I didn't test any other transmission hardware, but I'd imagine most other backhaul network hardware would perform comparably to the Ubiquiti gear. When I tested my streaming software using just the THETA's built-in Wi-Fi, I got performance similar to the Ubiquiti hardware. That said, the THETA's built-in Wi-Fi wouldn't have had the range we needed.

- Did you test with more than one person viewing at a time? It’s capable of that?

Nope. It should be possible, but due to VR system limitations you wouldn't be able to do it all from one PC. I haven't thought through the details.

- In the flight test video, I noticed the guy in yellow (not controlling the drone, as far as I can tell) starts sitting/lying down at around 4:22. That's not related to the test in any way, correct? Or is it?

Nope, I think he was going to pick up a camera being used for filming lol.