Alright, so I have made some headway, sort of.

Currently I am able to authenticate with the Theta from JavaScript using an Electron app. I hit some snags along the way, though.

First, to use A-Frame with my Oculus Rift, I need to host the page on a live web server rather than just loading the file directly into the browser (i.e., file://my_web_page.html). To do this I used an npm package called live-server. But this led to another issue that didn't show up until I was actually hosting the page: CORS errors.

CORS errors occur when code served from one origin (scheme, domain, and port) tries to access an API on a different origin whose responses don't include headers allowing it. So if I were loading the JS directly in the browser using file://, hosting the page on the Theta V's web server, or using a Node app, this wouldn't happen. But again, I needed A-Frame, so I needed to actually host the page. I browsed the forums and found an old post that suggested using a reverse proxy so that all the requests and responses appear to come from the same origin. To do this, I used another npm package called cors-anywhere, which sets up a simple proxy in a Node app that forwards requests to the target and adds the CORS headers the browser expects. Now, any time I want to access the Theta's API, I go through http://0.0.0.0:8080/api_endpoint/goes_here. The result is a pretty gnarly URL: http://0.0.0.0:8080/192.168.50.227/osc/info, where 192.168.50.227 is the IP of the Theta on my network.
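To keep the proxy prefix in one place, the URL construction could be wrapped in a small helper. This is a minimal TypeScript sketch, assuming the proxy listens on http://0.0.0.0:8080 as described above and, like cors-anywhere, takes the target host directly from the path; `proxiedThetaUrl` and `PROXY_BASE` are hypothetical names, not part of cors-anywhere or the Theta API.

```typescript
// Address of the local cors-anywhere proxy (the one used in this post).
const PROXY_BASE = "http://0.0.0.0:8080";

/**
 * Build a URL that sends a Theta API request through the local proxy.
 * The proxy takes the target from the path, so the camera's host is placed
 * directly after the proxy's base URL, followed by the API path.
 */
function proxiedThetaUrl(
  cameraHost: string,
  apiPath: string,
  proxyBase: string = PROXY_BASE,
): string {
  const base = proxyBase.replace(/\/+$/, ""); // drop any trailing slashes
  const path = apiPath.startsWith("/") ? apiPath : `/${apiPath}`; // ensure a leading slash
  return `${base}/${cameraHost}${path}`;
}

// Usage: the /osc/info endpoint on the camera at 192.168.50.227.
const infoUrl = proxiedThetaUrl("192.168.50.227", "/osc/info");
console.log(infoUrl); // http://0.0.0.0:8080/192.168.50.227/osc/info
```

Every call site then passes only the camera's IP and the OSC path, so moving the proxy to another port means changing a single constant.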

Finally, I had to implement my own digest authentication code so that I can authenticate with the Theta. Without it, every request to the API just returned 401 Unauthorized. This is a work in progress, and I am looking into whether I can use an existing in-browser digest authentication library instead, since mine doesn't yet have any real session handling and JS is new to me.
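For reference, the core of HTTP Digest authentication (RFC 2617, `qop=auth`, MD5) is small: read the `WWW-Authenticate` challenge from the 401 response, then hash the credentials, nonce, method, and URI into the `Authorization` header. The sketch below is TypeScript and assumes a Node-style `crypto` module, which is available in Electron's main process (and in a renderer with Node integration); a plain browser page would need a separate MD5 implementation, since Web Crypto's `digest()` does not offer MD5. It keeps the last nonce and increments the nonce count (`nc`) on each request, which is one way to get basic session behaviour. `DigestSession` and its methods are hypothetical names, and only the MD5 / `qop=auth` variant is handled.

```typescript
import { createHash, randomBytes } from "crypto";

// Fields we need from a `WWW-Authenticate: Digest ...` challenge.
interface DigestChallenge {
  realm: string;
  nonce: string;
  qop?: string;
  opaque?: string;
  algorithm?: string;
}

// Hex-encoded MD5, as used by the classic Digest scheme.
const md5 = (s: string): string => createHash("md5").update(s).digest("hex");

/** Parse `Digest realm="...", nonce="...", qop="auth"` into its fields. */
function parseDigestChallenge(header: string): DigestChallenge {
  const body = header.replace(/^Digest\s+/i, "");
  const fields: Record<string, string | undefined> = {};
  const re = /(\w+)=(?:"([^"]*)"|([^\s,]+))/g;
  let m: RegExpExecArray | null;
  while ((m = re.exec(body)) !== null) {
    fields[m[1].toLowerCase()] = m[2] ?? m[3]; // quoted or bare value
  }
  const { realm, nonce, qop, opaque, algorithm } = fields;
  if (realm === undefined || nonce === undefined) throw new Error("not a Digest challenge");
  return { realm, nonce, qop, opaque, algorithm };
}

/** Keeps the last challenge so later requests can reuse its nonce. */
class DigestSession {
  private readonly username: string;
  private readonly password: string;
  private challenge?: DigestChallenge;
  private nonceCount = 0;

  constructor(username: string, password: string) {
    this.username = username;
    this.password = password;
  }

  /** Store the challenge from a 401 response and restart the nonce count. */
  setChallenge(wwwAuthenticate: string): void {
    this.challenge = parseDigestChallenge(wwwAuthenticate);
    this.nonceCount = 0;
  }

  /** Build the Authorization header value for one request. */
  authorize(method: string, uri: string, cnonce: string = randomBytes(8).toString("hex")): string {
    const c = this.challenge;
    if (!c) throw new Error("no challenge yet: read WWW-Authenticate from a 401 first");
    if (c.algorithm && c.algorithm.toUpperCase() !== "MD5") {
      throw new Error(`unsupported algorithm ${c.algorithm}`);
    }
    const ha1 = md5(`${this.username}:${c.realm}:${this.password}`);
    const ha2 = md5(`${method}:${uri}`);
    const parts = [`username="${this.username}"`, `realm="${c.realm}"`, `nonce="${c.nonce}"`, `uri="${uri}"`];
    if (c.qop !== undefined) {
      if (!c.qop.split(",").map((q) => q.trim()).includes("auth")) {
        throw new Error(`unsupported qop ${c.qop}`);
      }
      this.nonceCount += 1;
      const nc = this.nonceCount.toString(16).padStart(8, "0"); // 8 hex digits
      const response = md5(`${ha1}:${c.nonce}:${nc}:${cnonce}:auth:${ha2}`);
      parts.push("qop=auth", `nc=${nc}`, `cnonce="${cnonce}"`, `response="${response}"`);
    } else {
      // Older RFC 2069 form, used when the server sends no qop.
      const response = md5(`${ha1}:${c.nonce}:${ha2}`);
      parts.push(`response="${response}"`);
    }
    if (c.opaque !== undefined) parts.push(`opaque="${c.opaque}"`);
    return `Digest ${parts.join(", ")}`;
  }
}
```

A typical flow would be: send the request once, pass the 401 response's `WWW-Authenticate` header to `setChallenge`, then retry with the `Authorization` header set to `session.authorize("POST", "/osc/commands/execute")`, reusing the session until the camera answers with a fresh 401. When going through the proxy, the URI hashed into the header should presumably be the path the camera sees (for example `/osc/info`), not the proxied `/192.168.50.227/osc/info` path; that is an assumption worth checking against the Theta.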

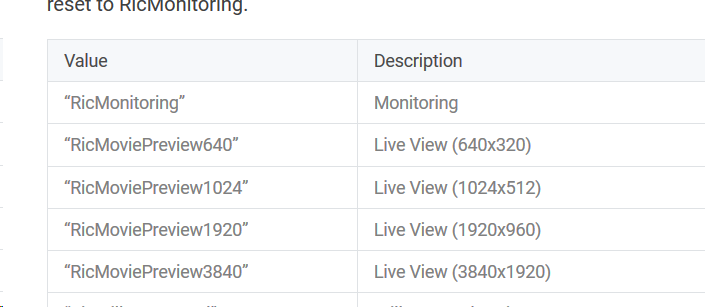

My next step is to work out the whole process of fetching the MJPEG stream, splitting it into individual frames, and using each frame to update the A-Frame scene. There are some issues with this, most notably that the MJPEG stream starts immediately when I request the preview via `camera.getLivePreview`. I don't know how to write a multithreaded application in JS, or whether that is even possible.

So I am looking at using the MJPEG endpoints provided by the Device WebAPI plugin, which can start an MJPEG stream and gives you a URL at which you can access it. I have seen code where you just set the `src` attribute of an A-Frame element to the MJPEG URL and it streams the video. Unfortunately, that means I have to reverse engineer the API the plugin uses, because it isn't well documented for the Theta (at least not the live preview / streaming functionality), and I haven't managed to find the plugin's source code anywhere.
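On the threading question for the `camera.getLivePreview` route: reading a streamed `fetch` response in JavaScript doesn't need threads. `response.body.getReader()` hands over chunks asynchronously, so the page stays responsive while the never-ending preview response keeps arriving. Splitting that stream into frames can be done by scanning for the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers and discarding the multipart boundary text in between. The TypeScript below is a minimal sketch of that approach; it assumes each preview frame is a plain JPEG with no embedded thumbnail or other metadata containing its own `FF D9` (which would cut the frame short), and `MjpegFrameSplitter` and `readMjpeg` are hypothetical names.

```typescript
/** Index of the two-byte marker FF <second> at or after `from`, or -1. */
function indexOfMarker(buf: Uint8Array, second: number, from: number): number {
  for (let i = from; i + 1 < buf.length; i++) {
    if (buf[i] === 0xff && buf[i + 1] === second) return i;
  }
  return -1;
}

/**
 * Splits a raw MJPEG byte stream into JPEG images by scanning for the
 * start-of-image (FF D8) and end-of-image (FF D9) markers. Anything between
 * images (multipart boundaries, part headers) is discarded.
 */
class MjpegFrameSplitter {
  private pending = new Uint8Array(0);

  /** Feed one chunk; returns every frame that this chunk completed. */
  push(chunk: Uint8Array): Uint8Array[] {
    // Append the new bytes to the partial data left over from earlier chunks.
    const merged = new Uint8Array(this.pending.length + chunk.length);
    merged.set(this.pending, 0);
    merged.set(chunk, this.pending.length);
    let buf = merged;

    const frames: Uint8Array[] = [];
    for (;;) {
      const start = indexOfMarker(buf, 0xd8, 0);
      if (start < 0) {
        // No frame start yet; keep a trailing FF in case the marker spans two chunks.
        buf = buf.length > 0 && buf[buf.length - 1] === 0xff ? buf.slice(-1) : new Uint8Array(0);
        break;
      }
      const end = indexOfMarker(buf, 0xd9, start + 2);
      if (end < 0) {
        buf = buf.slice(start); // frame not finished: wait for more bytes
        break;
      }
      frames.push(buf.slice(start, end + 2)); // include the FF D9 marker
      buf = buf.slice(end + 2);
    }
    this.pending = buf;
    return frames;
  }
}

/** Read a (never-ending) preview response and pass each JPEG frame to `onFrame`. */
async function readMjpeg(res: Response, onFrame: (jpeg: Uint8Array) => void): Promise<void> {
  if (!res.body) throw new Error("response has no body");
  const reader = res.body.getReader();
  const splitter = new MjpegFrameSplitter();
  for (;;) {
    const result = await reader.read(); // resolves as each chunk arrives; no threads needed
    if (result.done) break;
    for (const frame of splitter.push(result.value)) onFrame(frame);
  }
}
```

The response itself would come from the OSC command endpoint, for example a `POST` to `/osc/commands/execute` (through the proxy) with the JSON body `{"name": "camera.getLivePreview"}` and the digest `Authorization` header, followed by `readMjpeg(response, (frame) => ...)`.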

For now, though, I will start the stream manually from the Device WebAPI web page and then manually set the A-Frame element's `src` to the generated MJPEG URL. That at least lets me verify that A-Frame works in Electron. From there I can figure out how to automate the process, or do my own decoding of the preview stream from the Ricoh API. If I can't do all the parsing in the browser, one option is to do the processing in the Electron app's main process and use Electron's interprocess communication (IPC) to send the video frames to the A-Frame window.
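If the frames do end up being decoded in Electron's main process, the A-Frame window only has to display the bytes it receives. Here is a minimal TypeScript sketch of that display side, assuming frames arrive as `Uint8Array` JPEGs (Electron serializes IPC arguments with the structured clone algorithm) and are shown through an `<img>` element that the A-Frame scene references as its texture source; `jpegToDataUrl`, `showFrames`, and the `"preview-frame"` channel are hypothetical. Whether A-Frame refreshes its texture every time the image's `src` changes still needs to be checked.

```typescript
/**
 * Turn one JPEG frame into a data: URL that an <img> element can display.
 * btoa is available in browsers, Electron renderers, and Node 16+.
 */
function jpegToDataUrl(jpeg: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < jpeg.length; i++) {
    binary += String.fromCharCode(jpeg[i]); // one char per byte, as btoa expects
  }
  return `data:image/jpeg;base64,${btoa(binary)}`;
}

/**
 * Point `target.src` at each incoming frame. `subscribe` is whatever delivers
 * frames; in an Electron renderer it could wrap ipcRenderer.on(...).
 * The { src: string } type matches an HTMLImageElement.
 */
function showFrames(
  subscribe: (onFrame: (jpeg: Uint8Array) => void) => void,
  target: { src: string },
): void {
  subscribe((jpeg) => {
    target.src = jpegToDataUrl(jpeg);
  });
}

// Possible Electron wiring (not executed here; "preview-frame" is a made-up channel name):
//   main process:  win.webContents.send("preview-frame", frame);
//   renderer:      showFrames((cb) => ipcRenderer.on("preview-frame", (_event, frame) => cb(frame)), img);
```

Passing `subscribe` in as a function keeps the display code independent of Electron, so it can be exercised with a fake frame source. At high frame rates, `URL.createObjectURL(new Blob([jpeg], { type: "image/jpeg" }))` avoids the base64 step, at the cost of calling `URL.revokeObjectURL` on the previous URL.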

Lots of work to go. My source code is available on GitHub.