Hi Craig! Long post incoming…

Are you using something like OBS to stream the THETA V to Glitch?

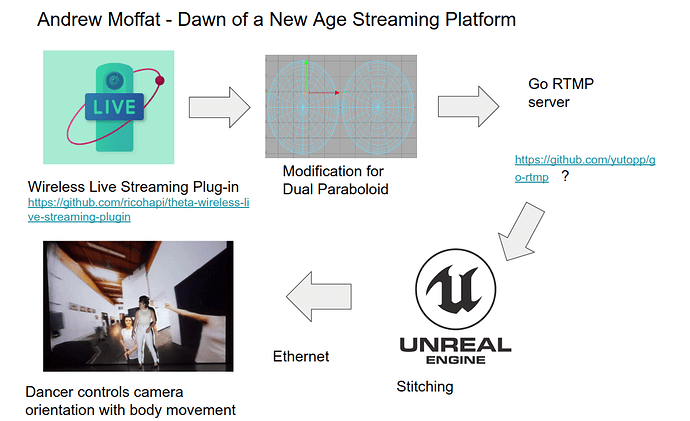

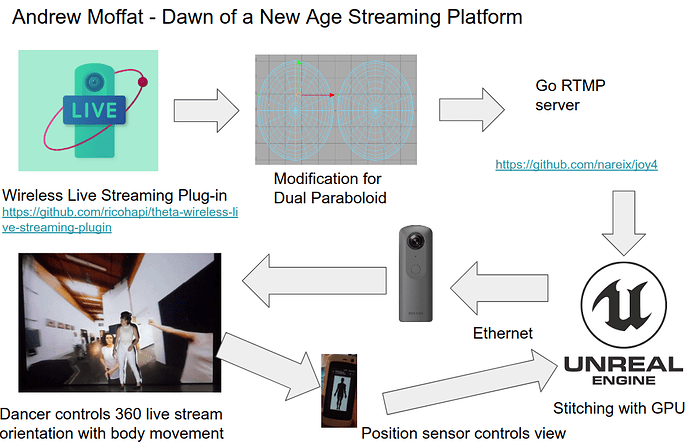

I used a custom setup. The basics are: modified Theta livestreaming app → Golang RTMP server → UE4 plugin

The modified plugin outputs raw dual paraboloid images (instead of stitched equirectangular images) because of temperature issues. I wrote a Go RTMP server that runs locally on a private network, and the camera streams to that RTMP address. A custom client plugin for UE4 connects to the RTMP server and feeds the frames into textures. The textures are then post-processed in a UE4 material to stitch the paraboloid halves back together. The stitching isn’t perfect (and not as good as the Theta’s internal stitching), as you can see in this image:

But doing the stitching later in the rendering engine substantially cuts down on the heat that the Theta produces, and makes it less likely to shut down after 5 minutes of streaming during a performance.
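For anyone curious what stitching in a material boils down to, here is a rough sketch of the per-pixel math, written in Python only for readability. It is not the actual UE4 material. It assumes an ideal dual paraboloid model, a hypothetical axis convention (+z is the centre of the front half, +y is up), and an unflipped back image; a real lens would need its own calibration, and the function names are made up for illustration.

```python
import math

def direction_from_equirect(u, v):
    """Map equirectangular coords (u, v in [0, 1]) to a unit direction vector."""
    lon = (u - 0.5) * 2.0 * math.pi   # -pi..pi, 0 looks down +z (assumed convention)
    lat = (0.5 - v) * math.pi         # +pi/2 at the top row, -pi/2 at the bottom row
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return x, y, z

def paraboloid_lookup(x, y, z):
    """Return (half, s, t): which half to sample and where, with s, t in [0, 1]."""
    if z >= 0.0:
        half, denom = "front", 1.0 + z
    else:
        half, denom = "back", 1.0 - z
    # Ideal paraboloid projection, remapped from [-1, 1] to [0, 1].
    s = 0.5 + 0.5 * x / denom
    t = 0.5 + 0.5 * y / denom
    return half, s, t

# The centre of the equirectangular output samples the centre of the front half.
print(paraboloid_lookup(*direction_from_equirect(0.5, 0.5)))
```

The visible seam comes from the pixels where `z` is close to zero, since that is where the lookup switches from one half to the other.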

You may have noticed in the video that I am using a long (obnoxiously long) ethernet cable for the streaming. The performance venue had many competing wifi signals, and streaming over wifi became impossible…frequent disconnects and slow reconnects. Craig, I used information from your post to help get that working, so thank you!

I was able to control the camera almost entirely over the network via automated curl requests, including starting the streaming plugin, starting streaming, and stopping streaming, but not stopping the plugin. Controlling the camera this way seems like a simple task, but this is where 90% of the effort on the UE4 plugin code went, because it needed to be bulletproof. Since performers can accidentally shut off the camera (which would be detrimental to the performance), I needed a finite state machine that knew how to navigate between the different states to put the camera back into a “desired” state (like streaming). So if the ethernet cable fell out, or they shut the plugin off, the system would heal itself to the correct state again.
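To make the self-healing idea concrete, here is a minimal sketch of a state machine that plans its way back to a desired state. It is not the actual plugin code (that lives in UE4). The state names, action names, and the `observe`/`execute` callbacks are all hypothetical; in a real setup they would wrap the network requests to the camera.

```python
from collections import deque

# Hypothetical states and the actions that move between them.
# There is deliberately no action that stops the plugin.
TRANSITIONS = {
    "offline":        {"wait_for_network": "idle"},
    "idle":           {"start_plugin": "plugin_running"},
    "plugin_running": {"start_stream": "streaming"},
    "streaming":      {"stop_stream": "plugin_running"},
}

def plan(current, desired):
    """Breadth-first search for the shortest list of actions from current to desired."""
    queue = deque([(current, [])])
    seen = {current}
    while queue:
        state, actions = queue.popleft()
        if state == desired:
            return actions
        for action, nxt in TRANSITIONS.get(state, {}).items():
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, actions + [action]))
    return None  # desired state cannot be reached from here

def heal(observe, execute, desired, max_steps=10):
    """Take one action at a time and re-observe, so surprises just trigger a new plan."""
    for _ in range(max_steps):
        state = observe()
        if state == desired:
            return True
        actions = plan(state, desired)
        if not actions:
            return False
        execute(actions[0])
    return observe() == desired
```

Re-planning after every single action is the important design choice in this sketch: if the cable falls out halfway through, the next observation reports a different state and the plan simply starts from there.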

Is the performer in the front reacting to the video only using visual cues from the screen?

It is mostly improvised, but some of it is choreographed.

What type of interaction is going on below?

Are you referring to her turning? With the turning, she is controlling the orientation of the live feed with the orientation of her body…you can see that when she turns, the view turns as well. You’ll notice that when she is facing the audience, the view from the camera is always towards the person holding the selfie stick. This is possible because the dancer is “inside” of a virtual sphere, and her rotation in the real world controls her rotation in the game engine.

How did you get the black and white “shadow” effect?

Are you referring to the silhouette around the performer? Since the projector is in front of the performer, the black shadow you see around her is from the projector itself. I am projecting white onto her outline so that it looks like she is “in front of” the projection. This allows us to do things like put the performer “in” water (the last section of the piece) and have it look somewhat convincing. Tracking the dancer in order to do this is part of a larger framework I’ve written (of which the Theta V client/server code is a part). I’m happy to share more information if you have specific questions about it!

Would love to learn how you put this together.

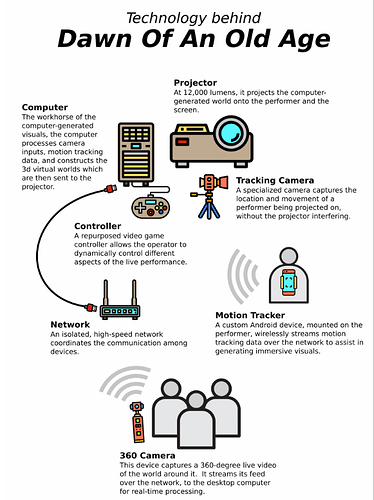

I am in the process of putting together more comprehensive documentation about how tech-artists can put together work like this. I am currently trying to gauge interest in the kind of framework I have built, so that I can share it with others. I will be happy to share more information about it with you (or here, or both!) if you think it is something that will be valuable to others. In the meantime, here is the PDF file for the program for our show, which has a very high-level overview of the hardware involved: