Kieran Farr started programming just for fun when he was a kid. And even though he never got a CS degree in college, he eventually went on to do a lot of work in his primary area of interest, video. “I have an addiction to online video streaming technologies,” he told me. His current passion is virtual and augmented reality using open source web-based tools.

While he doesn’t consider himself a great developer, his interests center on image processing and on connecting pieces together in a way that adds value. Case in point: the Ricoh Theta S camera, which he heard about from a friend who follows the latest in new technologies. Around the same time, Kieran saw some 360 panning videos on Facebook and decided to get the camera and experiment with making his own 360 videos.

To begin, he needed to learn how the camera worked, and about 360 optics and geometry. Then through online searches he discovered that a number of people had already developed quite a lot of software to leverage the Ricoh API for the Theta S camera. And since it’s a web services API that connects through WiFi, it seemed it would be easy to connect to the device and make it do all the things that Ricoh’s proprietary app could do. “What was interesting was seeing all the different ways people had used the API,” he explained.
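To make the idea of a web services API over WiFi concrete, here is a minimal Python sketch of what talking to the camera might look like. It is not Kieran’s code. It assumes the camera exposes Open Spherical Camera style endpoints, that it is reachable at `192.168.1.1` when your computer joins its WiFi network, and that `camera.takePicture` is an available command; the session ID shown in the usage note is a made-up placeholder. Check Ricoh’s API documentation for your firmware version before relying on any of these.

```python
import json
import urllib.request

# Assumption: address of the camera when it acts as a WiFi access point.
CAMERA_URL = "http://192.168.1.1"


def build_command_request(name, parameters=None, base_url=CAMERA_URL):
    """Build (but do not send) an HTTP POST that asks the camera to run a command."""
    body = json.dumps({"name": name, "parameters": parameters or {}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/osc/commands/execute",  # assumed command endpoint
        data=body,
        headers={"Content-Type": "application/json;charset=utf-8"},
        method="POST",
    )


def send(request, timeout=5.0):
    """Send a prepared request and decode the camera's JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With your computer connected to the camera’s WiFi network, something like `send(build_command_request("camera.takePicture", {"sessionId": "SID_0001"}))` would ask it to take a photo. Older API versions may require starting a session first, which is why the example passes a session ID.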

Now Kieran works for the video streaming company Brightcove, so his immediate interest was live streaming in 360. And while the technology isn’t all there yet, and it isn’t a perfect experience, the Theta S camera does bring it closer.

So he thought about what he could do to make this happen. First step was to play with some of the code people had published on the web from various hackathons. It seemed that he could take some of the preview imagery available through the API over WiFi and paste it onto the inside of a sphere so you could look around in real time. Problem is that the quality over WiFi is very poor. So a next step might be 720p through USB. But would it be possible to make it into a live stream?

Kieran found a number of projects online, in particular a software kit built on MacOS Quartz Composer by an Australian developer, Paul Bourke. Bourke had developed software to take a two-dimensional image and display it over the surface of a planetarium dome. Kieran used Bourke’s approach, but started instead with the two fisheye images coming from the Theta S camera.
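The geometry behind this kind of remapping can be sketched in a few lines of Python. This is an illustration only, not Kieran’s Quartz Composer code or Bourke’s software. It assumes an ideal equidistant fisheye lens (distance from the image center is proportional to the angle from the lens axis), two lenses pointing in exactly opposite directions, and a field of view of 190 degrees per lens; that number and the function name `equirect_to_fisheye` are placeholders chosen for the example. For each point in the flat panoramic output, the function says which fisheye image to sample and where.

```python
import math


def equirect_to_fisheye(u, v, fov_deg=190.0):
    """Map a point in the panoramic output to a point in one of two fisheye images.

    u, v are normalized output coordinates in [0, 1] (u across, v down).
    Returns (lens, fx, fy): lens is 0 (front) or 1 (back), and fx, fy are
    fisheye coordinates where (0, 0) is the image center and a distance of 1
    from the center is the edge of the lens's field of view.
    """
    lon = (u - 0.5) * 2.0 * math.pi   # longitude, -pi .. pi
    lat = (0.5 - v) * math.pi         # latitude, pi/2 (top) .. -pi/2 (bottom)

    # Direction on the unit sphere; the front lens looks along +z.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)

    lens = 0
    if z < 0:
        # Point is behind the front lens: use the back lens, mirrored.
        lens, x, z = 1, -x, -z

    theta = math.atan2(math.hypot(x, y), z)       # angle from the lens axis
    r = theta / (math.radians(fov_deg) / 2.0)     # equidistant fisheye model
    phi = math.atan2(y, x)                        # direction around the axis
    return lens, r * math.cos(phi), r * math.sin(phi)
```

Running this for every output pixel and copying the color from the matching fisheye location gives a stitched panorama. Because each lens sees slightly more than a half sphere, the two images overlap near the seam; a real implementation would blend that overlap and correct for lens imperfections, which is where most of the difficulty lies.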

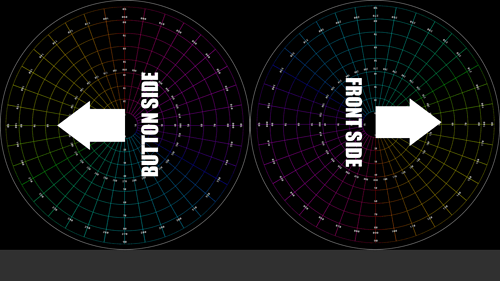

Input:

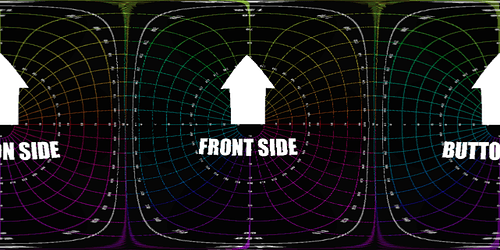

Output:

Example:

The result stitched the images together in a reasonable way. But making it perfect seemed beyond reach. So he put his code up on GitHub and promoted it so that a lot of people saw it, some with more math and programming chops than he had. They modified the code, added reference images and other tweaks, and made it usable. Kieran calls this “open source Nirvana”.

Kieran’s recommendation to other developers is to start small and simple, and add simple features one step at a time, rather than trying to tackle the whole project all at once. He still wants to be able to hook up a 360 camera to his computer and have it render live images that you can view through a web browser or on mobile devices. But this will have to wait for open source standards for 360 video transmission solutions.

You can find Kieran’s GitHub project, along with references to additional resources, at http://github.com/kfarr.