The RICOH THETA API v2.1 SDK for iOS provides a sample app and source code that simplify development. It is a key tool for developers building iOS apps that take advantage of the unique hardware strengths of the RICOH THETA line of 360-degree cameras.

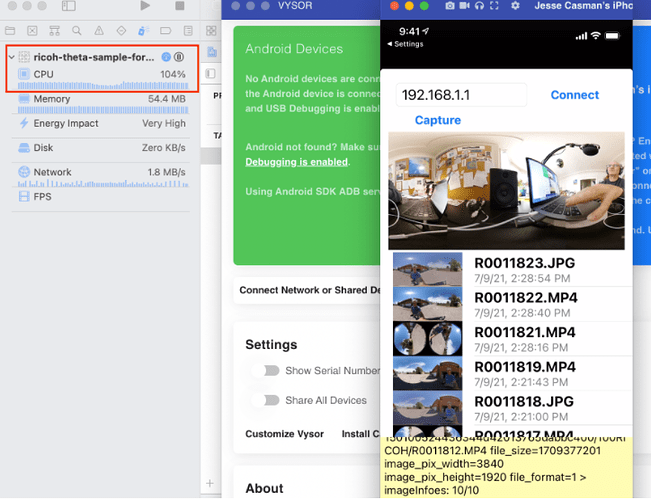

NOTE: In my tests, CPU usage was consistently high, over 100%. If you are evaluating the iOS SDK, be aware that it may not work for you. Ideally, this warning saves you time. It would help the community if you reported your results here. Does the SDK work for you? Is CPU usage high for you?

My environment

- MacBook Air (M1, 2020)

- macOS Big Sur 11.5.2

- iPhone 12

- iOS 14.7.1

- USB-C to Lightning cable

- RICOH THETA Z1

- Firmware 2.00.1

There are two main items that you want to pay attention to when you first get the SDK:

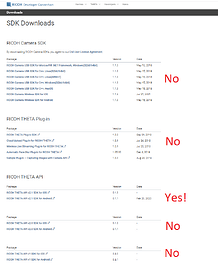

Download the right SDK

There is also a RICOH Camera SDK. This is NOT what you want. The downloads page has multiple SDKs for different RICOH cameras, so make sure the one you pick clearly says RICOH THETA. You want the RICOH THETA API v2.1 SDK for iOS.

This link has a URL anchor that will point you to the correct part of the downloads page: https://api.ricoh/downloads/#ricoh-theta-api

Download the right API version

There are three main API versions. They are based on Google’s open source Open Spherical Camera API with some additions specific to THETA.

One main difference between 2.0 and 2.1 is a streamlined way of checking the state of the camera before sending a command. 2.0 requires more steps.

You want the newest version, 2.1.
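To make the difference concrete, here is a minimal Swift sketch of a v2.1 command request. It is not taken from the SDK: `makeCommandRequest` is a hypothetical helper, and the address `192.168.1.1` assumes the camera is in its default access point mode. As I understand the two versions, v2.0 first needs a `camera.startSession` call and a `sessionId` in later commands, while v2.1 lets you send the command directly.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // only needed on Linux; iOS and macOS get URLRequest from Foundation
#endif

// Assumed address of a THETA in access point mode (it differs in client mode).
let cameraBaseURL = URL(string: "http://192.168.1.1")!

/// Builds a POST request for /osc/commands/execute.
/// Hypothetical helper for illustration, not part of the RICOH SDK.
func makeCommandRequest(name: String, parameters: [String: Any] = [:]) throws -> URLRequest {
    var request = URLRequest(url: cameraBaseURL.appendingPathComponent("osc/commands/execute"))
    request.httpMethod = "POST"
    request.setValue("application/json;charset=utf-8", forHTTPHeaderField: "Content-Type")

    // v2.1 style: just the command name, plus parameters when the command needs them.
    var body: [String: Any] = ["name": name]
    if !parameters.isEmpty { body["parameters"] = parameters }
    request.httpBody = try JSONSerialization.data(withJSONObject: body)
    return request
}

let takePictureRequest = try makeCommandRequest(name: "camera.takePicture")
print(takePictureRequest.url?.absoluteString ?? "no URL")
```

In an app you would hand the request to `URLSession` and read the JSON reply. The sketch only builds the request, so you can run it without a camera attached.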

Main Components Needed

- Xcode (30GB download)

- An Apple Developer account (free version available)

- RICOH THETA API v2.1 SDK for iOS

  - Includes both source and a project: ricoh-theta-sample-for-iosv2.xcodeproj

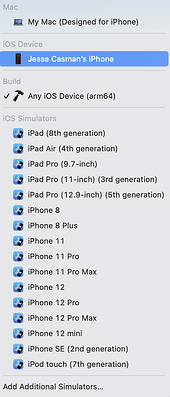

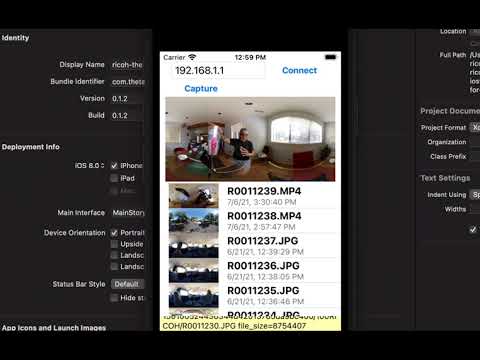

Xcode includes iOS Simulators, but you may want to test on a physical device, preferably your target platform. In this screenshot, I am about to build ricoh-theta-sample-for-iosv2.xcodeproj and run it on my iPhone 12.

Build the project.

In my case, I had to go into Signing & Capabilities to set the Team and Bundle Identifier.

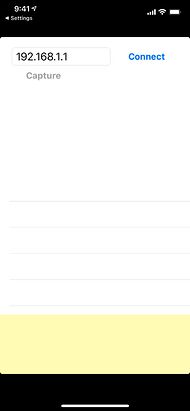

It’s running on my iPhone!

I press Connect. Nothing happens.

Duh! I need to connect my iPhone to the THETA Z1 over Wi-Fi first. On the THETA, the Wi-Fi icon blinks when nothing is connected and stays solid once a connection is made.

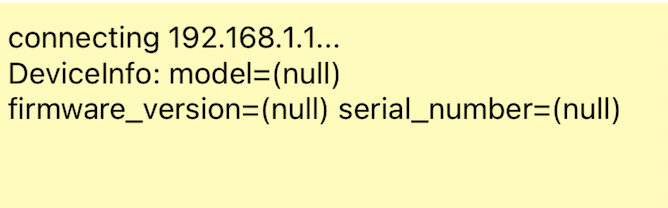

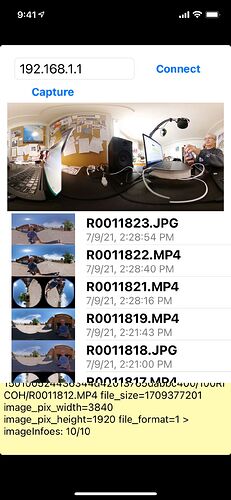
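If Connect does nothing, a quick check is whether the camera answers at all. The sketch below decodes the reply from `GET /osc/info`, the Open Spherical Camera endpoint that reports the model and firmware. The `CameraInfo` type and `decodeCameraInfo` function are names I made up for illustration, and the JSON is a hand-written sample, not a capture from my Z1.

```swift
import Foundation

/// Subset of the fields returned by GET /osc/info.
/// Keys that are not listed here are simply ignored by the decoder.
struct CameraInfo: Decodable {
    let manufacturer: String
    let model: String
    let firmwareVersion: String
    let apiLevel: [Int]?   // optional in case a camera does not report it
}

/// Hypothetical helper: turn the raw reply into a CameraInfo value.
func decodeCameraInfo(from data: Data) throws -> CameraInfo {
    try JSONDecoder().decode(CameraInfo.self, from: data)
}

// Hand-written sample payload for illustration only.
let sampleInfo = Data("""
{"manufacturer":"RICOH","model":"RICOH THETA Z1","firmwareVersion":"2.00.1","apiLevel":[2]}
""".utf8)

let info = try decodeCameraInfo(from: sampleInfo)
print("\(info.model), firmware \(info.firmwareVersion)")
```

If the request to `/osc/info` times out, the phone is most likely still on another Wi-Fi network.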

I try again. It’s connected and running. I can see a live preview. I can see a list of 360 degree images on my iPhone. Cool!

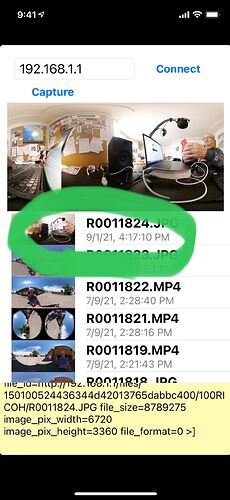
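At the Web API level, a file list like this can be requested with the `camera.listFiles` command. I have not checked how the sample app does it internally, so treat the following as a sketch of the request body only. `ListFilesCommand` is a hypothetical type, and the parameter values are example choices.

```swift
import Foundation

/// JSON body for a camera.listFiles command (API v2.1 parameter names).
struct ListFilesCommand: Encodable {
    struct Parameters: Encodable {
        let fileType: String    // "all", "image", or "video"
        let entryCount: Int     // how many entries to return
        let maxThumbSize: Int   // 0 asks for no thumbnail data
    }
    let name = "camera.listFiles"
    let parameters: Parameters
}

let encoder = JSONEncoder()
encoder.outputFormatting = [.sortedKeys]  // stable key order, easier to read in logs

let listCommand = ListFilesCommand(
    parameters: .init(fileType: "image", entryCount: 10, maxThumbSize: 0)
)
let listBody = try encoder.encode(listCommand)
print(String(decoding: listBody, as: UTF8.self))
```

The encoded body would be posted to `/osc/commands/execute` on the camera.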

I take a test capture. I press Capture. I hear the shutter noise from the THETA. The new picture is listed.

I open the new picture. It’s me giving a big thumbs up.
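Behind the Capture button, a `camera.takePicture` command does not finish immediately. In API v2.1 the reply carries a `state`, and while the state is `inProgress` you poll `/osc/commands/status` with the returned `id` until it becomes `done`. The sketch below shows only that decision step. `CommandResponse`, `CaptureStep`, and `nextStep` are hypothetical names, and the sample replies are hand-written, including the placeholder file URL.

```swift
import Foundation

/// Fields used here from a reply of /osc/commands/execute or /osc/commands/status.
struct CommandResponse: Decodable {
    struct Results: Decodable { let fileUrl: String? }
    let name: String
    let state: String       // "inProgress", "done", or "error"
    let id: String?         // present while the command is still running
    let results: Results?   // present once the command is done
}

enum CaptureStep: Equatable {
    case poll(id: String)           // ask /osc/commands/status again with this id
    case finished(fileUrl: String)  // the new image can be fetched from this URL
    case failed
}

/// Hypothetical helper: decide what to do after a reply arrives.
func nextStep(for data: Data) throws -> CaptureStep {
    let response = try JSONDecoder().decode(CommandResponse.self, from: data)
    switch (response.state, response.id, response.results?.fileUrl) {
    case ("inProgress", let id?, _): return .poll(id: id)
    case ("done", _, let url?): return .finished(fileUrl: url)
    default: return .failed
    }
}

// Hand-written sample replies for illustration (not captured from a camera).
let runningReply = Data(#"{"name":"camera.takePicture","state":"inProgress","id":"1"}"#.utf8)
let doneReply = Data(#"{"name":"camera.takePicture","state":"done","results":{"fileUrl":"http://192.168.1.1/files/example.JPG"}}"#.utf8)

print(try nextStep(for: runningReply))
print(try nextStep(for: doneReply))
```

Keeping the decision in a pure function like this makes it easy to test without a camera; the networking and the delay between polls live elsewhere in the app.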

Issue with CPU Usage

The SDK may not be usable in your situation. In my limited testing, the CPU usage was over 100%.

Next Steps

The full API documentation is here: https://api.ricoh/docs/theta-web-api-v2.1/

theta360.guide has many useful docs, how-tos, videos, and more. Just two examples: "Capture Video using theta iOS SDK" and "Auto-connect to the camera using Ricoh Theta iOS SDK".

If you are interested in contributing your experience using the iOS SDK, we need the help! The community can benefit from your knowledge. Please reply to this post.