How can we perform live streaming over USB using Python code?

hi!

I really love live streaming and am addicted to it, so I just wanted to be the first one to reply! Anyway, you will get details for sure. I’m more focused on live streaming wirelessly through WiFi routers or cellular networks…

What do you mean by “over USB”? To live stream as a webcam, there are Windows/Linux drivers, and in this scenario encoding happens on the other side, on a desktop PC or a pocket-sized computer like a Raspberry Pi, NVIDIA Jetson Nano, etc.

Which protocol would you like to use for the stream? RTMP, RTSP, or something else?

@Susantini Welcome to the theta360.guide community! There are quite a few projects and companies that do live streaming over USB. The FOX Sewer Rover co-founder @Hugues posted about using Python as a relay for the live stream feed. I think that was an early prototype and they have gone through many generations of development since then. But it may be a useful reference:

Fox Sewer Rover company here:

Register here and get detailed info.

RICOH THETA360.guide Independent developer community

Basic example in Python using OpenCV for processing.

    import cv2

    cap = cv2.VideoCapture(0)

    # Check that the webcam opened correctly
    if not cap.isOpened():
        raise IOError("Cannot open webcam")

    while True:
        ret, frame = cap.read()
        if not ret:
            # Stop if the camera did not deliver a frame
            break

        # Scale to a quarter of the original size for display
        frame = cv2.resize(frame, None, fx=0.25, fy=0.25, interpolation=cv2.INTER_AREA)
        cv2.imshow('Input', frame)

        # Exit on Esc (key code 27)
        c = cv2.waitKey(1)
        if c == 27:
            break

    cap.release()
    cv2.destroyAllWindows()
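If the goal is to relay these frames to a streaming server (RTMP was one of the protocols raised earlier in the thread), one common approach is to pipe the raw frames into an `ffmpeg` process. The following is only a minimal sketch, not the method used by any project mentioned here. It assumes `ffmpeg` with `libx264` is installed and on the PATH; the function names `build_ffmpeg_rtmp_command` and `relay` and the RTMP URL in the usage comment are hypothetical.

```python
import subprocess


def build_ffmpeg_rtmp_command(width, height, fps, rtmp_url):
    """Return an ffmpeg argument list that reads raw BGR frames from stdin
    and publishes them as H.264 over RTMP (hypothetical helper)."""
    return [
        "ffmpeg",
        "-f", "rawvideo",            # input is unencoded frames
        "-pix_fmt", "bgr24",         # OpenCV frames are 8-bit BGR
        "-s", f"{width}x{height}",   # must match the frames written to stdin
        "-r", str(fps),
        "-i", "-",                   # read input from stdin
        "-c:v", "libx264",
        "-preset", "ultrafast",
        "-tune", "zerolatency",
        "-pix_fmt", "yuv420p",
        "-f", "flv",                 # RTMP carries an FLV container
        rtmp_url,
    ]


def relay(device_number, rtmp_url, fps=30):
    """Read frames from a camera and write them to ffmpeg (hypothetical helper)."""
    import cv2  # imported here so the command builder works without OpenCV

    cap = cv2.VideoCapture(device_number)
    if not cap.isOpened():
        raise IOError("Cannot open camera")
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    ffmpeg = subprocess.Popen(
        build_ffmpeg_rtmp_command(width, height, fps, rtmp_url),
        stdin=subprocess.PIPE,
    )
    try:
        while True:
            ret, frame = cap.read()
            if not ret:
                break
            ffmpeg.stdin.write(frame.tobytes())
    except BrokenPipeError:
        # ffmpeg exited, for example because the server refused the stream
        pass
    finally:
        cap.release()
        try:
            ffmpeg.stdin.close()
        except BrokenPipeError:
            pass
        ffmpeg.wait()


# Example call (placeholder URL, replace with your own server):
# relay(0, "rtmp://localhost/live/theta")
```

Keeping the command construction separate from the capture loop makes it easy to print and inspect the `ffmpeg` arguments before starting a stream.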

More complex example in Python.

    import argparse

    import cv2
    import numpy as np


    def parse_cli_args():
        parser = argparse.ArgumentParser()
        parser.add_argument("--video_device", dest="video_device",
                            help="Video device # of USB webcam (/dev/video?) [0]",
                            default=0, type=int)
        parser.add_argument("--gsttheta", dest="gsttheta",
                            help="nvdec, auto, none", default="none")
        arguments = parser.parse_args()
        return arguments


    # Open an external usb camera /dev/videoX
    def open_theta_device(device_number):
        print(f"attempting to use v4l2loopback on /dev/video{device_number}")
        return cv2.VideoCapture(device_number)


    # https://github.com/nickel110/gstthetauvc
    # example uses hardware acceleration
    def open_gst_thetauvc_nvdec():
        print("attempting hardware acceleration for NVIDIA GPU with gstthetauvc")
        return cv2.VideoCapture(
            "thetauvcsrc "
            "! queue "
            "! h264parse "
            "! nvdec "
            "! gldownload "
            "! queue "
            "! videoconvert n-threads=0 "
            "! video/x-raw,format=BGR "
            "! queue "
            "! appsink"
        )


    # without hardware acceleration
    def open_gst_thetauvc_auto():
        return cv2.VideoCapture(
            "thetauvcsrc "
            "! decodebin "
            "! autovideoconvert "
            "! video/x-raw,format=BGRx "
            "! queue ! videoconvert "
            "! video/x-raw,format=BGR ! queue ! appsink"
        )

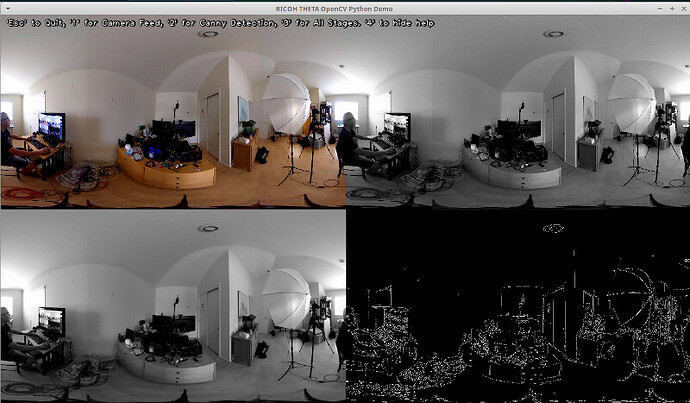

    def read_cam(video_capture):
        if video_capture.isOpened():
            windowName = "main_canny"
            cv2.namedWindow(windowName, cv2.WINDOW_NORMAL)
            cv2.resizeWindow(windowName, 1280, 720)
            cv2.moveWindow(windowName, 0, 0)
            cv2.setWindowTitle(windowName, "RICOH THETA OpenCV Python Demo")
            showWindow = 3  # Show all stages
            showHelp = True
            font = cv2.FONT_HERSHEY_PLAIN
            helpText = "'Esc' to Quit, '1' for Camera Feed, '2' for Canny Detection, '3' for All Stages. '4' to hide help"
            edgeThreshold = 40
            showFullScreen = False
            while True:
                # Check to see if the user closed the window
                if cv2.getWindowProperty(windowName, 0) < 0:
                    # This will fail if the user closed the window; Nasties get printed to the console
                    break
                ret_val, frame = video_capture.read()
                if not ret_val:
                    # Stop if the camera did not deliver a frame
                    break
                # Grayscale -> Gaussian blur -> Canny edge detection
                gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
                blur = cv2.GaussianBlur(gray, (7, 7), 1.5)
                edges = cv2.Canny(blur, 0, edgeThreshold)
                if showWindow == 3:  # Need to show the 4 stages
                    # Composite the 2x2 window
                    # Feed from the camera is BGR, the others gray
                    # To composite, convert gray images to color.
                    # All images must be of the same type to display in a window
                    frameRs = cv2.resize(frame, (640, 360))
                    grayRs = cv2.resize(gray, (640, 360))
                    vidBuf = np.concatenate((frameRs, cv2.cvtColor(grayRs, cv2.COLOR_GRAY2BGR)), axis=1)
                    blurRs = cv2.resize(blur, (640, 360))
                    edgesRs = cv2.resize(edges, (640, 360))
                    vidBuf1 = np.concatenate((cv2.cvtColor(blurRs, cv2.COLOR_GRAY2BGR),
                                              cv2.cvtColor(edgesRs, cv2.COLOR_GRAY2BGR)), axis=1)
                    vidBuf = np.concatenate((vidBuf, vidBuf1), axis=0)

                if showWindow == 1:  # Show Camera Frame
                    displayBuf = frame
                elif showWindow == 2:  # Show Canny Edge Detection
                    displayBuf = edges
                elif showWindow == 3:  # Show All Stages
                    displayBuf = vidBuf

                if showHelp:
                    # Draw the text twice (dark, then light) to get an outline effect
                    cv2.putText(displayBuf, helpText, (11, 20), font, 1.0, (32, 32, 32), 4, cv2.LINE_AA)
                    cv2.putText(displayBuf, helpText, (10, 20), font, 1.0, (240, 240, 240), 1, cv2.LINE_AA)
                cv2.imshow(windowName, displayBuf)
                key = cv2.waitKey(10)
                if key == 27:  # Check for ESC key
                    cv2.destroyAllWindows()
                    break
                elif key == 49:  # 1 key, show frame
                    cv2.setWindowTitle(windowName, "Camera Feed")
                    showWindow = 1
                elif key == 50:  # 2 key, show Canny
                    cv2.setWindowTitle(windowName, "Canny Edge Detection")
                    showWindow = 2
                elif key == 51:  # 3 key, show Stages
                    cv2.setWindowTitle(windowName, "Camera, Gray scale, Gaussian Blur, Canny Edge Detection")
                    showWindow = 3
                elif key == 52:  # 4 key, toggle help
                    showHelp = not showHelp
                elif key == 44:  # , lower canny edge threshold
                    edgeThreshold = max(0, edgeThreshold - 1)
                    print('Canny Edge Threshold Maximum: ', edgeThreshold)
                elif key == 46:  # . raise canny edge threshold
                    edgeThreshold = edgeThreshold + 1
                    print('Canny Edge Threshold Maximum: ', edgeThreshold)
                elif key == 74:  # Toggle fullscreen; This is the F3 key on this particular keyboard
                    # Toggle full screen mode
                    if not showFullScreen:
                        cv2.setWindowProperty(windowName, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)
                    else:
                        cv2.setWindowProperty(windowName, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_NORMAL)
                    showFullScreen = not showFullScreen
        else:
            print("camera open failed")


    if __name__ == '__main__':
        arguments = parse_cli_args()
        print("Called with args:")
        print(arguments)
        print("OpenCV version: {}".format(cv2.__version__))
        print("Device Number:", arguments.video_device)
        if arguments.gsttheta == 'nvdec':
            video_capture = open_gst_thetauvc_nvdec()
        elif arguments.gsttheta == 'auto':
            video_capture = open_gst_thetauvc_auto()
        else:
            video_capture = open_theta_device(arguments.video_device)
        read_cam(video_capture)
        video_capture.release()
        cv2.destroyAllWindows()
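The `nvdec` and `auto` options pass a GStreamer pipeline string to `cv2.VideoCapture`, which only works when OpenCV itself was built with GStreamer support. If those options report "camera open failed", it may be worth checking the build first. Here is a small sketch of such a check; the helper name `gstreamer_enabled` is hypothetical, and it assumes the text returned by `cv2.getBuildInformation()` contains a line of the form `GStreamer: YES (version)` or `GStreamer: NO`.

```python
import re


def gstreamer_enabled(build_info):
    """Return True if an OpenCV build-information dump reports GStreamer: YES.

    Hypothetical helper; assumes a line like 'GStreamer:   YES (1.16.2)'.
    """
    match = re.search(r"^\s*GStreamer:\s*(\S+)", build_info, re.MULTILINE)
    return bool(match) and match.group(1).upper() == "YES"


if __name__ == "__main__":
    try:
        import cv2
    except ImportError:
        print("OpenCV is not installed")
    else:
        print("GStreamer enabled:", gstreamer_enabled(cv2.getBuildInformation()))
```

If this prints `False`, the default `--gsttheta none` path, which opens a `/dev/video` device number directly, does not depend on the GStreamer backend.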

I suggest running Python on Linux; both x86 and ARM work. However, because of GPU processing support, most people on ARM use NVIDIA Jetson.

This is the output of the Python program.