I’m interested in writing software (plugins) that runs onboard the camera, performs some image processing in real time, and logs some statistics that can later be retrieved via the web or USB interface.

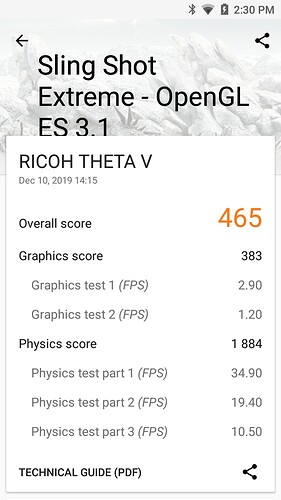

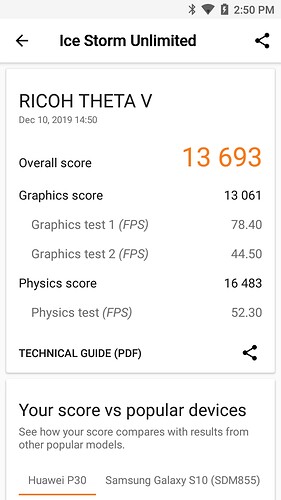

Depending on how much processing power is available, I’d ideally like to do all the processing onboard the Theta V, but I would likely end up offloading some of it to a Raspberry Pi and/or an Android device.

What I’d like to do:

- Capture a time-lapse of the entire sky (1 frame every 10 to 30 seconds)

- Track local markers (i.e. some orange dots … maybe at 30 Hz)

- Compute depth maps (if processor can do it)

- Compute motion flow vectors

- Assemble small low-res video clips of objects in the scene

- Calculate distance to objects in scene using GPS and sensor data

- lots more stuff

The tricky bit is that the camera will always be in motion and oriented off-axis, so one of my initial challenges is to use the orientation data to create normalized (level, north-facing) spherical images so that features can be correlated across images.
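To make "normalized" concrete, here is a rough sketch of the mapping I have in mind, written in Python only for readability. For each pixel of the level, north-facing output image, it finds which pixel of the tilted source image to sample. Everything about the conventions is my own assumption and not something taken from the Theta documentation: an equirectangular layout, a body frame with x forward, y right, z down, a world frame of north, east, down, and yaw/pitch/roll angles in radians applied in Z-Y-X order. The function names are made up for illustration.

```python
import math

def rotation_body_to_ned(yaw, pitch, roll):
    """Body-to-world (north, east, down) rotation matrix, Z-Y-X Euler order."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def pixel_to_direction(u, v, width, height):
    """Equirectangular pixel -> unit vector (x forward/north, y right/east, z down)."""
    az = (u + 0.5) / width * 2.0 * math.pi - math.pi   # 0 at image centre
    el = math.pi / 2.0 - (v + 0.5) / height * math.pi  # +pi/2 at top row
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), -math.sin(el))

def direction_to_pixel(d, width, height):
    """Unit vector -> (fractional) equirectangular pixel coordinates."""
    az = math.atan2(d[1], d[0])
    el = math.asin(max(-1.0, min(1.0, -d[2])))
    u = (az + math.pi) / (2.0 * math.pi) * width - 0.5
    v = (math.pi / 2.0 - el) / math.pi * height - 0.5
    return (u, v)

def source_pixel_for(u, v, width, height, yaw, pitch, roll):
    """Where to sample the tilted source image for output pixel (u, v)."""
    d_world = pixel_to_direction(u, v, width, height)
    r = rotation_body_to_ned(yaw, pitch, roll)
    # World -> body uses the transpose (inverse) of the body-to-world matrix.
    d_body = tuple(sum(r[j][i] * d_world[j] for j in range(3)) for i in range(3))
    return direction_to_pixel(d_body, width, height)
```

With the camera heading due east (yaw = π/2), the output pixel that looks north samples from a quarter of the way across the source image, i.e. the camera's left side. In a shader the same lookup would run once per fragment, which is roughly what my messy Insta360 version did.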

The camera will be running on an aircraft. To get an idea of the sort of environment it’s running in, check out this video …

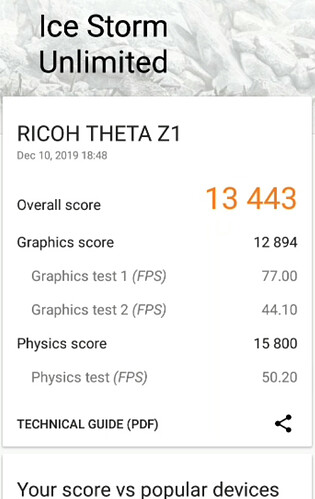

I’m sure the camera will struggle with some of the computationally expensive tasks, but I’m hoping I can get most of it working on the camera without having to resort to a second computer.

I started doing development using an Insta360 and doing all the processing on an Android tablet but the idea of having a self-contained camera like the Theta V that does it all onboard is very appealing.

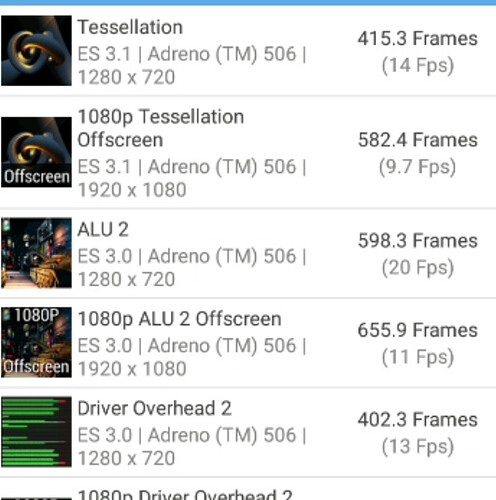

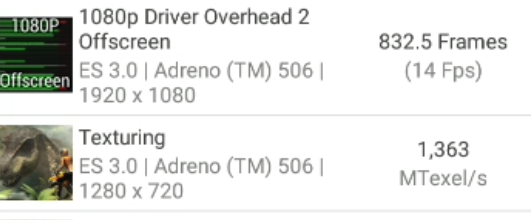

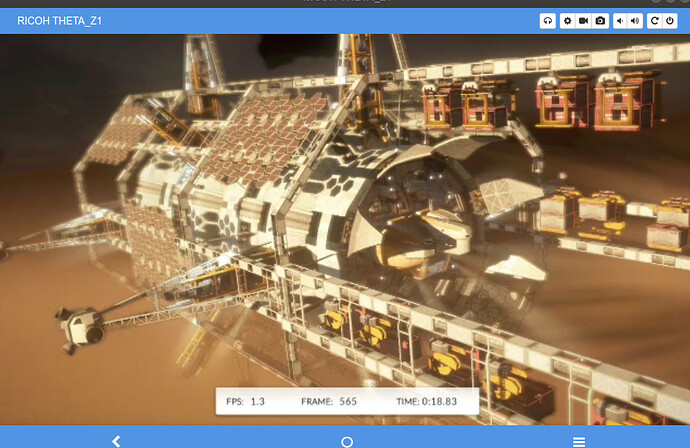

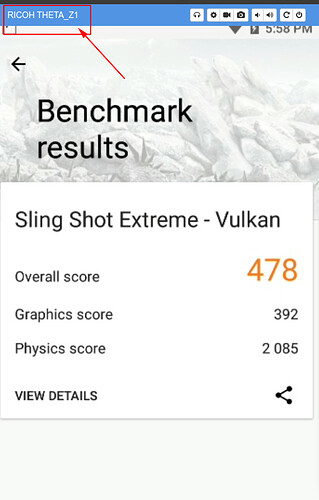

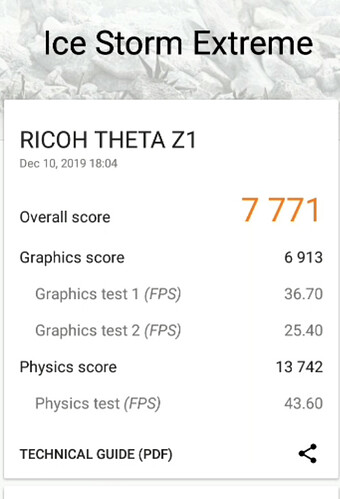

If you have any suggestions on how to tackle this, I’d be very interested: which camera resolution, which libraries, and whether FastCV, OpenCV, or OpenGL shaders are likely to give the best overall performance. I’ve skimmed most of the posts here and I didn’t spot much info about performance benchmarks.

It would be good to know how much time per frame is needed for capture at various camera settings, and any tricks there are for speeding things up. I know this is probably a stretch, but I’d also like some estimates of the time needed to apply a simple GL shader, FastCV, or OpenCV operation at various image sizes. If anyone has some ballpark figures, it would save a ton of time and make design decisions easier.
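In case it helps anyone share comparable numbers, this is the kind of measurement I mean: the median wall-clock time per call after a few warm-up runs, compared against the frame budget (about 33 ms at 30 Hz). It is a desktop Python sketch only. `dummy_operation` is a made-up stand-in for a real shader or CV call, and on the camera I assume the same pattern would be written in Java or Kotlin inside the plugin.

```python
import statistics
import time

def time_per_call_ms(operation, repeats=20, warmup=3):
    """Median wall-clock time of operation() in milliseconds."""
    for _ in range(warmup):          # let caches and JITs settle first
        operation()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)

def fits_frame_budget(ms_per_call, rate_hz):
    """True if one call fits inside a single frame period at rate_hz."""
    return ms_per_call <= 1000.0 / rate_hz

def dummy_operation(width=160, height=80):
    """Hypothetical stand-in workload: sum a synthetic grayscale frame."""
    frame = [[(x * y) & 0xFF for x in range(width)] for y in range(height)]
    return sum(sum(row) for row in frame)

if __name__ == "__main__":
    ms = time_per_call_ms(dummy_operation)
    print(f"{ms:.3f} ms per call, fits 30 Hz budget: {fits_frame_budget(ms, 30)}")
```

Reporting the median rather than the mean keeps one slow outlier (garbage collection, thermal throttling) from skewing the figure.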

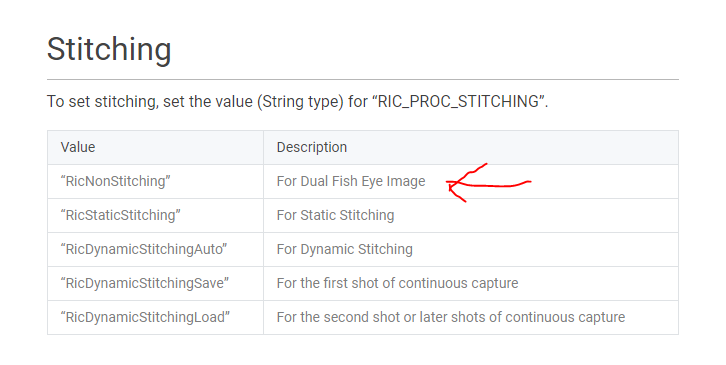

I have seen a lot of very interesting posts about running TensorFlow or applying CV operations, but they often didn’t mention how quickly these could be performed. I also didn’t notice any posts about optimization. (I’m leaning toward using GL shaders for everything … I’m not sure how much overhead the CV libraries add.) Can camera features be disabled to speed things up? For example, I saw posts about saving dual-fisheye vs equirectangular (stitched) images … is dual-fisheye faster?

I have a ton of other questions, but for now, if anyone has suggestions on how to produce normalized (level, north-facing) dual-fisheye images in a way that could run on the camera, that would be a great help. In my earlier Insta360 app I did it with GL shaders, but it was messy. Hopefully someone has come across a clever approach to this for moving time-lapse videos.
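For reference, the dual-fisheye half of the problem, as I currently picture it, is a lookup from a viewing direction to a pixel in one of the two lens circles. The sketch below assumes an ideal equidistant fisheye model (radius proportional to the angle off the lens axis), a 190° field of view per lens, and a side-by-side layout with the front lens circle on the left. All of those are placeholder assumptions on my part. The real Theta V lens model, circle positions, and lens rotation would need calibration, and the function name is made up.

```python
import math

def direction_to_dual_fisheye(d, width, height, fov_deg=190.0):
    """Map a body-frame direction (x forward, y right, z down) to a pixel.

    Returns (lens, u, v). Assumes an ideal equidistant projection and a
    side-by-side layout: front circle centred at (width/4, height/2),
    back circle at (3*width/4, height/2). These are illustrative only.
    """
    x, y, z = d
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm

    front = x >= 0.0
    along_axis = x if front else -x
    theta = math.acos(max(-1.0, min(1.0, along_axis)))  # angle off lens axis

    circle_radius = min(width / 4.0, height / 2.0)
    r = circle_radius * theta / math.radians(fov_deg / 2.0)  # equidistant: r ~ theta

    # Seen through the back lens, the body's right-hand side appears mirrored.
    right = y if front else -y
    phi = math.atan2(z, right)  # image v grows downward, like body z

    cx = width / 4.0 if front else 3.0 * width / 4.0
    cy = height / 2.0
    return ("front" if front else "back", cx + r * math.cos(phi), cy + r * math.sin(phi))
```

Looking straight ahead, `direction_to_dual_fisheye((1, 0, 0), 2000, 1000)` lands on the centre of the left circle at (500, 500). Rotating the direction by the camera orientation before this lookup would give the level, north-facing result, and that per-pixel chain is what I would like to find a cleaner way of doing.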

Thanks in advance.