Do you have a THETA V or a THETA Z1?

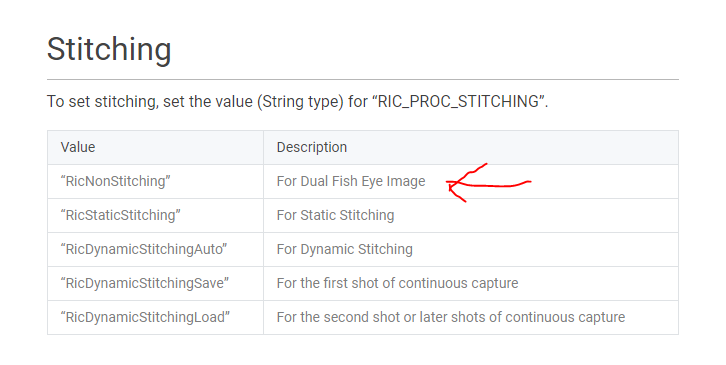

The stitching internal to the camera is computationally expensive. You can disable the stitching with either the WebAPI or the internal CameraAPI running on the internal Android OS. Although I don’t have benchmarks, I suspect that the fastest way will be to use the CameraAPI.

Using the WebAPI also costs performance when you switch back to the Android OS, which you’ll need to do to process the image with either OpenCV or OpenGL.
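For the WebAPI route, here is a minimal sketch of the request that turns stitching off. It assumes the `_imageStitching` option of `camera.setOptions` is supported by your firmware (my understanding is that recent THETA V and Z1 firmware accept it, but check the API reference), and that the camera is reachable at `192.168.1.1` in Wi-Fi access point mode. The function names `build_stitching_command` and `send_command` are mine, for illustration only.

```python
import json
import urllib.request

# Assumption: 192.168.1.1 is the camera's address in Wi-Fi access point mode.
# From inside a plug-in, the WebAPI is normally reached on the loopback
# address instead (127.0.0.1:8080).
THETA_URL = "http://192.168.1.1/osc/commands/execute"


def build_stitching_command(mode="none"):
    """Return a camera.setOptions payload that sets _imageStitching.

    "none" asks the camera to skip stitching, so the saved image is
    expected to be the unstitched dual-fisheye frame.
    """
    return {
        "name": "camera.setOptions",
        "parameters": {"options": {"_imageStitching": mode}},
    }


def send_command(payload, url=THETA_URL, timeout=5.0):
    """POST a command to the camera and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json;charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the camera connected, `send_command(build_stitching_command())` should disable stitching, and `send_command(build_stitching_command("auto"))` should restore the default. I have not timed either call.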

https://api.ricoh/docs/theta-plugin-reference/camera-api/

OpenGL information is here:

Code is here:

Note that I was not able to get the surface into a Bitmap to save to the SD card. I’ll put a note in the comments of the original Japanese article to ask the author, Meronpan, about the performance of OpenGL versus OpenCV.

Regarding FastCV versus OpenCV, although FastCV uses hardware acceleration, most people are using OpenCV because there are more examples.

I don’t think anyone has timed the processing, but you could try running some tests with this code base:

and swap out the FastCV library calls for OpenCV.
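If you do run that comparison, the timing itself could be structured like the sketch below. It is written in Python only to show the pattern (warm-up runs, repeated measurements, median). The two processing functions are hypothetical stand-ins, not FastCV or OpenCV calls. Inside the plug-in you would wrap the real library calls the same way using the Android clock.

```python
import statistics
import time


def time_call(func, *args, repeats=20, warmup=3):
    """Return the median wall-clock time of func(*args) in milliseconds."""
    # Warm-up runs so one-time setup cost does not skew the samples.
    for _ in range(warmup):
        func(*args)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


# Hypothetical stand-ins for the two implementations being compared.
def process_a(pixels):
    """Invert 8-bit pixel values and return them as a list."""
    return [255 - p for p in pixels]


def process_b(pixels):
    """Invert 8-bit pixel values and return them as bytes."""
    return bytes(255 - p for p in pixels)


def compare(frame):
    """Time both stand-ins on the same frame and return their medians."""
    return {
        "process_a_ms": time_call(process_a, frame),
        "process_b_ms": time_call(process_b, frame),
    }
```

Taking the median over several runs keeps a single slow run (garbage collection, thermal throttling) from dominating the result.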

This presentation has some interesting ideas.