It's cool to see that this was continued! Last year was sort of a shit show because it was a whole lot of work and bootstrapping a bunch of different elements all on an incredibly tight budget, but now you guys can really focus in on improving certain elements and making it less janky.

> We are not using the RICOH API

Just a heads up, you are in fact using the API @codetricity linked. ricoh_api.js is just a file I made that interfaces with that API lol.

> as we’ve verified that the camera is capable of outputting the bit rate for 4K @ 30fps

Whoa, what? You got receipts for that lol? Is this a reference to the 120 Mbps number you mentioned earlier? Is that derived from the “live streaming” stat in the Theta V tech specs on their website? That number is for streaming over USB. Now, if the Theta V can encode 4K@30 and send it out over USB, then it may be possible to get the video out over the wireless controller as well. It depends on the wireless controller. If you want to do this, you are going to have to write your own plugin for the Theta V. And even if you do, it will probably be laggy as hell.

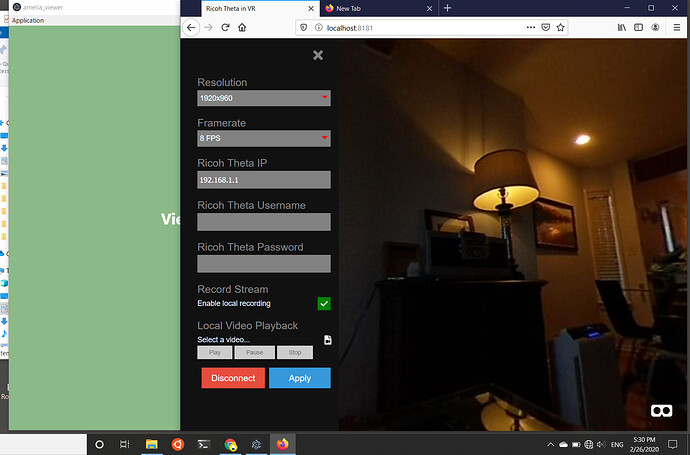

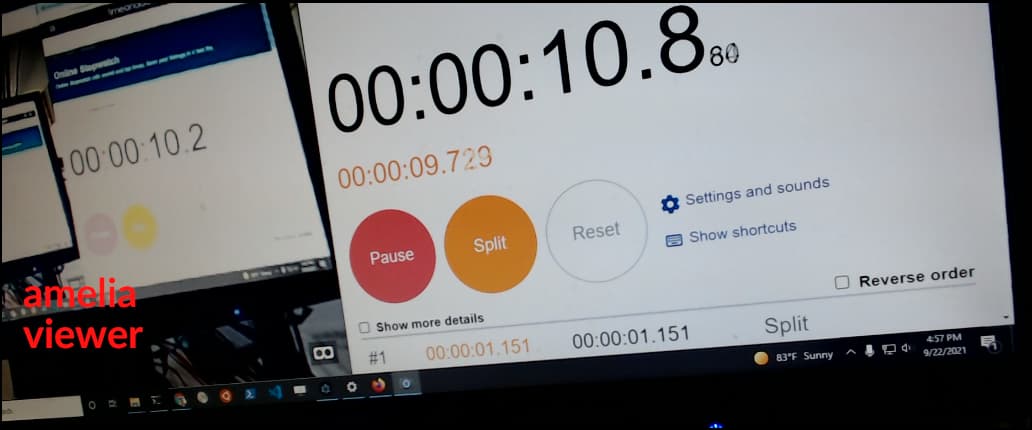

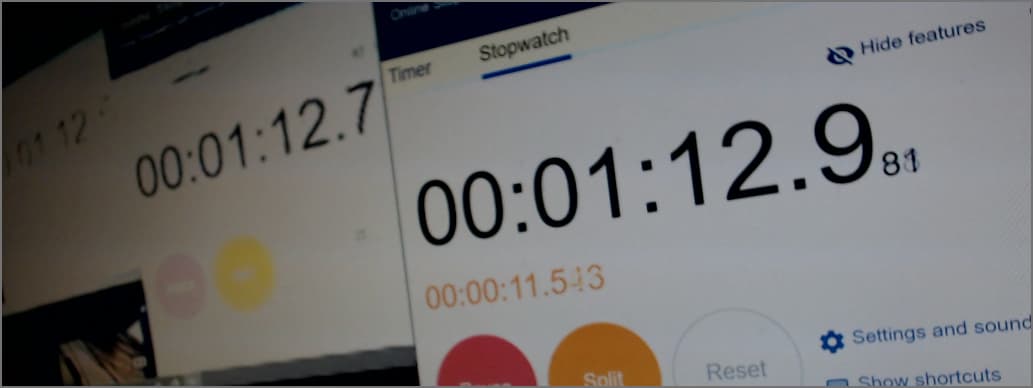

You should probably verify you can actually send 120 Mbps from the Theta V to the base station by writing some test software or using Linux commands from the Android OS. But even then, I think the real issue is that you are bumping into the limits of the live preview function of the Ricoh web API. The buffer isn’t filling up slowly because the base station can’t process the data fast enough, but because the Theta V isn’t sending the data fast enough.
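Here is a rough sketch of what that test software could look like on the receiving end. Everything in it (`mbps`, `drain`, `loopback_demo`) is a name I made up for illustration, and the demo only pushes bytes through a local socket pair. For the real test you would run a sender on the Theta V and `drain()` on the base station over the actual radio link.

```python
import socket
import threading
import time
from typing import Tuple


def mbps(total_bytes: int, seconds: float) -> float:
    """Convert a byte count over a time span into megabits per second."""
    return total_bytes * 8 / seconds / 1e6


def drain(conn: socket.socket, chunk_size: int = 65536) -> Tuple[int, float]:
    """Read until the sender closes; return (bytes received, elapsed seconds)."""
    total = 0
    start = time.perf_counter()
    while True:
        data = conn.recv(chunk_size)
        if not data:  # an empty read means the peer closed the connection
            break
        total += len(data)
    return total, time.perf_counter() - start


def loopback_demo(payload_bytes: int = 4 * 1024 * 1024) -> int:
    """Push payload_bytes through a local socket pair and print the rate.

    Stand-in for the real test (assumption): the sender would run on the
    camera and drain() on the base station, over the real wireless link.
    """
    sender, receiver = socket.socketpair()

    def send() -> None:
        sender.sendall(b"\0" * payload_bytes)
        sender.close()

    worker = threading.Thread(target=send)
    worker.start()
    total, elapsed = drain(receiver)
    worker.join()
    receiver.close()
    rate = mbps(total, max(elapsed, 1e-9))  # guard against a zero interval
    print(f"{total} bytes in {elapsed:.4f}s = {rate:.1f} Mbps")
    return total
```

For reference, 120 Mbps works out to 15 MB every second, so `mbps(15_000_000, 1.0)` is the number you would need to see sustained over the link.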

I think the number you’re seeing, 15 FPS @ 22 Mbps, is in fact an accurate reflection of the system’s max throughput given the Ricoh web API. I wouldn’t be surprised if capturing, compressing, and sending 1920x1080 JPEGs @ 15FPS is actually limited by the Theta V’s own processing power and the wireless controller. The Theta V may simply not be able to generate and send you video frames faster than 15 FPS. So before embarking on optimizing the performance on the receiver side (base station), I would look to see what is going on with the Theta V itself. If you are going to want to increase the framerate, I think you are going to need to write your own plugin on the Theta V. This is all a hunch though, and you may want to verify this.

Personally though I think 15 FPS should be fine for most applications and I would focus on reducing latency, since it may be INCREDIBLY difficult to try and get a higher framerate. And even if you do get a higher framerate out of the camera, your data connection layer may not be able to pump it out fast enough without introducing some unacceptable latency. That said, it would be cool to see a 4K stream, even at just 8 FPS.
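To put rough numbers on that, here is a back-of-the-envelope sketch using the 15 FPS @ 22 Mbps figure. The function names are made up, "4K" is assumed to mean 3840x2160, and the big assumption is that JPEG size scales linearly with pixel count at the same quality, which is only approximately true.

```python
def bytes_per_frame(bitrate_mbps: float, fps: float) -> float:
    """Average compressed frame size implied by a bitrate and a frame rate."""
    return bitrate_mbps * 1e6 / 8 / fps


def scaled_bitrate_mbps(bitrate_mbps: float, fps: float,
                        src_pixels: int, dst_pixels: int,
                        dst_fps: float) -> float:
    """Estimate the bitrate at another resolution and frame rate.

    Assumes the compressed bits per pixel stay constant (a rough guess).
    """
    bits_per_pixel = bitrate_mbps * 1e6 / fps / src_pixels
    return bits_per_pixel * dst_pixels * dst_fps / 1e6


current_frame = bytes_per_frame(22, 15)
four_k_at_8 = scaled_bitrate_mbps(22, 15, 1920 * 1080, 3840 * 2160, 8)

print(f"average frame now: {current_frame / 1000:.0f} kB")
print(f"estimated 4K @ 8 FPS: {four_k_at_8:.1f} Mbps")
```

Under those assumptions each frame is about 183 kB today, and a 4K stream at 8 FPS would need roughly 47 Mbps, a bit over double what you are pushing now.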

The current software you are using is sending an MJPEG (motion-JPEG), so it’s just a series of JPEG images, which are compressed images from the video being captured by the cameras. Note that if you reimplement this API, you can probably configure the quality of your JPEG compression and that may give you more frames/less lag. So currently you are sending the video as a series of images. The alternative is to send an encoded video stream, which uses something like H.264 or H.265 to compress the video with both temporal AND spatial analysis. You are no longer sending/receiving individual frames, but a series of encoded data that software on the receiver knows how to decode into individual frames.
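To make the "series of JPEGs" point concrete, here is a minimal sketch of pulling individual frames out of an MJPEG byte stream by scanning for the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers. `iter_jpeg_frames` is a hypothetical helper, not something from `ricoh_api.js`, and it assumes the frames carry no embedded thumbnails (those contain their own markers and would confuse a naive scan like this).

```python
import io
from typing import BinaryIO, Iterator

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def iter_jpeg_frames(stream: BinaryIO, chunk_size: int = 4096) -> Iterator[bytes]:
    """Yield complete JPEG frames found in a binary stream.

    Anything between frames (for example multipart boundaries and
    headers) is skipped.
    """
    buffer = b""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            return  # end of stream; any partial frame is dropped
        buffer += chunk
        while True:
            start = buffer.find(SOI)
            if start < 0:
                # Keep the last byte in case a marker straddles two chunks.
                buffer = buffer[-1:]
                break
            end = buffer.find(EOI, start + 2)
            if end < 0:
                # Frame is incomplete; keep it and wait for more data.
                buffer = buffer[start:]
                break
            yield buffer[start:end + 2]
            buffer = buffer[end + 2:]


# Tiny synthetic stream: two fake "frames" separated by boundary text.
fake_frame_1 = SOI + b"first" + EOI
fake_frame_2 = SOI + b"second" + EOI
fake_stream = b"--boundary\r\n" + fake_frame_1 + b"\r\n--boundary\r\n" + fake_frame_2

frames = list(iter_jpeg_frames(io.BytesIO(fake_stream), chunk_size=3))
```

In practice you would feed it the live preview response body instead of a `BytesIO`. Every frame stands alone, which is exactly why MJPEG costs so much more bandwidth than an H.264 stream that only sends what changed between frames.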

I would recommend you write your own Theta V plugin that establishes a WebRTC connection with the base station. WebRTC is a P2P media streaming standard that was designed for real-time communication, and supports H.264 (which the Snapdragon 625 has hardware encoding for) for video encoding. It is well documented and is your best bet for low latency video streaming at high resolution and framerate. It is sort of complex and will take some time, but it would be well worth it. Here are some examples of WebRTC. Alternatively, you could try writing a plugin that still streams MJPEG, but converts the images to black and white to allow for higher resolution video at high framerates with less lag. Remember, as each frame requires less data, you will see a decrease in latency. This approach has the added bonus that it won’t require you to completely rewrite the base station software.
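To get a feel for how much the black and white route could buy you, here is a rough count of samples per pixel before compression. The names are made up, and which chroma layout the Theta V's JPEG encoder actually uses is an assumption on my part. Compressed savings will not track these ratios exactly, since chroma usually compresses well already.

```python
# Samples stored per pixel for common JPEG color layouts, before
# entropy coding. "gray" keeps only the luma channel.
SAMPLES_PER_PIXEL = {
    "4:4:4": 3.0,  # full-resolution luma plus two full chroma channels
    "4:2:2": 2.0,  # chroma halved horizontally
    "4:2:0": 1.5,  # chroma halved in both directions
    "gray": 1.0,   # luma only
}


def raw_samples(width: int, height: int, layout: str) -> int:
    """Number of samples the encoder has to process for one frame."""
    return int(width * height * SAMPLES_PER_PIXEL[layout])


def gray_saving(layout: str) -> float:
    """Fraction of samples removed by switching from layout to grayscale."""
    return 1 - SAMPLES_PER_PIXEL["gray"] / SAMPLES_PER_PIXEL[layout]


for layout in ("4:4:4", "4:2:2", "4:2:0"):
    print(f"{layout} -> gray drops {gray_saving(layout):.0%} of the samples")
```

So if the camera is already encoding 4:2:0, dropping color removes about a third of the input samples at best. Still worth trying, but treat it as a modest gain, not a 3x one.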

I will note that you may be able to simply write a plugin that sends an H.264/H.265 stream and see some improvements. The processor on the Theta V has hardware encoding for both of those.

General notes after skimming your project site:

- I noticed you no longer have color video as a requirement. That’s a good idea, you may be able to get better compression and streaming if it’s just black and white! But this would require writing your own plugin, and even then the Theta V Android camera API may not support that.

- In your math for required bandwidth you calculate throughput requirements for RAW video, and don’t mention that there is no way to even stream RAW. You will be getting JPEG or H.264 compressed images/streams, so those numbers aren’t valuable for anything except recognizing how magical compression is.

- This is probably way too late to be mentioning, but they do sell MUCH smaller 5.8/2.4 GHz WiFi receivers that are made for embedded systems. Like <500g systems. But integrating it with the Theta would be a major undertaking, and you need to build/buy your own antenna and base station system.

- Bummed you didn’t go with the auto-tracking system, but I totally get why you opted not to. Auto tracking is something that can always be tacked on later once throughput at range is verified with a given sensor configuration.

- Good stuff setting up the retractable landing gear.

- Really impressive transmission setup. Really good numbers too. Have you actually tested it outdoors yet, at range, and while pushing data? If that setup works that well, well hot damn. Good shit.

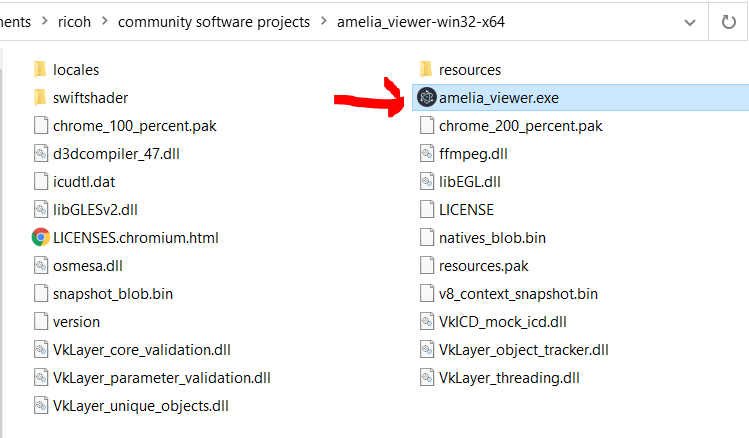

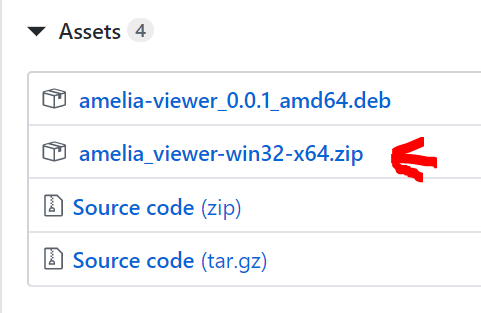
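On the RAW bandwidth point a few bullets up, here is a quick sketch of what that math looks like next to the number you are actually seeing. It assumes 24 bits per pixel for uncompressed RGB, and `raw_bitrate_mbps` is just an illustrative helper.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float,
                     bits_per_pixel: int = 24) -> float:
    """Bitrate of uncompressed video in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


raw = raw_bitrate_mbps(1920, 1080, 15)   # uncompressed, assumed 24-bit RGB
observed = 22.0                          # Mbps, the MJPEG figure quoted above
ratio = raw / observed

print(f"raw: {raw:.1f} Mbps, observed: {observed:.1f} Mbps, ratio ~{ratio:.0f}:1")
```

That is roughly 746 Mbps uncompressed against 22 Mbps on the wire, so JPEG is already squeezing things by about 34:1. The compressed figure is the one that matters for sizing the link.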

- The Electron app (at least where I left it) did not handle the DHCP work. That was all done by the TFTPD application and done manually. Did you update the Electron app to use a DHCP Node module? I believe I mentioned that could be done somewhere. If so, good stuff.

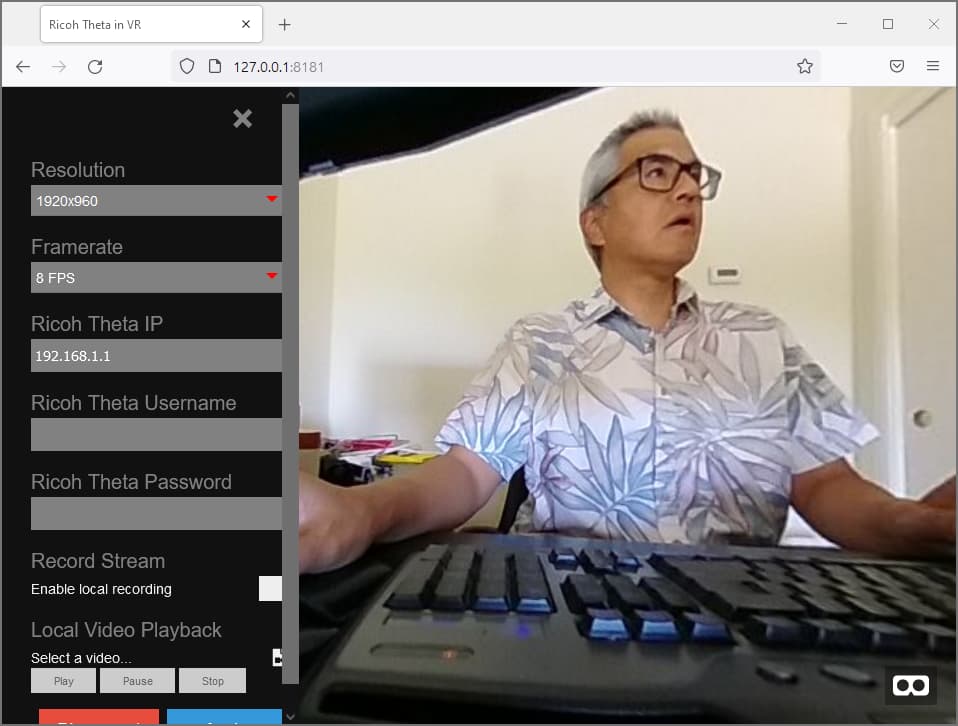

- I am unclear how you are getting 15 FPS at 1920x1080. I remember seeing this number, but figuring out the inner workings of the API wasn’t my top priority at the time. The default Ricoh web API v2.1 claims to only support 8 FPS at 1920x1080. I think it is actually sending frames as fast as possible, and 8 is the guaranteed minimum FPS? Weird that the API documentation would be inaccurate/not mention this.

- IDK why the Cloud Key needed to be purchased. This kind of configuration should have been possible through the web interface for the switch. Sucks that this ended up being a blocking item.

- Use QGC’s waypoint-based mission planner, so you don’t have to worry about a pilot crashing the drone (like I did lol). Obviously keep someone on the sticks in case something goes wrong, but the PX4 on the drone will be able to fly fast and accurately to and from waypoints along a planned mission. This will also make testing a lot easier.

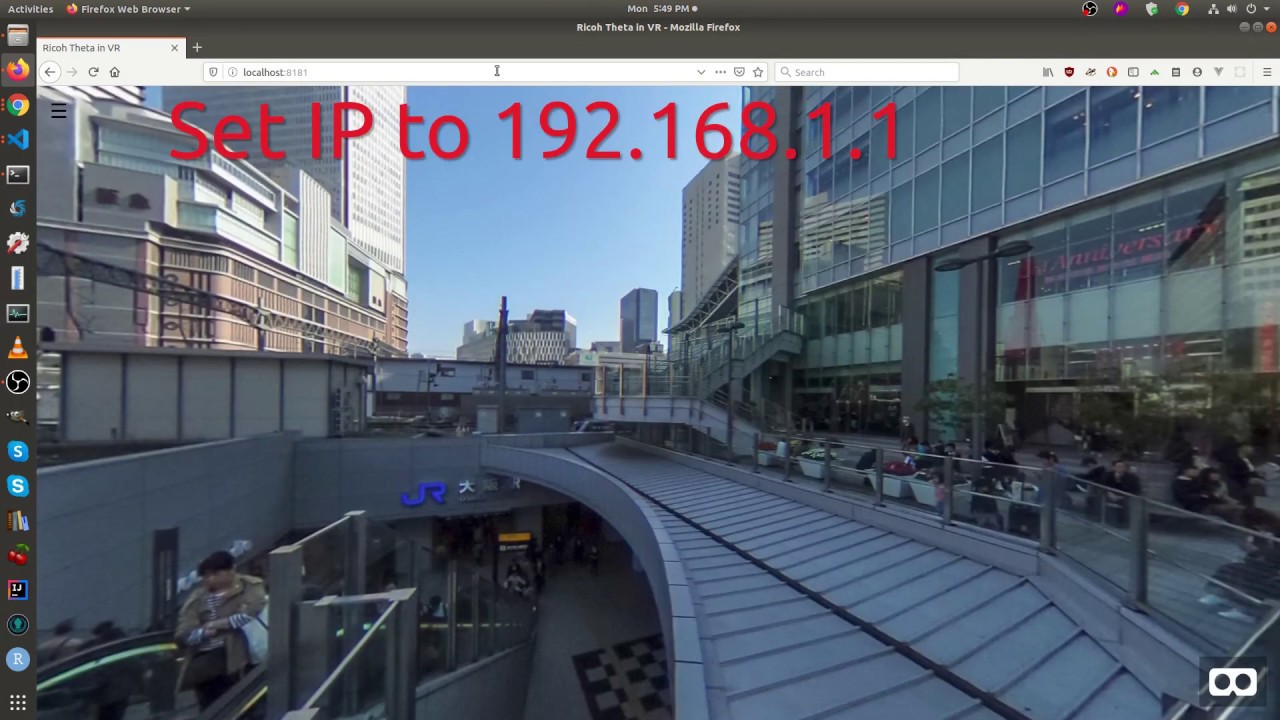

- There is a bug in the way the Ricoh web API fetches and sends the live preview that causes weird stuttering. You can see it in one of our old videos. It is IMMEDIATELY nauseating in VR. As in, it will bring you to your knees, almost instantly. This bug alone is reason enough IMO to write your own plugin.
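If you want to pin down both the real frame rate and the stutter, one option is to log an arrival timestamp for every frame on the base station and look at the gaps. This is only a sketch with made-up helper names, and it assumes the stutter shows up as frames arriving late. If the bug is really repeated or out-of-order frames, timing alone will not catch it.

```python
from statistics import mean, median
from typing import List, Sequence


def frame_intervals(timestamps: Sequence[float]) -> List[float]:
    """Gaps in seconds between consecutive frame arrival times."""
    return [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]


def average_fps(timestamps: Sequence[float]) -> float:
    """Mean frame rate over the whole capture."""
    return 1.0 / mean(frame_intervals(timestamps))


def late_frames(timestamps: Sequence[float], factor: float = 2.0) -> List[int]:
    """Indices of frames that arrived more than factor x the typical gap late.

    The 2x threshold is an arbitrary starting point, not a measured value.
    """
    intervals = frame_intervals(timestamps)
    typical = median(intervals)
    return [i + 1 for i, gap in enumerate(intervals) if gap > factor * typical]


# Made-up arrival times in seconds, with one obvious hiccup before frame 3.
arrivals = [0.0, 1.0, 2.0, 5.0, 6.0]
print(f"average FPS: {average_fps(arrivals):.2f}")
print(f"late frames: {late_frames(arrivals)}")
```

Running the same measurement against the stock live preview and against your own plugin would tell you whether the frame rate ceiling and the stutter really live in the Ricoh web API.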

I am excited to see how things go! Keep posting and asking questions, I am always happy to contribute.