Good morning, Craig. Thank you for all of the incredible information you share on this topic. My colleagues and I are starting a similar project: a Theta X on a helmet will live stream the work of an antenna inspection engineer (those crazy guys who climb the 500’ antenna towers) to a partner on the ground who is wearing a Quest 2. Are you aware of any significant differences in how we might approach this, given any new capabilities in the Theta X, or do you think we should essentially mirror the architecture of the Amelia Drone Project? Kind regards, Todd

The Amelia Drone Project uses livePreview, a lower-framerate motionJPEG stream. On the X, the maximum preview resolution is lower than on the V.

The max preview resolution of the X is {"width": 1024, "height": 512, "framerate": 30}
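Here is a rough sketch of how that preview format would be requested. The Python below builds, but does not send, the two THETA Web API commands involved: camera.setOptions with previewFormat, then camera.getLivePreview. It assumes the camera is in access-point mode at the default 192.168.1.1 address. In client mode the address differs and digest authentication is required, which this sketch does not handle.

```python
import json
import urllib.request
from typing import Optional

# Assumption: camera in access-point (AP) mode at its default address.
THETA_EXECUTE_URL = "http://192.168.1.1/osc/commands/execute"

def build_command(name: str, parameters: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a THETA Web API POST to /osc/commands/execute."""
    body = {"name": name}
    if parameters is not None:
        body["parameters"] = parameters
    return urllib.request.Request(
        THETA_EXECUTE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json;charset=utf-8"},
        method="POST",
    )

# Ask the X for its maximum preview format, then start the motionJPEG preview.
set_preview = build_command(
    "camera.setOptions",
    {"options": {"previewFormat": {"width": 1024, "height": 512, "framerate": 30}}},
)
start_preview = build_command("camera.getLivePreview")
# Send with urllib.request.urlopen(set_preview, timeout=5). The getLivePreview
# response does not end; it is a continuous multipart stream of JPEG frames.
```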

option 1: motionJPEG

Easy to implement, but the resolution is lower and the camera's WiFi is likely not strong enough.

If this is sufficient, then the Amelia Viewer could work to show the preview inside a browser inside the Quest 2.
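If you go the motionJPEG route, the getLivePreview response is a continuous multipart stream of JPEG images. Here is a minimal sketch for pulling out whole frames: split the byte stream on the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers and ignore the multipart headers. It assumes you only need whole frames and that frames carry no embedded thumbnail, since a thumbnail would contain its own SOI/EOI pair.

```python
from typing import Iterable, Iterator

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker

def iter_jpeg_frames(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield complete JPEG frames from a motionJPEG byte stream.

    Scans for SOI/EOI markers and skips the multipart headers between
    frames. Assumes frames carry no embedded thumbnail (which would contain
    its own SOI/EOI pair).
    """
    buf = bytearray()
    for chunk in chunks:
        buf.extend(chunk)
        while True:
            start = buf.find(SOI)
            if start < 0:
                del buf[:-1]  # keep a trailing 0xFF that may start the next SOI
                break
            end = buf.find(EOI, start + 2)
            if end < 0:
                del buf[:start]  # drop header bytes; wait for the rest of the frame
                break
            yield bytes(buf[start:end + 2])
            del buf[:end + 2]
```

In practice, `chunks` could be fixed-size reads from the HTTP response to the getLivePreview request. Each yielded frame can be handed to any JPEG decoder or pushed to a browser viewer.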

option 2: live stream over USB

A potentially better solution would be to connect either the X or the Z1 with a USB cable to a small computer such as a Jetson Nano or NUC, then transmit the stream from the small computer to the base station. The climber would need a small backpack to hold the computer, battery, and WiFi transmitter (connected to the computer).

option 3: use a plugin like the RTSP or HDR Wireless Live Stream (Z1 only)

The HDR Wireless Live Streaming plugin is under active development by @biviel. The RTSP streaming plugin is no longer under development.

Let me know which option sounds feasible and I can provide more technical information on the implementation. Also feel free to ask more questions about each approach.

Thanks for the lightning-fast reply, Craig. I think Option 2 may be the best for our purposes, as we are already looking at connecting our Theta X to a Jetson Nano to run local AI applications in real time, while also live-streaming the feed out to remote viewers. I would certainly welcome your thoughts on two approaches: (1) What is the fastest/simplest path to get video streaming from the Theta X, into the Nano, then out to the Quest 2? (GStreamer, or OBS, perhaps?) (2) What path would provide the highest-resolution stream from the Theta X through the Nano and out to the Quest 2? Our ultimate intention is to connect the Theta X / Nano side to the remote viewer using a high-data-rate radio that functions just like an Ethernet connection (Trellisware TSM radio), so the link between the Nano and the remote viewer will ultimately need to be Ethernet. For now, though, we can work with WiFi just to demonstrate the remote viewing capability. Thank you, again, for your assistance!

Todd

Is the Quest 2 connected to a groundstation with a cable or in communication with something like a windows laptop? If so, you can use Unity and OBS between the Windows PC groundstation and the Quest 2 headset.

Between the Windows PC and the THETA X, you can use gstreamer with this software running on the Jetson Nano.

Go to the bottom posts of this thread for details:

Live Streaming over USB on Ubuntu and Linux, NVIDIA Jetson

You can get 4K 30fps from the USB cable and from the plugin. If you already have a Jetson Nano in the equipment on the tower, then most people would use the Jetson for the transmission. One of the considerations is heat generation inside the THETA if the THETA is handling the WiFi transmission. If the USB cable is used, you can turn off WiFi and reduce heat.

If you’re planning to use Ethernet eventually, I would connect the THETA X to the Jetson Nano with USB.
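Here is a minimal sketch of the Jetson side. It assumes the gstthetauvc plugin's `thetauvcsrc` element (see the thread linked above; check its README for the exact element and property names) delivers the camera's H.264 stream. The sender forwards that H.264 as RTP over UDP without re-encoding, and a matching receiver runs on the Windows ground station. The host address 192.168.1.50 and port 5000 are placeholders.

```python
import shlex
from typing import List

def sender_argv(host: str, port: int = 5000, mode: str = "4K") -> List[str]:
    """gst-launch-1.0 argv for the Jetson: camera H.264 -> RTP/UDP, no re-encode."""
    return [
        "gst-launch-1.0",
        "thetauvcsrc", f"mode={mode}",    # assumption: element from gstthetauvc
        "!", "queue",
        "!", "h264parse",
        "!", "rtph264pay", "config-interval=1", "pt=96",  # resend SPS/PPS every 1 s
        "!", "udpsink", f"host={host}", f"port={port}", "sync=false",
    ]

def receiver_argv(port: int = 5000) -> List[str]:
    """gst-launch-1.0 argv for the Windows ground station: RTP/UDP -> screen."""
    caps = ("application/x-rtp,media=video,clock-rate=90000,"
            "encoding-name=H264,payload=96")  # payload must match the sender's pt
    return [
        "gst-launch-1.0",
        "udpsrc", f"port={port}", f"caps={caps}",
        "!", "rtpjitterbuffer", "latency=50",   # small buffer (ms) to absorb jitter
        "!", "rtph264depay",
        "!", "h264parse",
        "!", "avdec_h264",
        "!", "videoconvert",
        "!", "autovideosink", "sync=false",
    ]

if __name__ == "__main__":
    # Print the commands; pass the lists to subprocess.run(...) to launch them.
    print(shlex.join(sender_argv("192.168.1.50")))  # placeholder ground-station IP
    print(shlex.join(receiver_argv()))
```

Passing an argv list instead of a shell string avoids quoting problems with the caps string. Forwarding the camera's own H.264 keeps the Nano's encoder out of the video path, which frees the Jetson for the AI workload.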

Examples:

Feel free to post more questions. Other people have walked down similar paths. The Quest 2 headset is a bit new and we’re still trying to figure out the best approach. However, it does seem to work with OBS → virtual cam → Quest 2. Unfortunately, I personally do not have a Quest 2 to test.

Ideally, I’d love to connect the Quest 2 directly to the radio, so it is functioning as though it were directly connected to the nano by ethernet…but, yes, we can certainly add a computer with Unity and OBS to the Quest 2 to serve as a ground station.

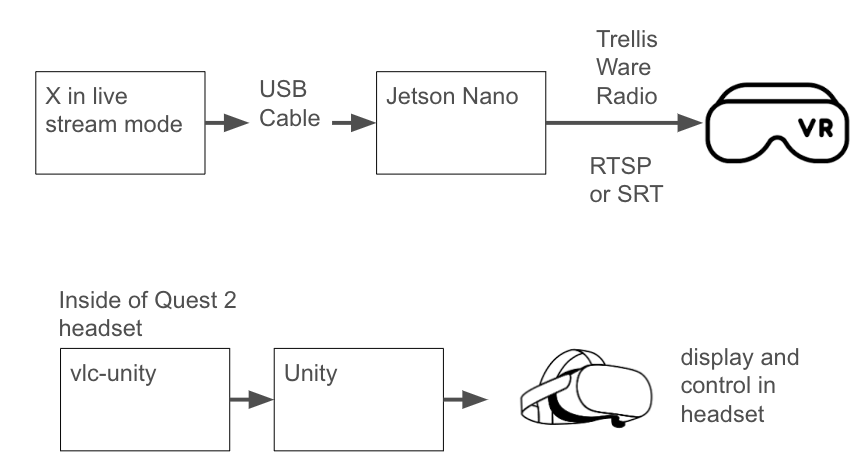

So - it sounds like our best bet to start is: Theta X - USB - Nano (GStreamer + Theta UVC Plugin) - ethernet - Windows (Unity/OBS - virtual cam) - USB - Quest 2. I’ll see if we can get this running.

While we do…do you think there is a way the Quest 2 could directly connect to the Nano over ethernet, and have the Nano directly stream to the Quest 2 over ethernet? If so, it would certainly be a more streamlined architecture.

Thank you, again, Rich!

Todd

option #1 with Unity and vlc-unity

If you do not have a base station, you can try and use VLC Unity running inside the Quest 2. At the current time, no one in the community has tried this. You would be blazing a new path.

The camera (with plugin) or the Jetson can output RTSP and the VLC library can ingest RTSP. The VLC Unity should then be able to get the stream into Unity. We do not have example code for vlc-unity running inside the headset.

vlc-unity appears to use LibVLCSharp (C#), which does have examples:

I do not know the Quest's capability with Ethernet. However, if the Quest 2 supports Ethernet and you get vlc-unity running inside the headset, you should be able to get it to work.

If you get it working, please post tips.

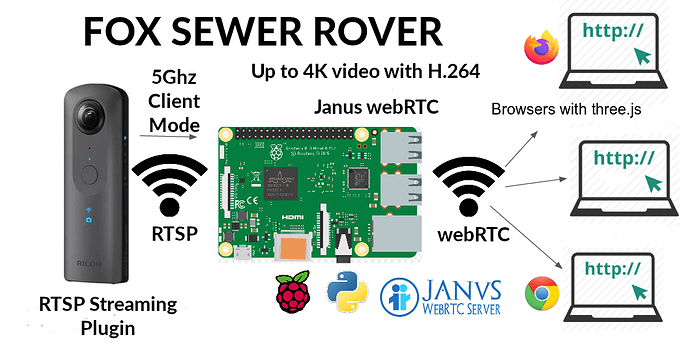

option #2: alternative to unity using webrtc and web browser technology

You can avoid using Unity if you have the Jetson stream webrtc to the headset and use browser technology inside the headset.

People have used the Janus gateway with the THETA.

https://janus.conf.meetecho.com/

You run Janus on the Jetson.

Hugues from this forum built this:

BTW, if this is a potential high-volume sales opportunity for RICOH, please let me know either here or by DM. I’ll send you an email at the address you used to register for the forum. I can ask more people at RICOH for their opinion on design options, but I want to give them a heads-up on whether this is potentially a product that embeds the THETA or a research project. Either way is fine for this forum.

This is the concept under discussion. Note that there are no success reports yet of eliminating the laptop connected to the headset and getting the 4K 30fps stream.

Alternate approach: I believe that @biviel has his plugin streaming to the VR headset through his cloud-based system with a latency of a few seconds (or lower) using SRT. However, the stream would go out to his system first. It may be possible to use his plugin with vlc-unity.

Yes, I can confirm that my plugin is able to stream directly to VLC, and it should work with Unity VLC too, which can run directly in the headset. I must also highlight that when using my plugin, the stream doesn't go to my server at all, but to a destination server that can be set manually to any destination that supports RTMP, RTSP, or SRT.

Another option is to stream to my server as well; this is optional and in an alpha state for now.

@tkwoodrick if you assess the plugin from @biviel , you should use the Z1 as the X has some additional heat issues for streaming more than 15 minutes.

How long does the camera need to stream for?

Can you use video saved to file instead of streaming?

I forgot to mention that my updated plugin can start or stop streaming remotely via the internet. I really have to collect all the changes I made, and of course I will also let users remove my flow tours logo during the stream. Fast roaming within a WiFi mesh network is also a cool feature. I improved the heat optimizations too, so it now automatically lowers the maximum fps a bit when it starts to overheat, etc. The most important feature is SRT on top of the existing RTMP and RTSP; UDP vs. TCP transfer matters a lot. It's not yet submitted to the store, but I will do so soon. I'm excited about streaming wirelessly with 200-300 ms latency or less to VLC, OBS, or vMix on the local network.

@biviel when you stream directly into the Quest 2 using a browser, how do you control the navigation of the 360 stream? Is there some type of control that matches the view with the position of the head?

@tkwoodrick , this sounds cool! Here are some thoughts:

Definitely connect the Theta to the Jetson via USB, and then use gstreamer to pump the video out however you want. Now that the Theta plays nice with gstreamer, you can really do anything. For point-to-point streaming over WiFi with minimal latency from gstreamer, use one of the many open-source low-latency libraries. Pretty much everything will be under 300 ms transmission time, but use something that you know uses hardware acceleration on the Jetson (a ton of libraries have ensured compatibility with the Jetson). If you pick a library that publishes a well-known standard, then on the receiver you could probably just install an app that lets you connect to a WebRTC/SRT source and pump that into the Quest.
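For the SRT option, here is a sketch that assumes the gstthetauvc `thetauvcsrc` element provides the camera's H.264. The stream is wrapped in MPEG-TS and served by GStreamer's `srtsink` (from gst-plugins-bad) in listener mode, so a receiver such as VLC can connect as caller to `srt://<jetson-ip>:8888`. Port 8888 and the 120 ms latency are placeholder values to tune for your link.

```python
from typing import List

def srt_sender_argv(port: int = 8888, latency_ms: int = 120,
                    mode: str = "4K") -> List[str]:
    """gst-launch-1.0 argv: camera H.264 -> MPEG-TS -> SRT listener."""
    return [
        "gst-launch-1.0",
        "thetauvcsrc", f"mode={mode}",           # assumption: element from gstthetauvc
        "!", "queue",
        "!", "h264parse", "config-interval=-1",  # repeat SPS/PPS with every IDR frame
        "!", "mpegtsmux",
        "!", "srtsink", f"uri=srt://:{port}", "mode=listener",
        f"latency={latency_ms}",                 # SRT latency window in ms
    ]
```

Listener mode on the Jetson means the ground station initiates the connection, so the viewer can come and go without restarting the pipeline.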

If you are really ambitious you could program a Unity app for the Quest to view the stream directly and cut out the receiver PC entirely. I haven’t done any development with it yet, but it looks like developers get a TON of control over the Quest, so I bet if you connect the Quest to the same network as the Nano you could develop an app that communicates directly between them. It might require some hackery, but def seems possible.

Also, while you are at it, you should measure the bandwidth usage over WiFi, because some of these low-SWaP radio connections can easily be swamped by even simple video streaming. And it doesn’t help that the normal performance is always 1/2 of what's on the datasheet.
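Here is a minimal way to act on that advice. Count the bytes received over a timed window (from a socket, a file, or any iterator of chunks), then compare the result against the radio's datasheet rate, discounted by the rule of thumb that real throughput is about half of it. The extra 0.8 headroom factor is an assumption of this sketch, not something from the thread.

```python
import time
from typing import Callable, Iterable

def measure_mbps(chunks: Iterable[bytes],
                 clock: Callable[[], float] = time.monotonic) -> float:
    """Average throughput in Mbit/s while consuming an iterable of byte chunks."""
    start = clock()
    total_bytes = sum(len(chunk) for chunk in chunks)
    elapsed = clock() - start
    return total_bytes * 8 / elapsed / 1e6 if elapsed > 0 else 0.0

def fits_link(stream_mbps: float, datasheet_mbps: float,
              derate: float = 0.5, headroom: float = 0.8) -> bool:
    """True if the stream fits the derated link with some headroom to spare.

    derate=0.5 follows the rule of thumb (real ~ half the datasheet);
    headroom=0.8 is an assumed safety margin for retransmissions and bursts.
    """
    return stream_mbps <= datasheet_mbps * derate * headroom
```

For example, feed in the packets from a UDP socket's `recv()` loop for about ten seconds while the stream is running, then check the measured rate against the radio's published figure.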

Currently I’m not streaming directly into the Quest 2, only via my server and a web browser. Streaming directly from my plugin into VLC-Unity should work, but so far I have only tested streaming directly into VLC on the desktop.

I think that the best quality and lowest latency would come from streaming directly from the camera. With USB streaming the quality will be lower (no HDR preview), and encoding on the Jetson Nano takes time too, so I’m not sure what the benefits of doing so would be. The Z1 has a built-in H.265 hardware encoder…

Programming a Unity app using the VLC Unity module would do the job with the best quality.
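On the navigation question raised earlier, a common approach for any player running in the headset (browser or Unity with VLC) is to map the equirectangular frame onto the inside of a sphere and let the head pose pick the view. The sketch below shows the underlying math: it converts a head direction vector into the equirectangular pixel at the centre of view. It assumes a WebXR-style frame (+Y up, -Z forward, +X right), the X's 1024x512 preview size, and that yaw 0 is the centre of the frame. Other engines (Unity uses +Z forward) and other cameras may need signs flipped.

```python
import math
from typing import Tuple

def direction_to_yaw_pitch(x: float, y: float, z: float) -> Tuple[float, float]:
    """Yaw/pitch in degrees for a view direction (assumed +Y up, -Z forward)."""
    yaw = math.degrees(math.atan2(x, -z))                  # 0 = forward, +90 = right
    pitch = math.degrees(math.atan2(y, math.hypot(x, z)))  # +90 = straight up
    return yaw, pitch

def yaw_pitch_to_equirect(yaw_deg: float, pitch_deg: float,
                          width: int = 1024, height: int = 512) -> Tuple[int, int]:
    """Pixel in an equirectangular frame whose centre is yaw 0, pitch 0."""
    u = ((yaw_deg + 180.0) % 360.0) / 360.0              # 0..1 left to right
    v = min(max((90.0 - pitch_deg) / 180.0, 0.0), 1.0)   # 0 top, 1 bottom
    return min(int(u * width), width - 1), min(int(v * height), height - 1)
```

In a real viewer, this lookup happens per pixel on the GPU (the sphere's texture coordinates do it for you). The function is mainly useful for checking that head motion and image direction agree.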

I was looking into this, and there is a WebRTC Unity plugin… I might take a go at making a simple app for the Quest 3 that plays nice with WebRTC. I think the “dream” is for the Theta to stream directly to the Quest 3, but for performance applications the WiFi range of the Theta will never be enough, so developing around having the Theta connected to an SBC is probably worthwhile.

Thanks, Jake!! I was pulled into something else for the last 36 hours, but I'm diving back into this project now. I really appreciate your proactivity and access to your brainpower as we work towards a first prototype.

Todd

That would be awesome. For the antenna inspection, I think they’re willing to use a Jetson Nano in the backpack. They need to eventually connect the THETA to a Trellisware TSM radio, so I imagine that they’re okay with an SBC.

The original poster didn’t mention which Trellisware product they are considering. However, maybe it is this one:

or this

I remember that you used a Ubiquiti Rocket 5AC Lite in your drone project with an airMAX omni 5GHz antenna.

maybe hire him as a part-time consultant? He’s the author of the Amelia Drone viewer.

Indeed! Would definitely be interested. Can I provide my direct email on this community site, or is that forbidden?

Todd

You can provide your direct email, but then you might be spammed by bots. You can send him a direct message instead.

I don’t know what Jake is involved in now. He does have relevant experience, so if he has any free time, definitely worth discussing things with him.

Perfect - please send his contact info and I’ll hit him up directly. Thanks, Craig!