I have some great news!

Following up on @craig's post:

I recently conducted tests using the older Unity version (2020.3.5f1) to determine whether the issue lay with Unity or Windows 11.

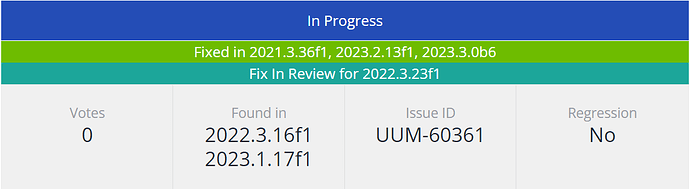

It turns out the problem stemmed from Unity itself. Upon further investigation, I stumbled upon this bug report: CRASH ON BASEVIDEOTEXTURE::INITVIDEOMEMORY WHEN CAPTURING THETA V’S OUTPUT TEXTURE

The good news? Unity has already developed a solution for it.

I proceeded to test it with the beta version (as I was unaware of other options at the time!):

Unity 6000.0.0 Beta 11

Now we no longer require OBS; we can use the UVC driver directly within Unity.

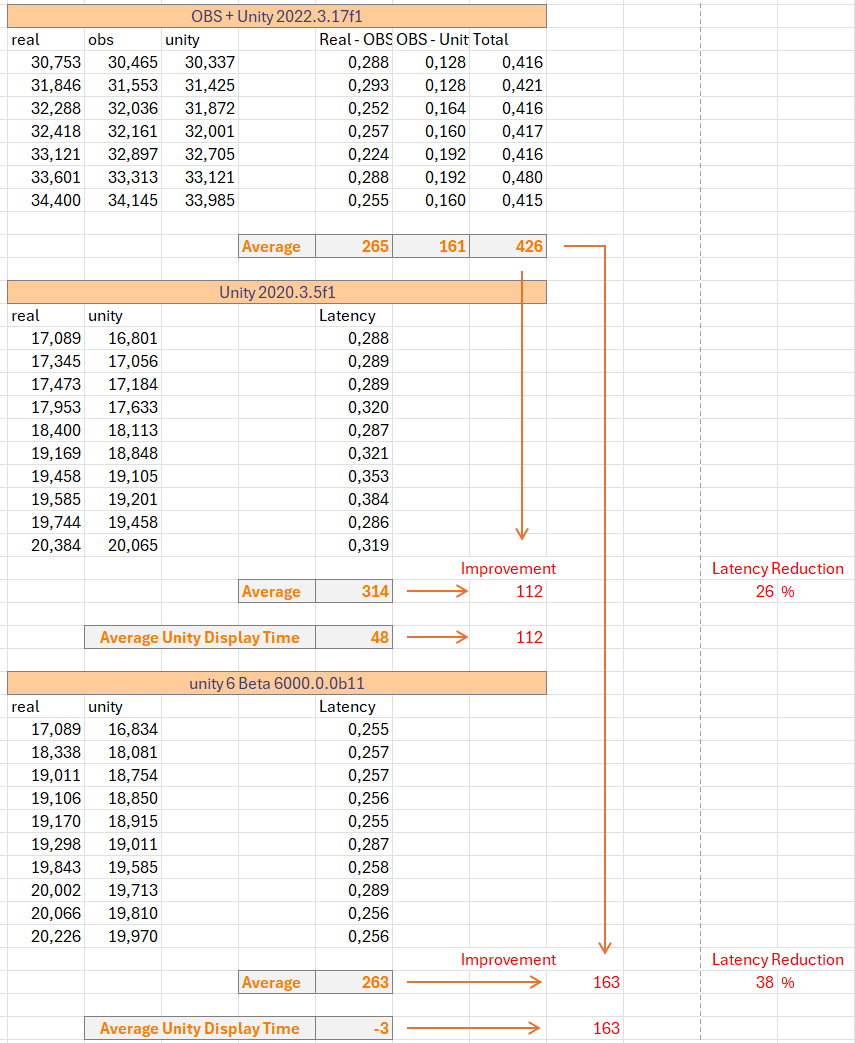

Here’s an update on my latency testing:

The screenshot indicates a notable enhancement, with a reduction in latency of 163 ms, equating to a 38% improvement.

I came across an interesting observation when comparing the 2020 version with the Beta release: the time Unity takes to display the camera feed. I’ve termed this the “Average Unity Display Time.” Since I don’t have a direct means to measure this time, I estimated it by subtracting the average latency I obtained from the Z1 to OBS from the average latency of the current method.

My assumption was that OBS displays the feed instantly, so the latency measured from the Z1 to OBS (265 ms) is the time the Z1 itself takes to capture the image.

With this in mind, here are my findings:

- In the 2020 version, Unity introduced 48 ms of latency.

- In the Beta release, we get -3 ms. This is within the fluctuation of the measurements, suggesting that Unity didn’t introduce any additional latency compared to OBS.
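
For anyone who wants to repeat the estimate, here is a minimal Python sketch of the subtraction described above. The 265 ms baseline is the Z1-to-OBS average from this post. The function name and the sample lists are hypothetical: the samples are placeholders chosen only so that they average 313 ms and 262 ms, the values implied by the 48 ms and -3 ms results, and they are not my actual readings.

```python
from statistics import mean

# Average Z1-to-OBS latency from the post, assumed to be pure camera-side delay
# (i.e. OBS is assumed to display the feed instantly).
Z1_TO_OBS_MS = 265


def unity_display_time(samples_ms, baseline_ms=Z1_TO_OBS_MS):
    """Estimate Unity's display overhead: mean measured latency minus the baseline."""
    return mean(samples_ms) - baseline_ms


# Hypothetical placeholder samples (not real measurements).
unity_2020_samples = [310, 313, 316]  # averages 313 ms
unity_beta_samples = [260, 262, 264]  # averages 262 ms

print("Unity 2020 display time (ms):", unity_display_time(unity_2020_samples))
print("Unity 6 Beta display time (ms):", unity_display_time(unity_beta_samples))
```

A small negative result, as with the Beta release, just means the Unity average came out slightly below the OBS baseline, which is expected when the two are effectively equal and the readings fluctuate by a few milliseconds.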

P.S. @jcasman, as I was browsing through Unity’s Forum, I stumbled upon one of your posts (Solution: Connecting RICOH THETA Z1 with Unity). I also left a comment there, sharing this update.