I’ve been working on a robotic control system using the HTC Vive for a few months now. Part of this system involves live streaming a 360° feed into a virtual reality headset, which was easier said than done. There are a lot of instructions, tutorials, and guides out there, but none of them did exactly what I wanted, so I figured I would write this up for anybody who wants to do something similar using SteamVR or other VR APIs in Unity. I’ll update this post with the link once my solution is on GitHub!

The first solution I found gave me a basis to move forward with, but left a lot of questions unanswered because it never actually implemented the live feed. The second gave me the basis for getting a video into the scene, but as most of us have found out the hard way, the UVC Blender does not work in Unity. That means in Unity you can only use the dual-fisheye feed, so you need to combine parts of both solutions. This approach also works for the feed from an HDMI-to-USB capture card, since that feed is dual-fisheye as well. You could also adapt it to work with Oculus.

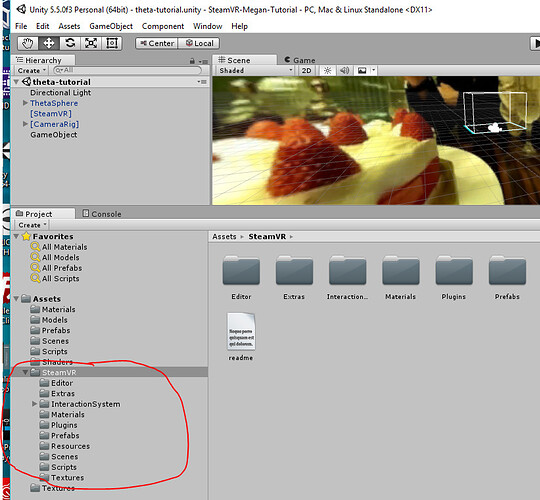

Note: I’m using Unity 5.5.0f3 and SteamVR assets version 1.2.0 (the latest as of this post). Save frequently when working in Unity, as it can crash (a lot).

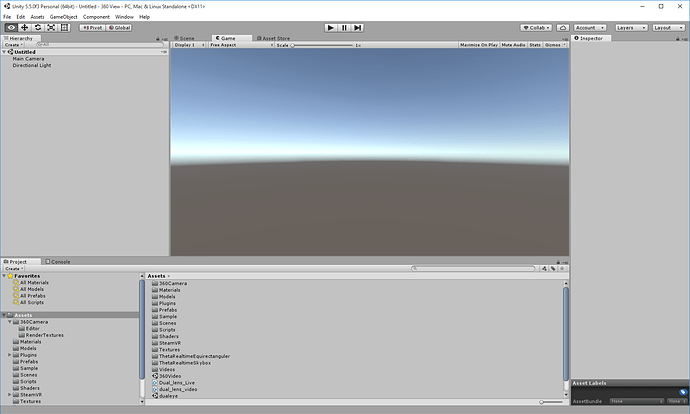

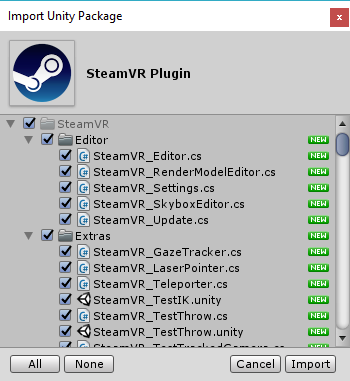

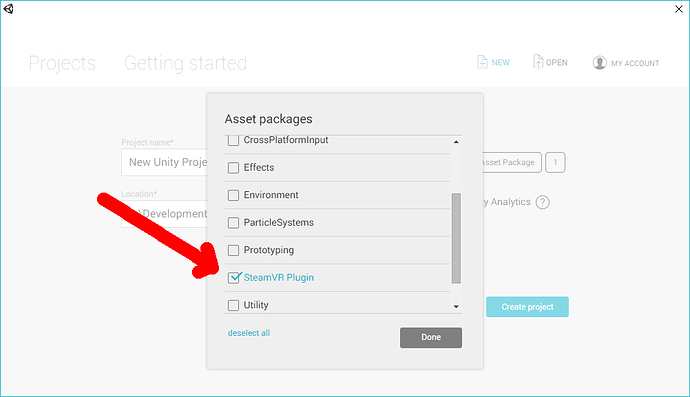

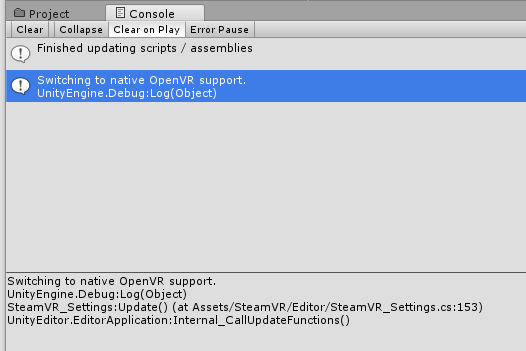

Download the Unity package from this blog post. Open a new project in Unity and import both the package and the SteamVR assets.

Delete the Main Camera.

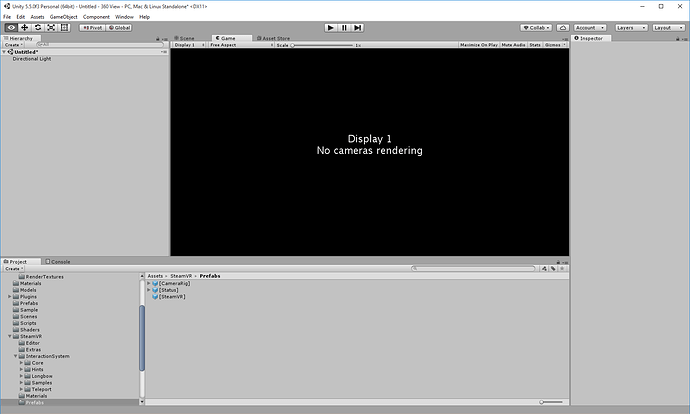

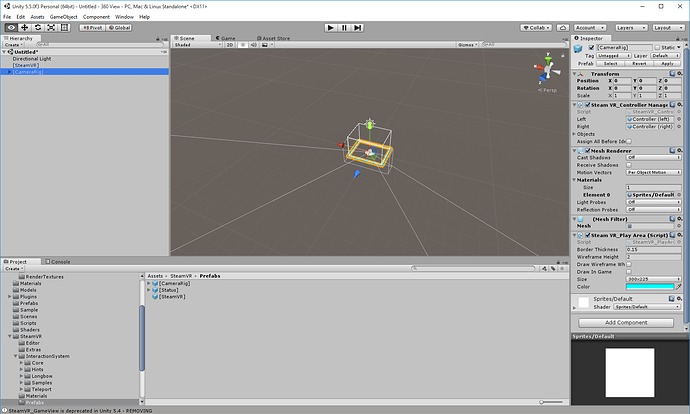

Add the SteamVR and CameraRig prefabs to the scene. Set the CameraRig’s position to x: 0, y: 1, z: -10, and deselect both hand controllers, as we won’t be using them for this project.

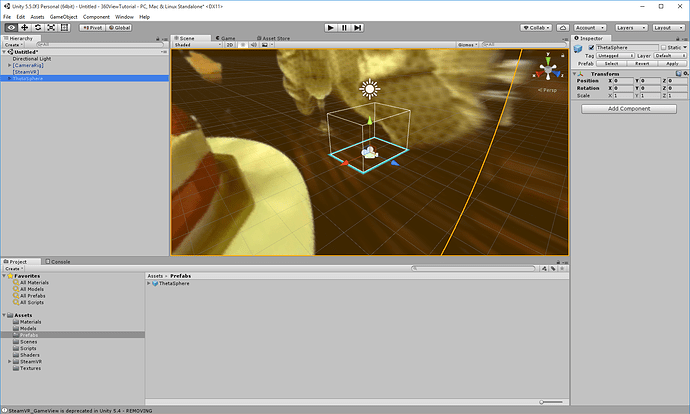

Now add the theta-sphere prefab from the package’s assets.

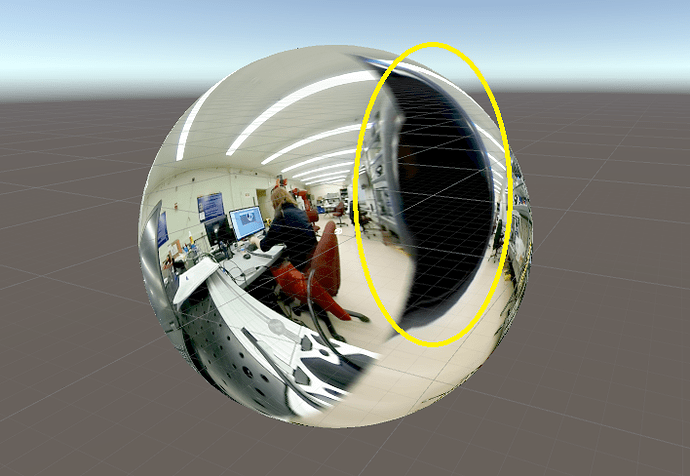

The sphere prefab has most of what you need. However, I noticed that when streaming over USB there are big gaps on the sphere where the stitching isn’t great. We will fix that later (as best we can).

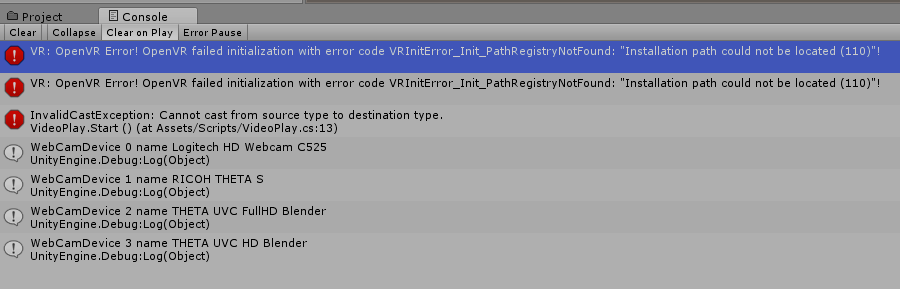

Next, you need a script that projects the camera feed onto the two spheres. It is based on the script from the second post I mentioned, but rather than projecting an equirectangular image onto one sphere, you need to project each lens’s feed onto its own sphere (because, as of this post, Unity does not recognize the UVC Blender as a webcam).

You will need two scripts: one that projects the webcam feed and another that plays it.

For the webcam projection I made a script called WebcamDualEyed. Create a C# script with that name (Unity requires the file name to match the class name) and copy in the code below.

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

// Streams a webcam (the raw dual-fisheye Theta S feed) onto both theta spheres.
public class WebcamDualEyed : MonoBehaviour {

    public GameObject sphere1;

    // Second sphere added by Meg
    public GameObject sphere2;

    // Index into WebCamTexture.devices; the log in Start() lists the options
    public int cameraNumber = 0;

    private WebCamTexture webCamTexture;
    private WebCamDevice webCamDevice;

    void Start()
    {
        // Checks how many and which cameras are available on the device
        for (int cameraIndex = 0; cameraIndex < WebCamTexture.devices.Length; cameraIndex++)
        {
            Debug.Log("WebCamDevice " + cameraIndex + " name " + WebCamTexture.devices[cameraIndex].name);
        }

        // Guard against an out-of-range (including negative) camera number
        if (cameraNumber >= 0 && WebCamTexture.devices.Length > cameraNumber)
        {
            webCamDevice = WebCamTexture.devices[cameraNumber];
            // Requests 1280x720; Unity falls back to the closest mode the device supports
            webCamTexture = new WebCamTexture(webCamDevice.name, 1280, 720);

            // Both spheres receive the same dual-fisheye texture
            sphere1.GetComponent<Renderer>().material.mainTexture = webCamTexture;
            // Added by Meg
            sphere2.GetComponent<Renderer>().material.mainTexture = webCamTexture;

            webCamTexture.Play();
        }
        else
        {
            Debug.Log("no camera");
        }
    }
}
```

To play the feed I made a script called VideoPlay. Create your own and copy in the code below.

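```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

// Loops and plays any MovieTexture assigned to the spheres' materials.
public class VideoPlay : MonoBehaviour {

    public GameObject Sphere1;
    public GameObject Sphere2;

    void Start()
    {
        // "as" instead of a direct cast: if WebcamDualEyed has already assigned a
        // WebCamTexture, a (MovieTexture) cast would throw InvalidCastException.
        MovieTexture video360_1 = Sphere1.GetComponent<Renderer>().material.mainTexture as MovieTexture;
        MovieTexture video360_2 = Sphere2.GetComponent<Renderer>().material.mainTexture as MovieTexture;

        if (video360_1 != null)
        {
            video360_1.loop = true;
            video360_1.Play();
        }

        if (video360_2 != null)
        {
            video360_2.loop = true;
            video360_2.Play();
        }
    }
}
```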

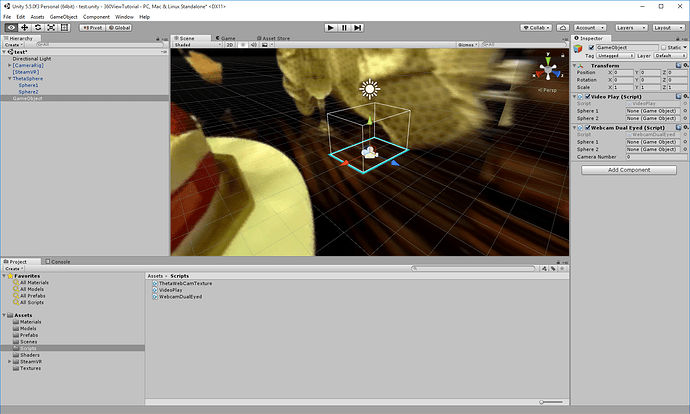

Now create an empty GameObject and attach both scripts to it.

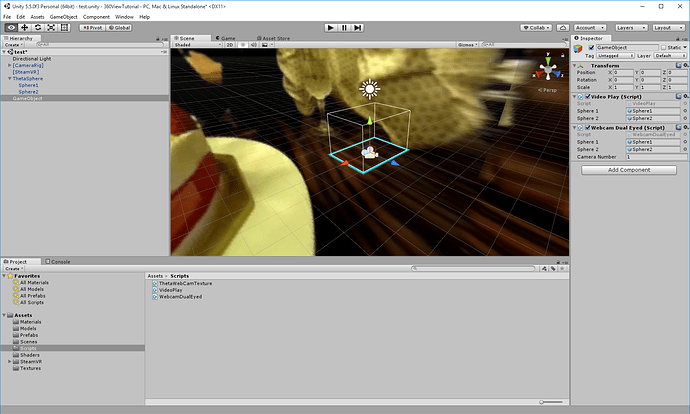

Now set the variables for sphere 1 and sphere 2: drag each sphere from the Hierarchy into its matching slot on both scripts.

Now plug in your Theta and start live streaming mode.

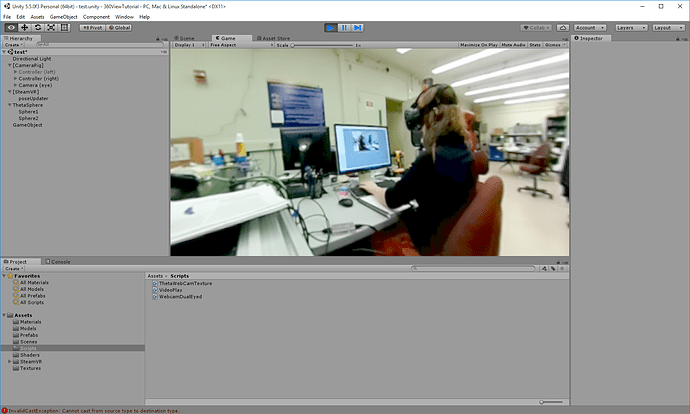

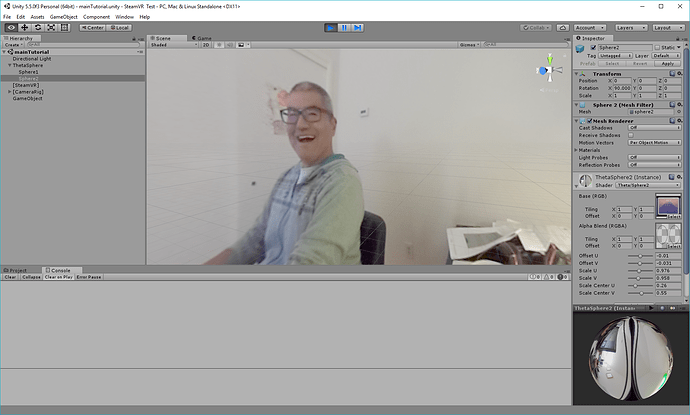

Set the camera number on the object to whichever number corresponds to the raw Ricoh Theta S feed. Mine happens to be 1, which I’ve already set in the image above. When you play the scene, the console shows which webcam corresponds to each number.

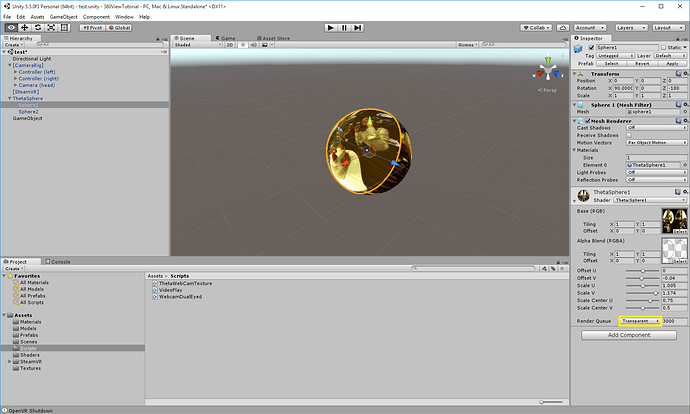
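If you would rather not read the index off the console every time (the numbering may change if you plug devices in differently), you could match on the device name instead. This is a minimal sketch under that assumption: `WebcamLookup` and `FindDeviceIndex` are hypothetical names of my own, not part of Unity, and the name your Theta reports is also an assumption, so check the console log for the exact string.

```csharp
using System;

// Hypothetical helper (not part of Unity or the package): finds a webcam by name
// instead of by a hard-coded index.
public static class WebcamLookup
{
    // Returns the index of the first device name containing nameFragment
    // (case-insensitive), or -1 if nothing matches.
    public static int FindDeviceIndex(string[] deviceNames, string nameFragment)
    {
        if (deviceNames == null || string.IsNullOrEmpty(nameFragment))
        {
            return -1;
        }

        for (int i = 0; i < deviceNames.Length; i++)
        {
            if (deviceNames[i] != null &&
                deviceNames[i].IndexOf(nameFragment, StringComparison.OrdinalIgnoreCase) >= 0)
            {
                return i;
            }
        }

        return -1;
    }
}
```

Inside `WebcamDualEyed.Start()` you could then call `WebcamLookup.FindDeviceIndex(System.Array.ConvertAll(WebCamTexture.devices, d => d.name), "THETA")` and use the result as `cameraNumber` when it isn’t -1. Pick a fragment that matches only the raw feed, since more than one Theta-related device may show up in the list.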

Now change the render queue of both spheres’ materials to Transparent.

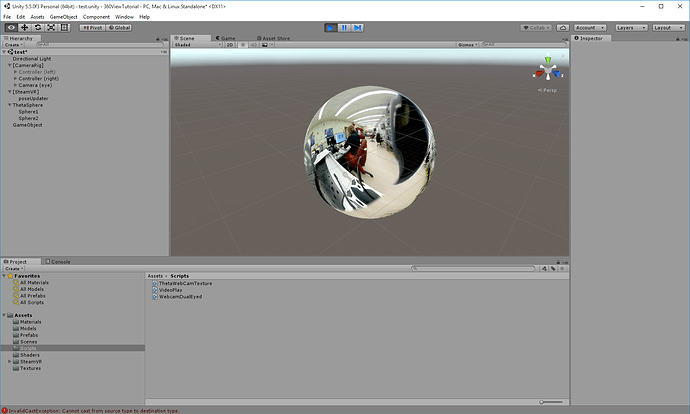
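If you’d rather not set this by hand in the Inspector, a small script can do the same thing when the scene starts. This is a sketch, not part of the package: the class name `SphereRenderQueue` is my own, and it relies on Unity’s convention that the Transparent render queue is 3000.

```csharp
using UnityEngine;

// Hypothetical helper (not from the package): puts both theta spheres'
// materials in the Transparent render queue when the scene starts.
public class SphereRenderQueue : MonoBehaviour {

    // Unity's built-in "Transparent" render queue value
    private const int TransparentQueue = 3000;

    public GameObject Sphere1;
    public GameObject Sphere2;

    void Start()
    {
        // .material returns this renderer's own material instance,
        // so the shared material asset is left unchanged
        Sphere1.GetComponent<Renderer>().material.renderQueue = TransparentQueue;
        Sphere2.GetComponent<Renderer>().material.renderQueue = TransparentQueue;
    }
}
```

Attach it to the same empty GameObject as the other two scripts and drag the spheres into its slots.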

Now plug in your Vive, start up SteamVR, and press Play.

When you switch from the Scene view to the Game view, the feed should display in your headset!

You should be able to see most of the field of view, though there are some big black chunks missing.

This can be a little disorienting in virtual reality!!

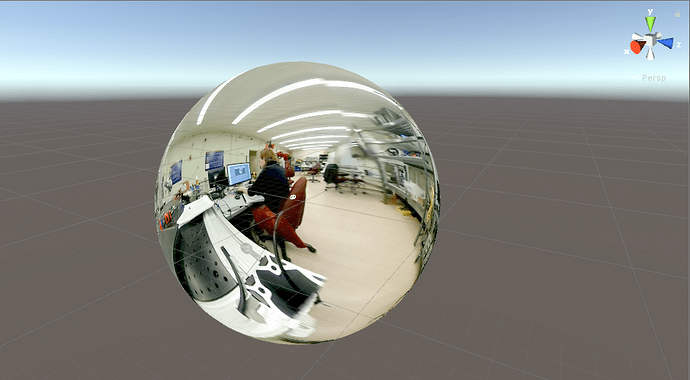

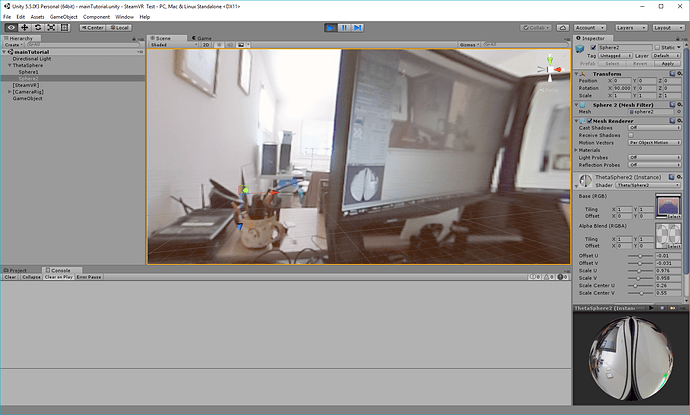

I haven’t had the chance yet to go into Maya to recreate the template the shader uses, but I’ve made some manual adjustments that make it less awkward and close up the holes a little (it’s not perfect, but it’s better than the holes! If anybody figures out a better way, please let me know).

Go to the shader settings for each sphere and change the values to the following:

| Setting | Sphere1 | Sphere2 |
|---|---|---|
| Offset U | 0.013 | -0.01 |
| Offset V | 0.007 | -0.031 |
| Scale U | 0.983 | 0.976 |
| Scale V | 1.149 | 0.958 |
| Scale Center U | 0.0686 | 0.26 |
| Scale Center V | 0.5 | 0.55 |

This should close up the holes pretty well, and now you can view your 360° live feed in VR!

Let me know if you have any questions! (I didn’t quite manage to capture the inception happening with the camera.)