Greetings,

Sorry for taking so long, but these last couple of weeks have been chaotic.

OK, so here is how to use WebRTC to livestream from a Ricoh Theta to Unity.

Requirements – (Unity Page)

This version of Render Streaming is compatible with the following versions of the Unity Editor

- Unity 2020.3

- Unity 2021.3

- Unity 2022.3

- Unity 2023.1

Platform

- Windows (x64 only)

- Linux

- macOS (Intel and Apple Silicon)

- iOS

- Android (ARM64 only. ARMv7 is not supported)

NOTE

This package depends on the WebRTC package. If you build for a mobile platform (iOS/Android), please see the package documentation for the build requirements.

Browser support

Unity Render Streaming supports almost all browsers that can use WebRTC.

| Browser | Windows | Mac | iOS | Android |
|---|---|---|---|---|
| Google Chrome | | | | |
| Safari | | | | |
| Firefox | | | | |
| Microsoft Edge (Chromium based) | | | | |

NOTE

It may not work properly on some browsers, depending on their level of WebRTC support.

NOTE

In Safari and iOS Safari, WebRTC features cannot be used with http. Instead, https must be used.

SETUP – (Unity Page)

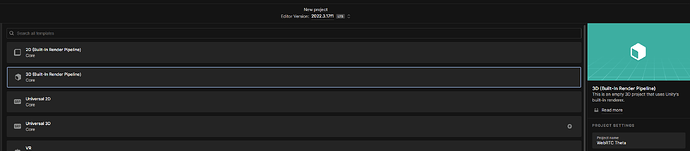

1º- Create a Unity project. We used Unity 2022.3.17f1 LTS.

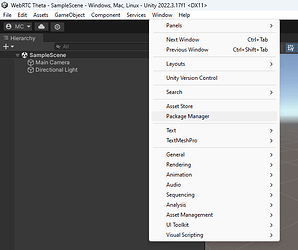

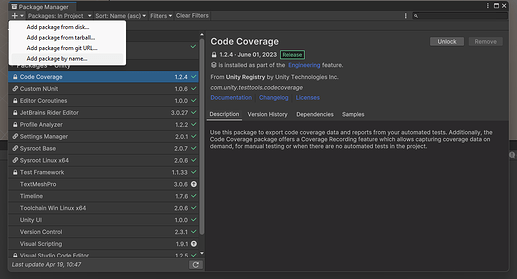

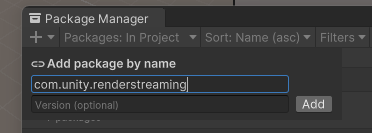

2º- Go to the Package Manager and Add by Name: com.unity.renderstreaming.

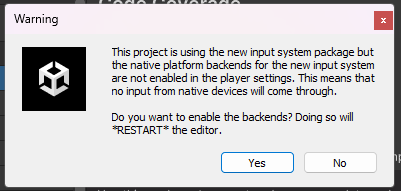

You will probably get a warning about the input system. Press Yes and wait for Unity to restart.

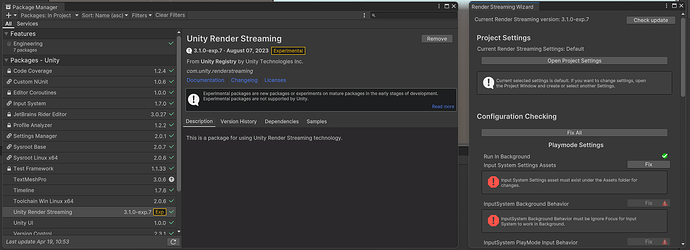

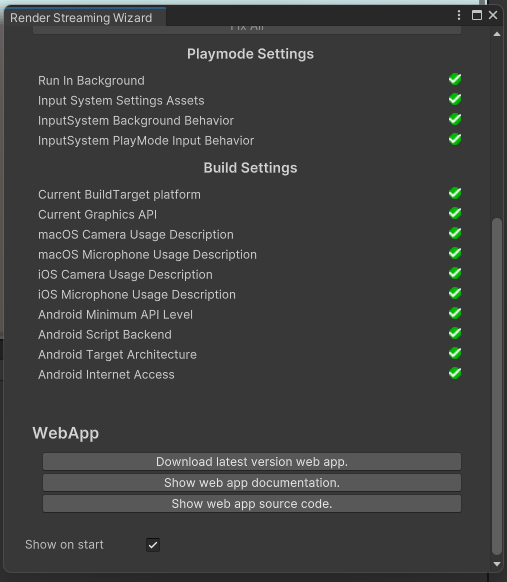

3º- After installing the package, a new window called “Render Streaming Wizard” will pop up. It is used to correct all the configuration needed for this project. Every time you open the project, this window will appear and check whether everything is OK. If not, just press Fix All, and Unity will handle the work for you. (Don’t close the Render Streaming Wizard, you will need it in the next step)

4º- The next thing to do is to download the WebApp. This is a JS script that you will run on one of the peers. In this case, because we are looking to implement this in a robot, we prefer running the JS on the robot side and the Unity app on the user side. This is also interesting if you are aiming to use a Raspberry Pi or a Jetson that you put in your backpack. That being said, the Theta camera will be connected by USB-C to the PC with the JS (PC_JS).

So, to download the WebApp, scroll down to the end of the Render Streaming Wizard window. There you will find an option “Download latest version web app”. Save the file. We suggest creating a “WebAPP Folder” in the Assets, the main reason being that in the future you may want to customize the app, and this way you already have everything organized.

(Now you can close the Render Streaming Wizard window)

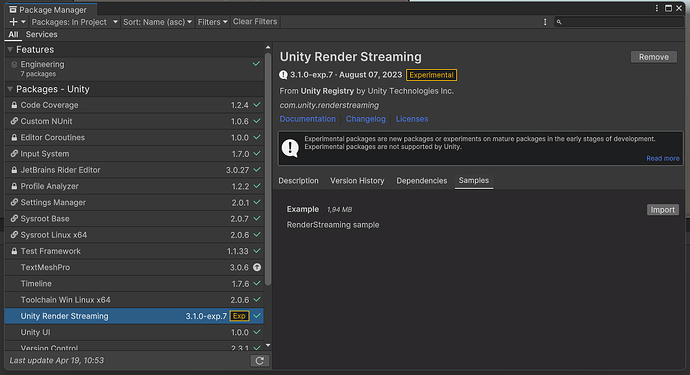

5º- In the Package Manager import the Unity Render Streaming Samples.

Implementing the Theta

At this point, by using any of the samples provided by Unity, you will have a fully working WebRTC communication channel.

So, how can we use this to livestream the 360º live feed from the Theta?

The best way I found was to build upon the Bidirectional Sample. By doing this we can get a stream going in only 2 steps:

1º- Edit the Bidirectional script:

- Force the script to always connect to the same channel ID (useful for automation in the future). In this case, the default channel is going to be 00000.

```csharp
void Awake()
{
    // (...)
    setUpButton.onClick.AddListener(SetUp);
    hangUpButton.onClick.AddListener(HangUp);
    connectionIdInput.onValueChanged.AddListener(input => connectionId = input);

    // Replace this line with the following
    //connectionIdInput.text = $"{Random.Range(0, 99999):D5}";
    connectionIdInput.text = $"{00000:D5}";

    webcamSelectDropdown.onValueChanged.AddListener(index => webCamStreamer.sourceDeviceIndex = index);
    // (...)
}

private void HangUp()
{
    // (...)
    // Comment this line, so the ID channel doesn't change when the call is over;
    //connectionIdInput.text = $"{Random.Range(0, 99999):D5}";
    localVideoImage.texture = null;
}
```

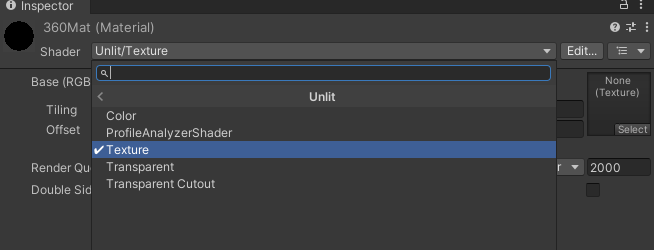
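The literal `00000` in the edit above is just the integer 0, padded to five digits by the `D5` format specifier. If you would rather keep the fixed channel in one place, a minimal sketch could look like the following. The names `FixedConnection`, `ChannelNumber` and `Id` are my own invention and are not part of the Bidirectional sample.

```csharp
// Hypothetical helper, not part of the Unity sample: one place for the fixed channel.
public static class FixedConnection
{
    // The channel number both peers agree on (assumption: 0, as in this post).
    public const int ChannelNumber = 0;

    // "D5" pads with leading zeros to five digits, so 0 becomes "00000".
    public static string Id => ChannelNumber.ToString("D5");
}
```

With that in the project, the line in `Awake()` could become `connectionIdInput.text = FixedConnection.Id;`.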

- Retrieve the camera texture. In this case, we just need to create a variable for the material, and add a line of code to assign the texture to the material.

Note: The material must be Unlit, or the image will be darkened.

```csharp
// Create a new variable for the material
public Material cam360;

void Awake()
{
    // (...)
    receiveVideoViewer.OnUpdateReceiveTexture += texture => remoteVideoImage.texture = texture;

    // Copy the line above and edit it so that the texture is saved in the new material
    receiveVideoViewer.OnUpdateReceiveTexture += texture => cam360.mainTexture = texture;
    // (...)
}
```

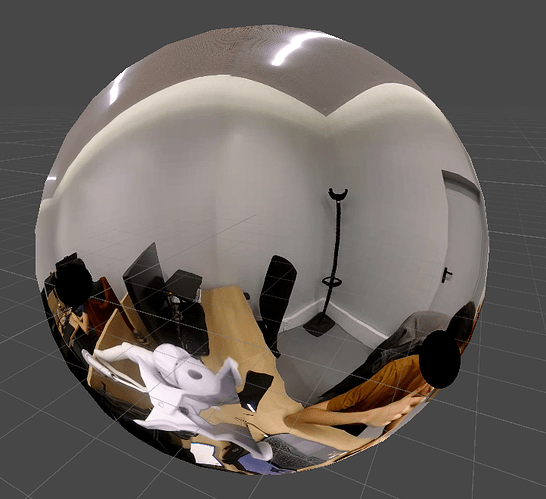
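If you prefer to touch the sample script as little as possible, the same idea can live in its own small component. This is only a sketch: it assumes that `receiveVideoViewer` in the sample is a `VideoStreamReceiver` from the `Unity.RenderStreaming` namespace, and the class name `Theta360Material` is made up for this example. It also clears the texture when disabled, since changes made to a material asset in Play mode can stay on the asset afterwards.

```csharp
using Unity.RenderStreaming;
using UnityEngine;

// Hypothetical component: forwards the received video texture to a material.
public class Theta360Material : MonoBehaviour
{
    [SerializeField] private VideoStreamReceiver receiver; // the receiver used by the sample
    [SerializeField] private Material cam360;               // the Unlit material for the sphere

    private void OnEnable()
    {
        receiver.OnUpdateReceiveTexture += ApplyTexture;
    }

    private void OnDisable()
    {
        receiver.OnUpdateReceiveTexture -= ApplyTexture;
        cam360.mainTexture = null; // don't leave a stale texture on the material
    }

    private void ApplyTexture(Texture texture)
    {
        cam360.mainTexture = texture;
    }
}
```

Both fields are assigned in the Inspector, so the Bidirectional script itself only needs the channel ID change.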

2º- Now that we have a material with the camera livestream, we can apply it to anything we need. In our case, we apply it to a sphere. For that, we must create a sphere and then apply the material to it. We also need to invert the sphere, so that the image is visible from the inside, where the viewer is placed!

```csharp
using UnityEngine;

public class FlipNormals : MonoBehaviour
{
    // The sphere mesh to invert, assigned in the Inspector
    [SerializeField]
    private Mesh mesh;

    // Start is called before the first frame update
    void Start()
    {
        flipNormals();
    }

    private void flipNormals()
    {
        // Point every normal inwards
        Vector3[] normals = mesh.normals;
        for (int i = 0; i < normals.Length; i++)
            normals[i] *= -1;
        mesh.normals = normals;

        for (int i = 0; i < mesh.subMeshCount; i++)
        {
            int[] tris = mesh.GetTriangles(i);
            for (int j = 0; j < tris.Length; j += 3)
            {
                // Swap order of tri vertices
                int temp = tris[j];
                tris[j] = tris[j + 1];
                tris[j + 1] = temp;
            }
            mesh.SetTriangles(tris, i);
        }
    }
}
```
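One thing to watch with the script above: if the `mesh` field points at a shared mesh asset, the flip is applied to that asset itself, so every object using it is affected. A possible variant, sketched below, takes the mesh from the sphere's own `MeshFilter` instead, because `MeshFilter.mesh` returns a copy that belongs to that object only. The class name `FlipSphereInside` is made up, and I'm assuming the script is attached to the sphere.

```csharp
using UnityEngine;

// Hypothetical variant of FlipNormals; attach it to the sphere itself.
[RequireComponent(typeof(MeshFilter))]
public class FlipSphereInside : MonoBehaviour
{
    private void Start()
    {
        // MeshFilter.mesh gives this object its own mesh instance.
        Mesh mesh = GetComponent<MeshFilter>().mesh;

        // Point every normal inwards.
        Vector3[] normals = mesh.normals;
        for (int i = 0; i < normals.Length; i++)
            normals[i] = -normals[i];
        mesh.normals = normals;

        for (int i = 0; i < mesh.subMeshCount; i++)
        {
            int[] tris = mesh.GetTriangles(i);
            for (int j = 0; j < tris.Length; j += 3)
            {
                // Swapping two indices reverses the winding of the triangle.
                int temp = tris[j];
                tris[j] = tris[j + 1];
                tris[j + 1] = temp;
            }
            mesh.SetTriangles(tris, i);
        }
    }
}
```

Use one script or the other, not both, otherwise the sphere gets flipped twice.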

Final Steps

Ok, at this moment you should have a fully working livestream going between the PC_JS and Unity. Although, before testing you need to check two things.

-

The first, and the easiest, you need to confirm that you have the Ricoh Theta UVC installed (download).

-

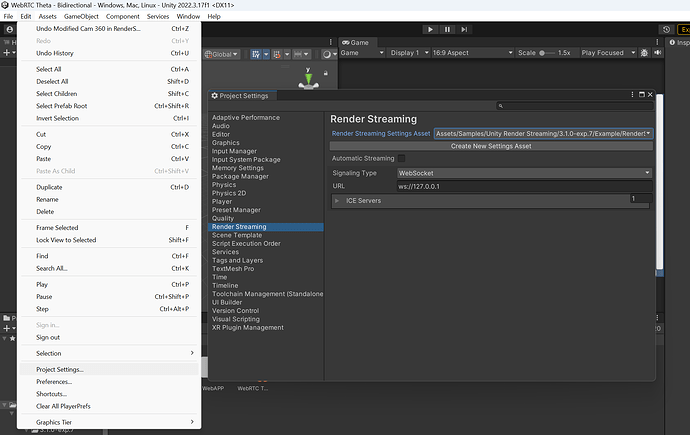

The second can be a little bit trickier, since it involves IP adresses. So, if you run the JS script in the same PC that you run the Unity there will be no problems. If not, you need to specify the signaling server IP. So, how can you do this?

In Unity, you can go to Edit > Project Settings > Render Streaming, in here you will find a URL, it should be something like “ws://127.0.0.1”. That’s the “internal IP of you machine”, it will only work if the JS is executed in the PC with the Unity project.

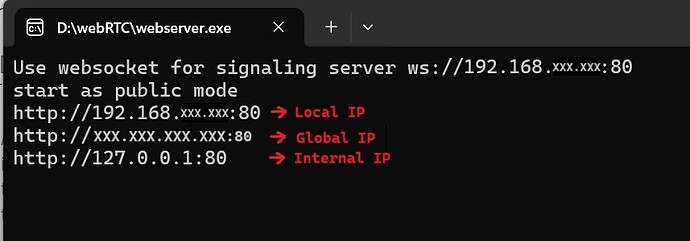

Now, grab the JS script and run it in some other machine, other that the one running Unity. When it starts, the first few lines will be the IP adresses associeted with the server. Here is an example:

If you have the 2 PCs in the same network use the Local IP (it’s better for safety and it should work right out of the box). If you have the PCs in different networks you have to use the Global IP. For both cases you will probably have to configure your firewall to open the port 80. For the Global IP, you will also have to configure the port-forwarding.

IMPORTANTE NOTE: Messing with IPs and all that stuff can be dangerous for people that don’t know what they are doing. Opening ports its always a big security risk. I’m no expert in the matter, so I can’t provide reliable information in this topic. What I can say is that using a VPN is better than using port-forwarding.

Alright, by now you should have a fully operational livestream set up between PC_JS and Unity. However, before diving into testing, there are a couple of key checks to ensure everything runs smoothly.

- Firstly, confirm that you have the Ricoh Theta UVC installed. You can download it from here.

- Secondly, you need to configure the signaling server IP. If you’re running the JS script on the same PC as Unity, you’re all set. However, if not, you’ll need to specify it. Here’s how you can do it:

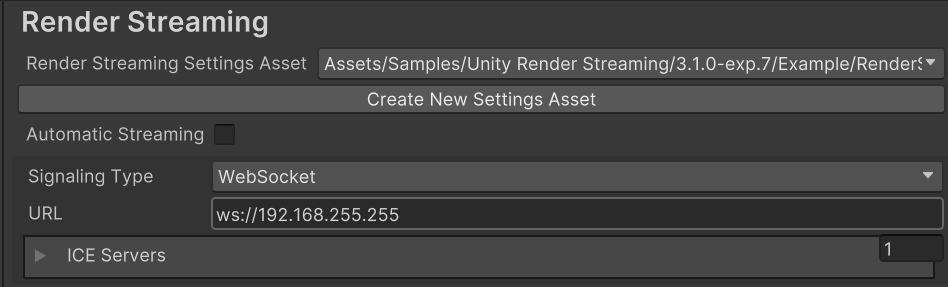

- In Unity, navigate to Edit > Project Settings > Render Streaming. You will find a URL resembling “ws://127.0.0.1”. This is the loopback address of your machine and will work only if the JS script is executed on the same PC as the Unity project.

- Now, run the JS script on a separate machine from the one running Unity. Upon starting, the script will display the server’s associated IP addresses. Here’s an example:

- If both PCs are on the same network, use the Local IP (it’s safer and should work out of the box). If they’re on different networks, opt for the Global IP. In both cases, you may need to configure your firewall to open port 80. For the Global IP, additional configuration like port forwarding is required.

- After choosing your IP, go back to Unity and replace “127.0.0.1” with the IP you picked. Like this:
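To make the replacement concrete, here is a small sketch that builds the URL you would paste into the Render Streaming settings. It is plain C# and not part of the package: `SignalingUrl.Build` is a made-up helper, `192.168.1.20` is only a placeholder for whatever Local IP the JS script printed, and port 80 is the one mentioned in this post.

```csharp
using System;
using System.Net;
using System.Net.Sockets;

// Hypothetical helper, not part of Unity Render Streaming.
public static class SignalingUrl
{
    // Returns a WebSocket URL of the form "ws://<ip>:<port>" for a valid IPv4 address.
    public static string Build(string ip, int port = 80)
    {
        if (!IPAddress.TryParse(ip, out IPAddress address) ||
            address.AddressFamily != AddressFamily.InterNetwork)
        {
            throw new ArgumentException("Expected an IPv4 address such as 192.168.1.20", nameof(ip));
        }
        if (port < 1 || port > 65535)
        {
            throw new ArgumentOutOfRangeException(nameof(port));
        }
        return $"ws://{address}:{port}";
    }
}
```

For example, `SignalingUrl.Build("192.168.1.20")` gives `ws://192.168.1.20:80`, which takes the place of “ws://127.0.0.1” in the settings.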

Important Note: Manipulating IPs and configuring firewalls can pose security risks for those unfamiliar with the process. Opening ports is a significant security concern. I’m not an expert in this field, so I cannot provide further information on this, but I suggest that using a VPN is preferable to port forwarding.

Testing

Now it’s time for testing!

PC_JS

-

Connect the Theta to the PC (

PC_JS); -

Execute the JS script in

PC_JS; -

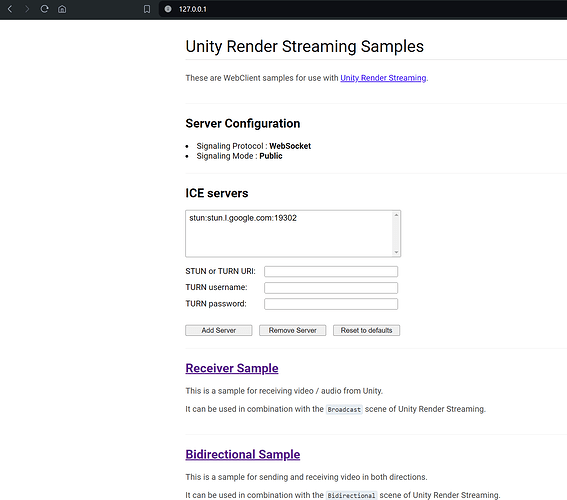

Open the browser in

PC_JSand connect to: http://127.0.0.1. This will connect you to the signaling server.

-

This is the default page (you can change it, we will go over that in a bit). Select the Bidirectional Sample.

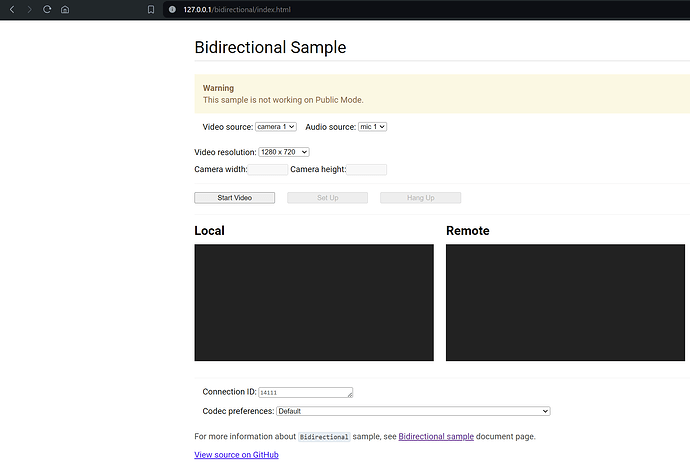

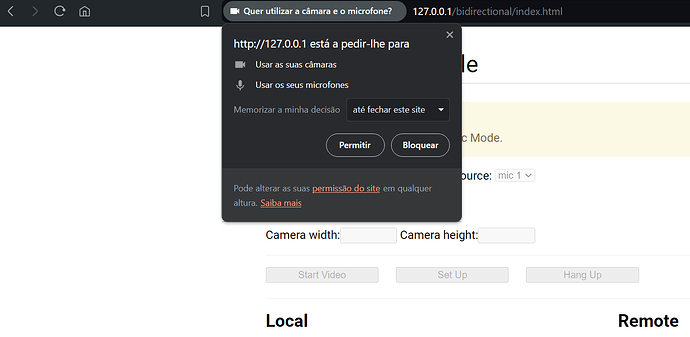

-

If no cameras, and or microphones, are detected the list will only show “camera 1”, and or “mic 1”. If that happens, don’t worry, click “Start Video”. Now you will be asked to give permissions to acesse the camera and the microphone. Allow it and reload the page.

-

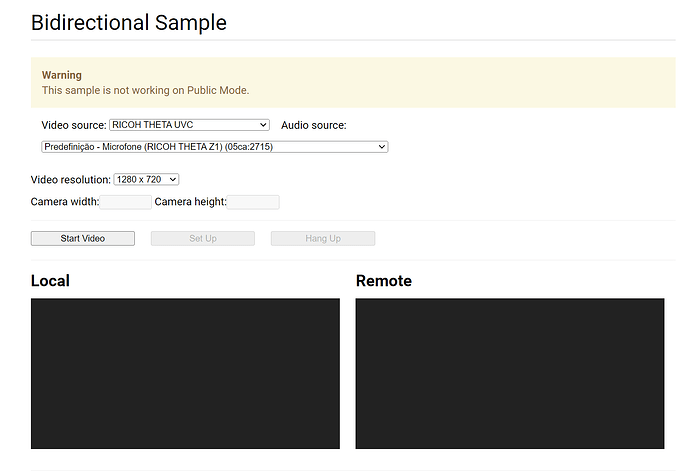

Now the cameras and microphones will display properly.

-

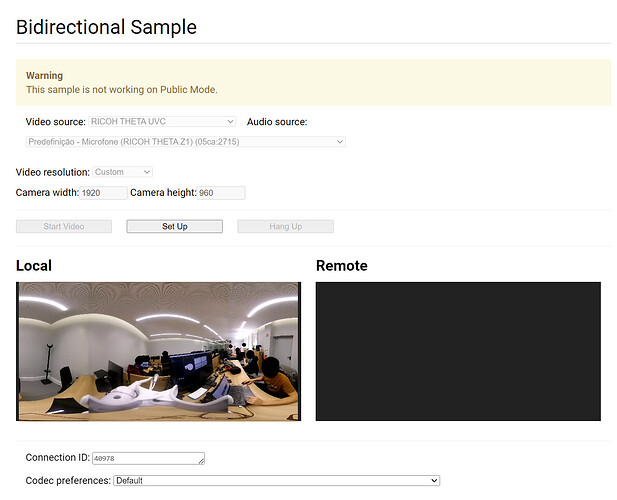

Set the correct resolution (you can pick custom): 1024x512, 1920x960 or 3840x1920.

-

Press “Start Video”, the Local video should start:

-

Now input you Connection ID, if you change the code in Unity, it should be 00000.

-

Press Setup (the connection will not start since we still have to run Unity).

Unity

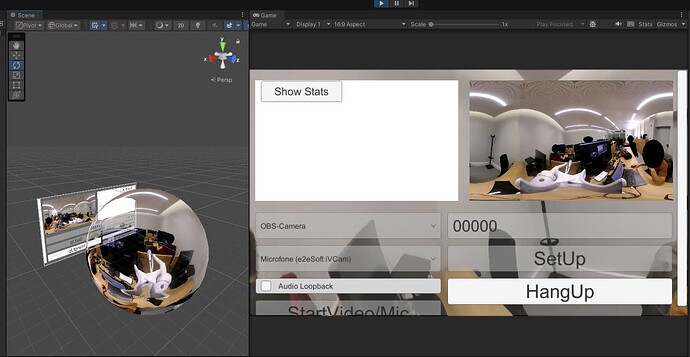

- Press Play;

- Because this is bidirectional, you can pick a camera and a microphone on the Unity side;

- Press Start Video/Mic;

- Now, if you changed the code to force the connection channel 00000, you just need to press SetUp. If not, input your channel and press SetUp.

- Now you can disable the Canvas in the inspector (or add a button to do that) so you can clearly see the sphere.
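For that last step, a keyboard shortcut can be handier than a button, since a button sitting on the Canvas disappears together with it. The sketch below toggles the Canvas with the H key. It assumes the Input System package that Unity asked you to enable during setup; the class name `CanvasToggle` and the choice of key are arbitrary.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical helper: press H to show or hide the sample UI.
public class CanvasToggle : MonoBehaviour
{
    [SerializeField] private Canvas canvas; // the Canvas of the Bidirectional scene

    private void Update()
    {
        Keyboard keyboard = Keyboard.current; // null when no keyboard is connected
        if (keyboard != null && keyboard.hKey.wasPressedThisFrame)
        {
            // Only the Canvas component is toggled, so the UI objects stay active.
            canvas.enabled = !canvas.enabled;
        }
    }
}
```

Attach it to any GameObject in the scene and drag the Canvas into the `canvas` field.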

Final Notes

- Customizing the web application involves JavaScript scripting and HTML. While I’m not an expert in this area, you can find detailed guidance on the Unity page: Customize web application.

- I’m exploring the implementation of this in VR. If anyone needs assistance, feel free to reach out, and I can create a separate post to address specific VR-related queries.

- Once I make progress on customizing the WebApp and adapting the solution to run on a Raspberry Pi or other portable devices, I’ll provide updates in a subsequent post.

I believe I addressed everything in this post, but if someone finds any problems or notices something that I didn’t cover well enough, please let me know so I can update the post.