Introduction

A community member suggested that I would get better results from image enhancement software if I used dual-fisheye images. As the RICOH THETA Stitcher was just released as a standalone stitcher, I thought it was a good time to retest Gigapixel AI and see if I could stitch an enhanced dual-fisheye image.

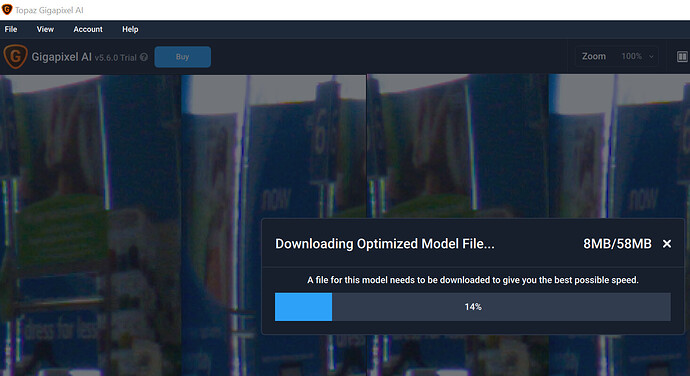

Loading the 47MB DNG file into Gigapixel AI.

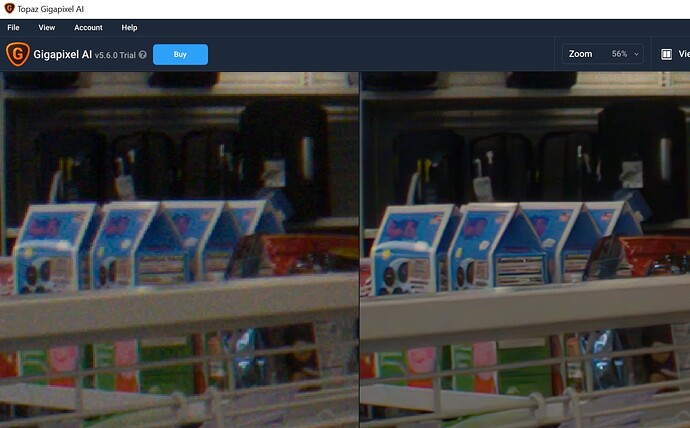

- 4x scale

- remove blur max

- suppress noise max

This required a 58MB download of a model file for the 4x scale.

Significantly clearer.

Some clarity improvements, but also some incorrectly rendered text.

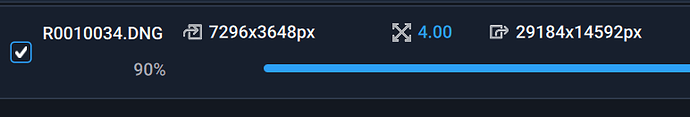

Output Enhanced DNG

Exported as a 260MB JPG image with some lighting artifacts.

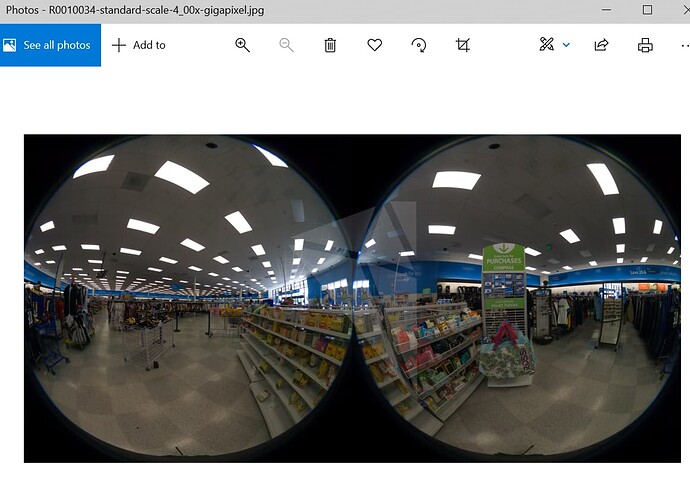

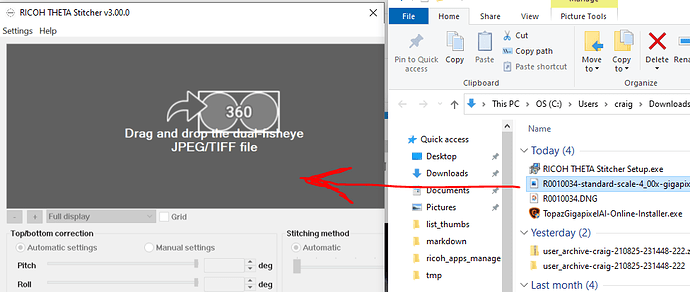

Stitching

Using the new standalone RICOH THETA Stitcher, version 3.0.0, released Aug 24, 2021.

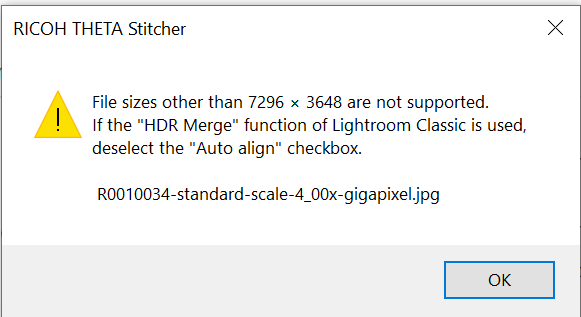

Failed to stitch.

Scale Back Down

Somewhat defeats the purpose. But, let’s see. We’ve come this far.

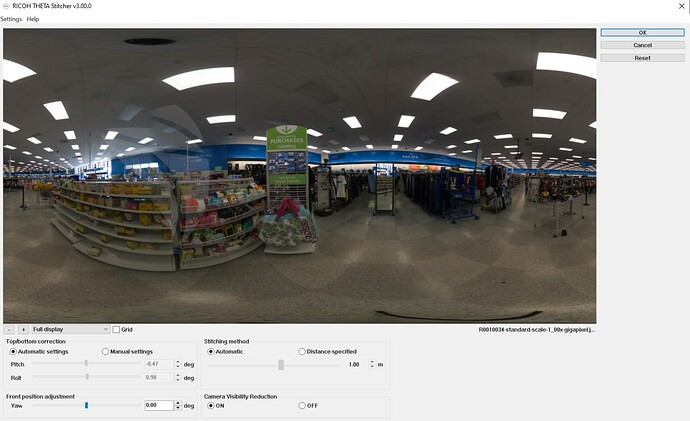

Stitching Again

Some artifacts from lighting.

Summary Results

Gigapixel AI image enhancement shows great promise. However, it is tricky to eliminate the lighting artifacts in my test image. This can likely be improved with practice. The original DNG is here. Please post your results if you can get a better image.

I was not able to stitch the enhanced image with the RICOH THETA Stitcher because the stitcher needs the image to be the original size of the DNG, 7296x3648.
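To make the size mismatch concrete, here is a minimal Python sketch of the arithmetic. It assumes the 4x export is exactly four times the original in each dimension (the exported pixel size is not recorded above). The names `STITCHER_SIZE`, `scaled_size`, and `matches_stitcher_size` are made up for illustration and are not part of any RICOH or Gigapixel AI tool.

```python
from typing import Tuple

Size = Tuple[int, int]

# Original dual-fisheye DNG size reported in this post.
STITCHER_SIZE: Size = (7296, 3648)


def scaled_size(size: Size, factor: int) -> Size:
    """Return (width, height) after upscaling by an integer factor."""
    width, height = size
    return (width * factor, height * factor)


def matches_stitcher_size(size: Size, required: Size = STITCHER_SIZE) -> bool:
    """True when the image has exactly the dimensions the stitcher expects."""
    return size == required


# Assumed size of the 4x enhanced export.
enhanced = scaled_size(STITCHER_SIZE, 4)
print(enhanced)                         # (29184, 14592)
print(matches_stitcher_size(enhanced))  # False
```

Under that assumption the enhanced file would be 29184x14592, which is why it had to be scaled back down to 7296x3648 before the stitcher would accept it.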

As portions of the images look significantly better, it’s worth doing another test in the future.

Updates from the community

Because Gigapixel AI causes artifacts at the zenith and nadir, it’s better to run it on the unstitched fisheye images, then stitch in PTGui.

Gigapixel AI images will probably not be accepted automatically by PTGui, so you’ll have to manually set up your first stitch.

Seems like an inordinate amount of effort to try to make a silk purse.