hi,

PTGui can be used to create a correct, close-to-perfect stitch. The key here is to save that project file and import it into Mistika VR.

Mistika VR stitches the video that I record with my plugin in dual-fisheye mode well AND also adds stabilization. It also supports the H.265 MP4 that comes from my plugin.

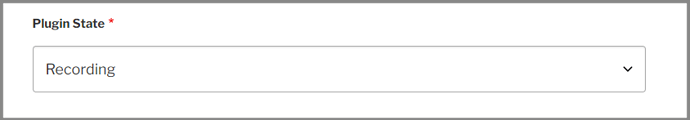

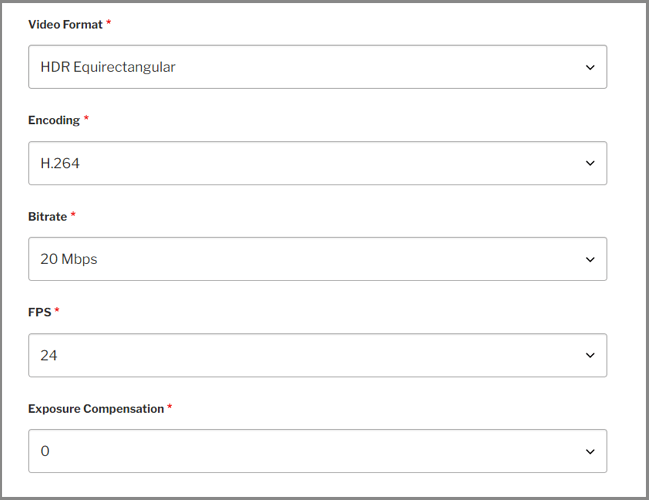

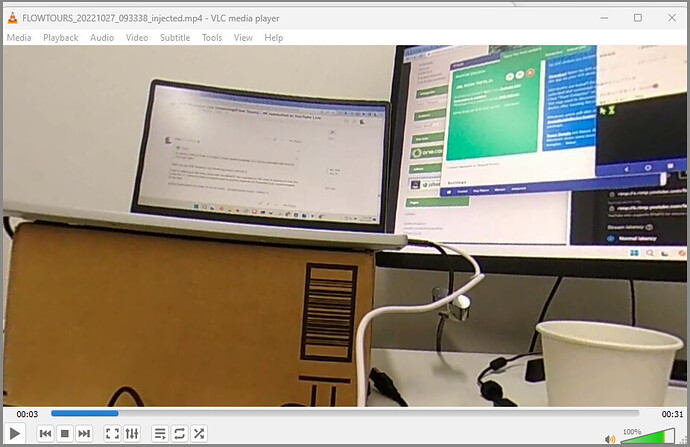

My plugin can save at 24 FPS in equirectangular mode, but the video looks exactly like it does during a live stream. You can set the video bitrate to 50 Mbps in H.265, and then it looks quite good. BUT stitching in camera has issues. I use Ricoh’s algorithm when rendering to equirectangular, but in HDR mode the stitching issue was bigger, so I added some OpenGL operations that modify every frame a bit, and it’s still not that good. I was thinking of implementing a custom stitching mechanism, but 24 FPS still may not be enough for some users.
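To give an idea of what the core of a custom stitching mechanism would involve, here is a rough sketch of the per-pixel lookup from an equirectangular direction to a position in one of the two fisheye images. This is NOT Ricoh’s algorithm and not what my plugin does today. It assumes an ideal equidistant fisheye lens, two lenses pointing exactly opposite to each other, and a made-up field of view (`fov_deg`); the function name is hypothetical. A real stitcher also needs per-lens calibration, parallax handling and blending in the overlap zone.

```python
import math

def equirect_to_fisheye(lon, lat, fov_deg=190.0):
    """Map a view direction (radians) to (lens, nx, ny).

    nx, ny are normalized so that radius 1.0 is the edge of the
    fisheye circle. Assumes an ideal equidistant lens model and an
    assumed field of view; real lenses need calibration data.
    """
    # Unit direction vector: +z is the front lens axis, +y is up.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)

    if z >= 0:
        lens = "front"
    else:
        # The back lens looks along -z, so mirror x and z.
        lens = "back"
        x, z = -x, -z

    # Angle between the direction and the lens optical axis.
    theta = math.acos(max(-1.0, min(1.0, z)))
    # Equidistant projection: radius grows linearly with the angle.
    r = theta / math.radians(fov_deg / 2.0)

    norm = math.hypot(x, y)
    if norm == 0.0:
        return lens, 0.0, 0.0  # exactly on the optical axis
    return lens, r * x / norm, r * y / norm
```

In a shader or a remap table this lookup would run once per output pixel, which is why the cost per frame matters so much for the achievable FPS.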

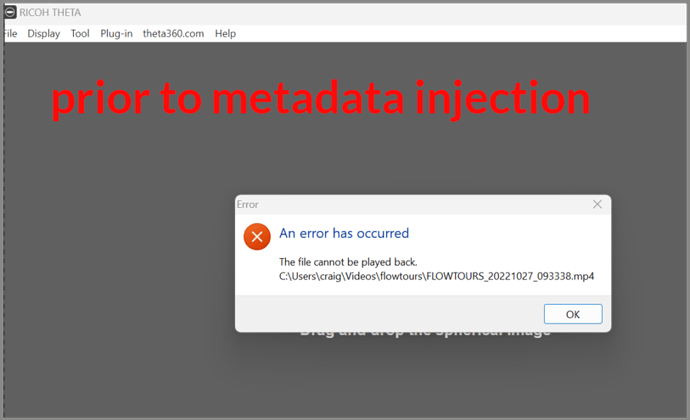

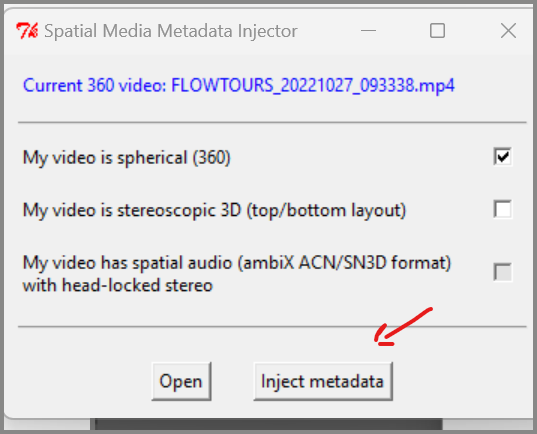

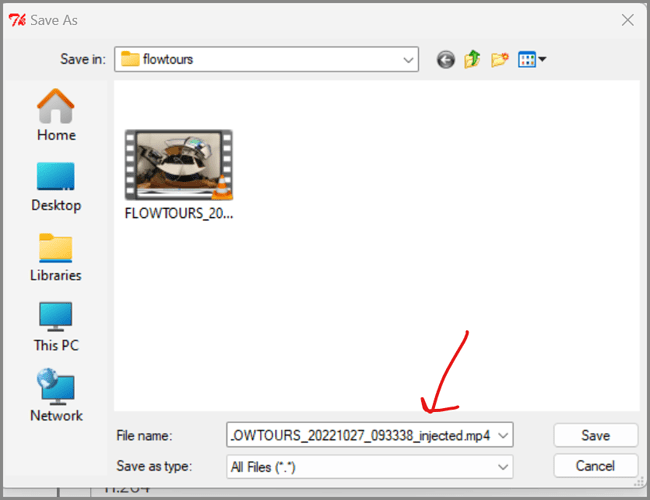

Another option could be to get some details from RICOH about what needs to be stored in the MP4 so that it can be stitched directly with the Theta desktop app. I mean stitching the MP4 that is recorded by my plugin.

I did some investigation, and in theory I could store metadata on the fly, but I’m not sure how that would affect FPS… I could also save gyroscope and accelerometer data, but I’m not sure about the format used by the Theta desktop app. Btw, does it use gyro and accelerometer data? I think yes, since it does some kind of stabilization, doesn’t it?

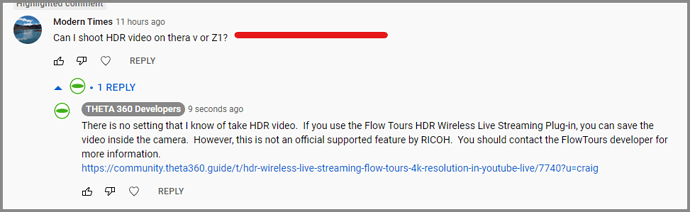

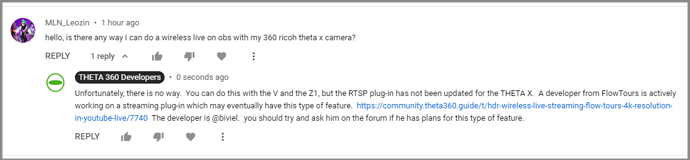

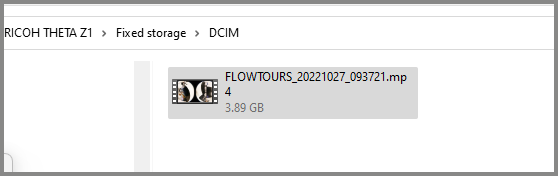

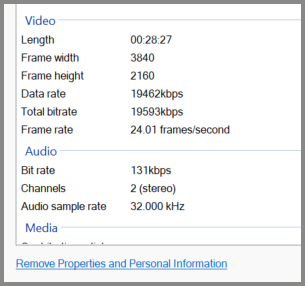

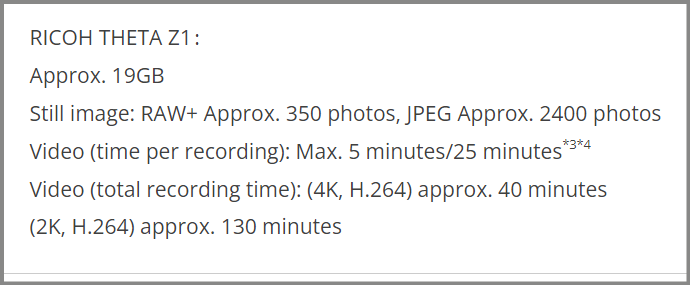

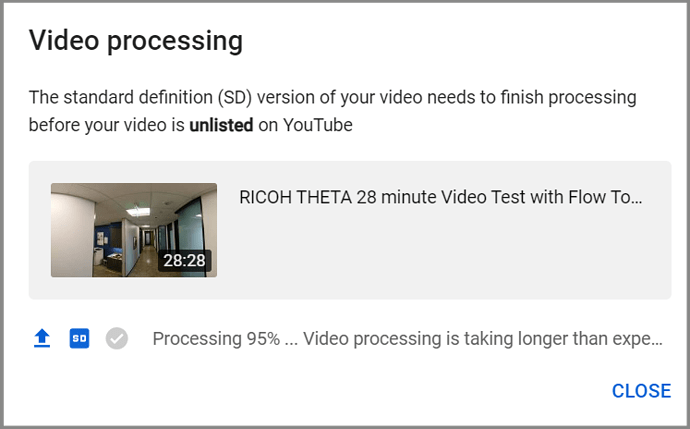

Clearly, with my plugin I’m able to record in HDR mode, which Ricoh’s internal recording can’t do. It’s a huge quality improvement, especially indoors, but also outdoors when the day is not so sunny. I’m also able to record for an hour or even more, especially if H.265 is used for encoding.
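For a rough idea of how much storage an hour-long recording needs, here is a quick back-of-the-envelope calculation. It assumes a constant video bitrate (the 50 Mbps setting mentioned earlier) and ignores audio and container overhead, so treat the result as an estimate only. The function name is just for illustration.

```python
def recording_size_gb(bitrate_mbps, duration_s):
    """Approximate file size in GB (10^9 bytes) for a constant bitrate."""
    bits = bitrate_mbps * 1_000_000 * duration_s
    return bits / 8 / 1_000_000_000

# One hour at 50 Mbps
one_hour_gb = recording_size_gb(50, 3600)
print(f"{one_hour_gb:.1f} GB")
```

So an hour at 50 Mbps comes out at around 22.5 GB, and a lower bitrate scales that down proportionally.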

The problem with Mistika VR is that it costs 60-80 USD per month, and the process is also a bit complex the first time. I tried PTGui and was able to get quite good results…