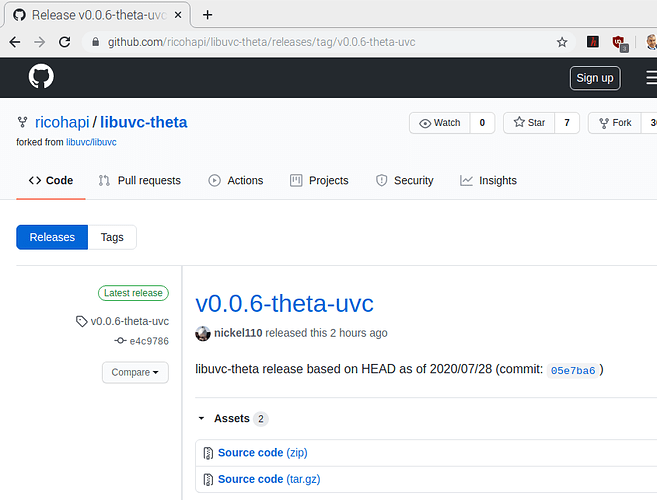

v0.0.6-theta-uvc was released 2 hours ago. I am trying to compile it on a Raspberry Pi and will report back.
