Thank you, it works. Note that libusb needs to be a version above 1.0.9.

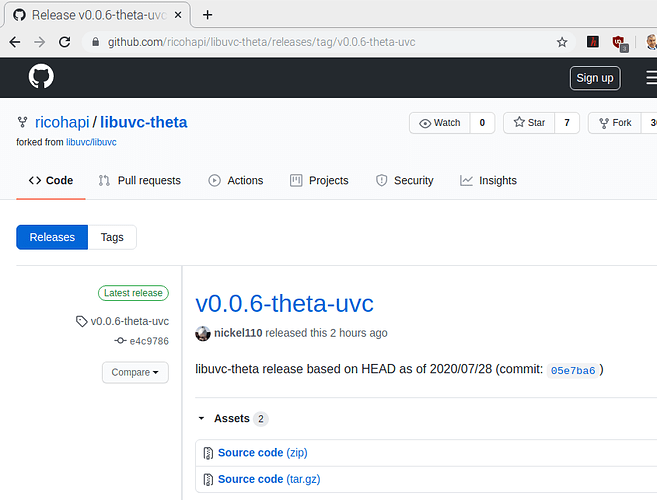

v0.0.6-theta-uvc released 2 hours ago. I am trying to compile on a Raspberry Pi. I will report back.

Thanks, I have tried it. It works.

By the way, can I get live streaming over USB on Android?

I want to try controlling the THETA V over USB on Android.

Thank you for uploading the video. It works for me now. The issue earlier was that I built the master branch instead of theta_uvc.

Also, I would like to ask if it is possible to make the Ricoh camera be detected as a video device. In that way, I can directly use it in ROS, OpenCV, or other robotics/computer vision applications.

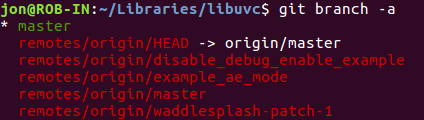

Hi, I didn’t write the sample code or the patch, but I’ve seen the THETA Z1 accessed from /dev/video0 on Linux. I’m trying to get this to work myself. I’ll share what I know and let’s work together on a solution that we can post here.

I’ve seen the following work on Linux:

- gst-launch-1.0 from command line accessing /dev/video0

- Yolov3

- openpose

Here’s what I’ve seen work but can’t get to work myself.

Note that omxh264dec is specific to NVIDIA Jetson. x86 needs to use vaapih264dec or msdkh264dec (I think. I don’t have it working)

sudo modprobe v4l2loopback

python3 thetauvc.py | gst-launch-1.0 fdsrc ! h264parse ! queue ! omxh264dec ! videoconvert ! video/x-raw,format=I420 ! queue ! v4l2sink device=/dev/video0 sync=true -vvv

./darknet detector demo cfg/coco.data cfg/yolov2.cfg yolov2.weights

in openpose/build/examples/openpose

openpose.bin --camera 0
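Since the decoder element is the only platform-specific part of the pipeline above, here is a minimal Python sketch that assembles the same gst-launch-1.0 command with the decoder swapped per platform. The platform keys and the function name are my own labels, not part of the sample code, and the x86 decoder names are the untested guesses from the note above.

```python
# Decoder element names come from the note above. Only the Jetson entry
# was reported as working; the x86 entries are untested guesses.
DECODERS = {
    "jetson": "omxh264dec",
    "x86-vaapi": "vaapih264dec",
    "x86-msdk": "msdkh264dec",
}


def loopback_pipeline(platform, device="/dev/video0"):
    """Build the gst-launch-1.0 command line that feeds a v4l2loopback device."""
    elements = [
        "fdsrc",
        "h264parse",
        "queue",
        DECODERS[platform],
        "videoconvert",
        "video/x-raw,format=I420",
        "queue",
        f"v4l2sink device={device} sync=true",
    ]
    return "gst-launch-1.0 " + " ! ".join(elements)


if __name__ == "__main__":
    # Print the command for a Jetson so it can be pasted after "thetauvc.py |".
    print(loopback_pipeline("jetson"))
```

Pass the loopback node that v4l2loopback created on your system as `device`; it is not necessarily /dev/video0.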

I’m now working on an x86 Linux. I was able to build the driver and demo on a Raspberry Pi 3, but I was not able to run the demo. It would hang with no error messages. It might be possible with a Raspberry Pi 4.

I am going through the information for Intel Video and Audio for Linux to try and get a better fundamental understanding.

I don’t know what v4l2loopback does, but I’ve built the kernel module and I’ve installed it.

It did create a video device on my system. The first device is my webcam.

$ ll /dev/video*

crw-rw----+ 1 root video 81, 0 Jul 28 18:21 /dev/video0

crw-rw----+ 1 root video 81, 1 Jul 30 10:21 /dev/video1
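The same check can be done from Python, which is handy if you want a script to pick the loopback node automatically. This is a small sketch using only the standard library; the function name is my own.

```python
import glob
import os
import re


def video_devices(dev_dir="/dev"):
    """Return the videoN nodes under dev_dir, sorted by device number."""
    nodes = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:
            nodes.append((int(match.group(1)), path))
    return [path for _, path in sorted(nodes)]


if __name__ == "__main__":
    for node in video_devices():
        print(node)
```

It only lists the nodes; it does not tell you which one belongs to the webcam and which one to v4l2loopback.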

This section from the README.md of the v4l2loopback site seems promising, but I don’t have it working at the moment.

the data sent to the v4l2loopback device can then be read by any v4l2-capable application.

If you get something working, please post. It looks super-promising, but I can’t access it from a /dev/video at the moment.

Hi, thanks for the reply. I will take a look into it and see if I can make it work.

First of all, great work everyone, I am loving these forums.

I have a Raspberry Pi 4, a Jetson Nano, and a Jetson Xavier.

I would like to stream video from my Ricoh THETA V or Z1 to these devices and apply CV, TensorFlow, custom code, etc.

Ideally the video would just show up as /dev/video0, so frames can be easily grabbed in my Python CV environment. I can do this on Windows, but the maximum resolution seems to be 1920x1080. I cannot do it at all on these devices. I got very excited to see the announcement of the Linux driver on July 29th. I would like to organize notes here and help put together an easy-to-follow guide on how to get this to work. I think this would open up a world of possibilities for the broader community. So I have the following questions:

- Should I even attempt this on my Raspi 4?

- Has anyone gotten the Ricoh THETA V/Z1 to show up as /dev/video0?

- Has anyone been able to grab 4K frames in a Python CV environment on any OS?

If we can get this done together, I will spend time organizing an easy-to-follow tutorial with appropriate credit to anyone who helped.

Hi Craig, I got pretty far along on my RPi 4 until the make in gst. I get the following error:

pi@raspberrypi:~/Documents/ricoh/libuvc/libuvc-theta-sample/gst $ pwd

/home/pi/Documents/ricoh/libuvc/libuvc-theta-sample/gst

pi@raspberrypi:~/Documents/ricoh/libuvc/libuvc-theta-sample/gst $ ls

gst_viewer.c Makefile thetauvc.c thetauvc.h

pi@raspberrypi:~/Documents/ricoh/libuvc/libuvc-theta-sample/gst $ make

Package gstreamer-app-1.0 was not found in the pkg-config search path.

Perhaps you should add the directory containing `gstreamer-app-1.0.pc'

to the PKG_CONFIG_PATH environment variable

No package 'gstreamer-app-1.0' found

cc -c -o gst_viewer.o gst_viewer.c

gst_viewer.c:38:10: fatal error: gst/gst.h: No such file or directory

#include <gst/gst.h>

^~~~~~~~~~~

compilation terminated.

make: *** [<builtin>: gst_viewer.o] Error 1

I got it to work! But the latency on my Raspi is about 20 seconds.

I installed some of the gstreamer stuff.

$ sudo apt-get update -y

$ sudo apt-get install -y libgstreamer1.0-dev

$ sudo apt install gstreamer1.0-plugins-bad gstreamer

$ sudo apt install libopenal-dev libsndfile1-dev libgstreamer1.0-dev libsixel-dev gstreamer1.0-plugins-bad gstreamer1.0-plugins-base libgstreamer-plugins-base1.0-dev libgstreamer-plugins-bad1.0-dev

$ sudo apt-get install libgstreamer-plugins-bad1.0-dev gstreamer1.0-plugins-good

@Jaap This is great!! I know @codetricity was testing on a Raspberry Pi 3, and I think was having some issues. This looks like good info on gstreamer.
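If you want to confirm that the development packages are visible before running make again, a quick check along these lines may help. It is only a sketch: it shells out to pkg-config with the `--exists` flag and uses the module name from the error message above. The function name is my own.

```python
import shutil
import subprocess


def missing_pkg_config_modules(modules):
    """Return the modules pkg-config cannot find (all of them if pkg-config is absent)."""
    if shutil.which("pkg-config") is None:
        return list(modules)
    missing = []
    for module in modules:
        # "pkg-config --exists" exits with status 0 only when the module is found.
        result = subprocess.run(["pkg-config", "--exists", module])
        if result.returncode != 0:
            missing.append(module)
    return missing


if __name__ == "__main__":
    # gstreamer-app-1.0 is the module named in the make error above.
    print(missing_pkg_config_modules(["gstreamer-app-1.0"]))
```

An empty list means pkg-config can see the module, so the "No package 'gstreamer-app-1.0' found" error should no longer appear.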

Thank you, I got the Z1 to stream as well on the Raspi 4. The delay seems to be the same, more than 20 seconds. I will try my Jetson Nano tomorrow. The camera is not showing up under /dev/video0. I wonder how I can grab frames and bring them into Python to start computer vision applications. Any advice?

It may be that the Jetson Nano has more memory or other hardware advantages compared to the Raspberry Pi 4. I don’t know this, I’m just guessing. I’d be interested to hear how it goes with your tests and if there’s an improvement in the latency.

I was unable to use libuvc-theta and libuvc-theta-sample on the Raspberry Pi 3. The platform is too slow. What was the configuration of your Raspberry Pi 4? How much RAM?

I have seen it running on the Jetson Nano with a single 4K live stream with low latency.

I have seen the demo running on Jetson Xavier with two 4K streams simultaneously.

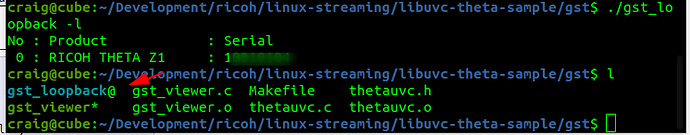

There was an update to the README about a gst_loopback file and an update to the code.

gst/gst_loopback

Feed decoded video to the v4l2loopback device so that v4l2-based application can use THETA video without modification.

CAUTION: gst_loopback may not run on all platforms, as decoder to v4l2loopback pipeline configuration is platform dependent,

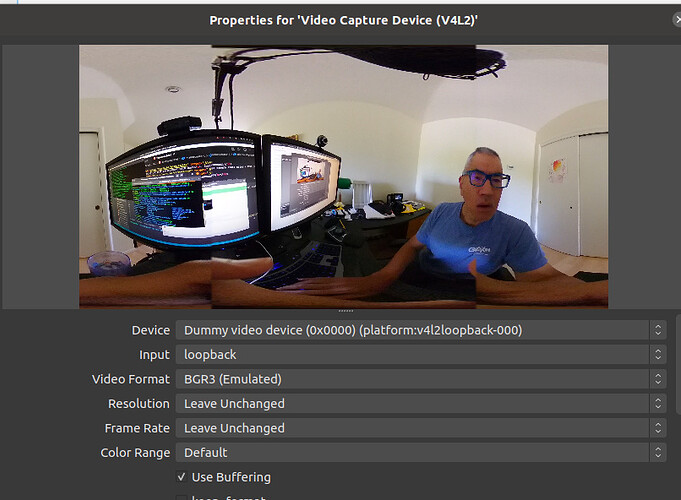

Examples with VLC and OBS on /dev/video*

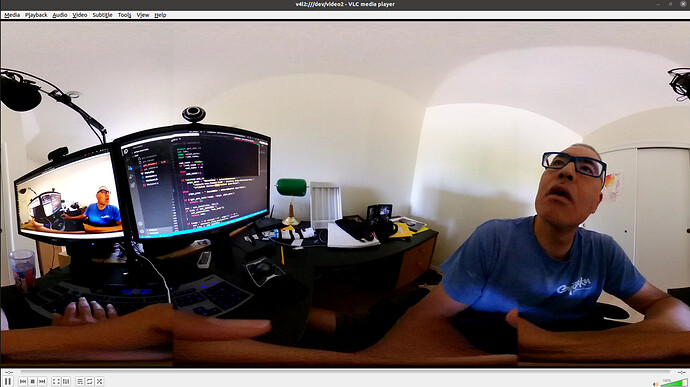

I am having issues with the frames stopping, but at least I have the video stream working. I think I need to specifically set the framerate and resolution. I’ll keep looking into this. If anyone has it working smoothly with something like OpenCV, please post your settings.
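For anyone who wants to try OpenCV against the loopback device, here is a rough Python sketch of what I would start from. It assumes the opencv-python package is installed and that gst_loopback is already feeding the device. The device path, the 3840x1920 size, and the 30 fps value are assumptions to adjust, and the function names are my own. I have not confirmed that this avoids the frame stalls.

```python
import re


def device_index(path):
    """Extract the numeric index from a node such as /dev/video2."""
    match = re.fullmatch(r"/dev/video(\d+)", path)
    if match is None:
        raise ValueError(f"not a V4L2 device path: {path}")
    return int(match.group(1))


def grab_frames(path="/dev/video2", width=3840, height=1920, fps=30, count=10):
    """Read up to `count` frames from a V4L2 device and return them as a list."""
    import cv2  # imported here so device_index() works without OpenCV installed

    cap = cv2.VideoCapture(device_index(path), cv2.CAP_V4L2)
    if not cap.isOpened():
        raise RuntimeError(f"could not open {path}")
    # These are requests only; the driver may keep its own settings.
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, width)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, height)
    cap.set(cv2.CAP_PROP_FPS, fps)
    frames = []
    try:
        for _ in range(count):
            ok, frame = cap.read()
            if not ok:
                break  # stream stalled or ended
            frames.append(frame)
    finally:
        cap.release()
    return frames


if __name__ == "__main__":
    frames = grab_frames()
    print(f"grabbed {len(frames)} frames")
```

If fewer frames come back than requested, the read failed partway through, which would match the stalls described above.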

running with VLC on /dev/video2

running with OBS

update 2018 Aug 17

I heard from the developer that it may be easier to get the v4l2loopback working with an iGPU or with an NVIDIA Jetson. These are the two things he’s tested. I just ordered a Nano to test the loopback with the Nano.

I’m also trying to organize information on using the driver. I’ll put some articles that I’m looking at:

Online Meetup to Discuss Linux Live Streaming

Sept 1, 10am PDT

Signup

https://theta360developers.github.io/linux-streaming-meetup/

Agenda

- Introduce Oppkey, .guide, THETA camera

- THETA Live Streaming in General

- THETA Live Streaming on Linux

- USB cable

- Wi-Fi RTSP

- Wi-Fi motion JPEG

- libuvc explanation

- gst-streamer demo (already built) and explanation

- v4l2

- v4l2loopback and /dev/video*

- Current status problems and welcome contributions

- Does not work on Raspberry Pi 3. Raspberry Pi 4 shows 20 second latency

- Frames hang after about 100 to 200 frames on /dev/video*


I’ll be running the online meetup and will be presenting both a good overview of the tools and techniques immediately available, plus digging down into specifics around the newly available libuvc library and what that means for THETA developers.

If you’re already working with live streaming or you’re just interested in finding out what’s possible, this will be a great learning opportunity.

Also, we’ll provide ample opportunity to ask questions and talk about your current setup, if you’re interested in sharing. Let’s all join in and help grow the community!


Watching the replay right now. Nice view! Sorry I missed it live!

Okay, I am going to loop through my various Linux environments to see what works. I will keep editing this post. I am using the Ricoh Theta Z1 for all of these tests. My Ricoh Theta V doesn't turn on anymore and I have a hard time getting it to charge (this is an issue with the V that needs to be acknowledged by Ricoh).

VM (VMware)

Ubuntu 64-bit (18.04.5), Intel(R) Core™ i5-8250U CPU @ 1.60GHz

This would allow quick prototyping on an existing Windows or Mac machine. I went through the steps and installed everything successfully, but I get the following error when running ./gst_viewer:

./gst_viewer: error while loading shared libraries: libuvc.so.0: cannot open shared object file: No such file or directory

Raspberry Pi 4 Model B

Rev 1.1, 4 GB version, Debian 10.4 (Buster).

I got the ptc_viewer to run, but the delay is more than 30 seconds, at low frame rates. I know there is an 8 GB RAM version. I am not sure if that will make a difference. I think live USB streaming on any Pi might be a bit too much!

NVIDIA Jetson Nano

Ubuntu 18.04, 4 GiB RAM, NVIDIA Tegra X1, 64 bit

This is the Nano with the DLINANO Deepstream image installed. This worked well, although I initially got this error:

dlinano@jetson-nano:~/Documents/ricoh/libuvc/libuvc-theta-sample/gst$ ./gst_viewer

./gst_viewer: error while loading shared libraries: libuvc.so.0: cannot open shared object file: No such file or directory

I was able to resolve this with the following command. I will try this on my VM too, since I got the same error there.

sudo /sbin/ldconfig -v
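To check whether the library can be located before and after running ldconfig, something like the following Python sketch could be used. It relies on `ctypes.util.find_library` from the standard library, which on Linux consults tools such as ldconfig. The library name "uvc" is inferred from libuvc.so.0 in the error message, and the function name is my own.

```python
from ctypes.util import find_library


def library_visible(name):
    """Return True if a shared library named lib<name> can be located."""
    return find_library(name) is not None


if __name__ == "__main__":
    if library_visible("uvc"):
        print("libuvc found:", find_library("uvc"))
    else:
        print("libuvc not found; try running: sudo /sbin/ldconfig -v")
```

This only reports whether the library is found. It does not guarantee that gst_viewer itself will load it.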

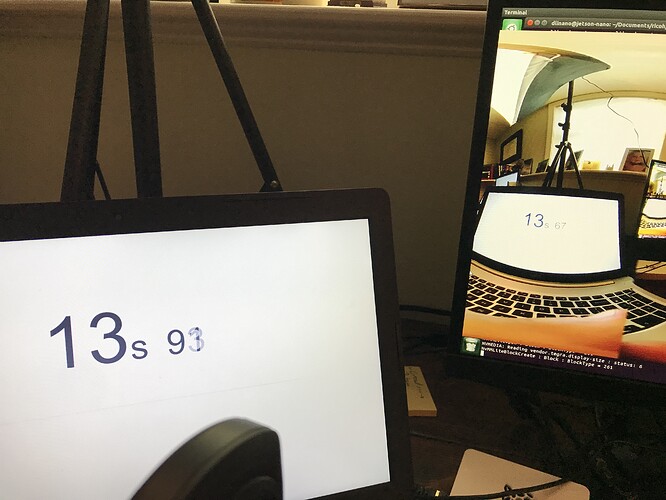

The delay is negligible. Live streaming a stopwatch, I measure about 260 ms of delay.

NVIDIA AGX XAVIER

Ubuntu 18.04, specs

I got everything installed:

jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ ./gst_viewer

start, hit any key to stop

Opening in BLOCKING MODE

NvMMLiteOpen : Block : BlockType = 261

NVMEDIA: Reading vendor.tegra.display-size : status: 6

NvMMLiteBlockCreate : Block : BlockType = 261

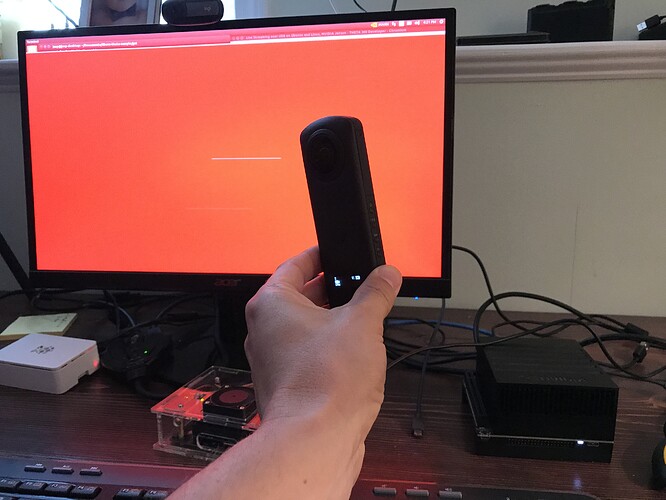

However, I don’t see the streamed feed appear. Instead, I see a red screen with some small stripes across it.

For the current session, you can set the library path with

export LD_LIBRARY_PATH=/lib:/usr/lib:/usr/local/lib

To make it permanent,

add the path to /etc/ld.so.conf (or to a file under /etc/ld.so.conf.d/) if it isn’t already there, then run ldconfig.

Though, it appears you have that sorted out.

Like you, I have the streamed feed on NVIDIA Jetson Nano. I am making a video about this process now.

Update: I have the THETA streaming over /dev/video* with low latency.

I’ll make a video first of the process, then add some additional explanation of the problem with the gstreamer vaapi plugin and the hardware decoder of the nvidia GPU with nvidia proprietary driver.

On Jetson Xavier, GStreamer’s automatic plugin selection does not seem to work well. Replacing

“decodebin ! autovideosink sync=false”

with

“nvv4l2decoder ! nv3dsink sync=false”

should solve the problem.
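As an illustration of that substitution, here is a small Python sketch that swaps the tail of a pipeline description string. The two tail strings are the ones quoted above. The pipeline prefix in the example is hypothetical, and the actual string in the sample source may differ slightly, which is why the function raises an error instead of silently doing nothing.

```python
# Pipeline tails quoted in the post above.
AUTO_TAIL = "decodebin ! autovideosink sync=false"
XAVIER_TAIL = "nvv4l2decoder ! nv3dsink sync=false"


def use_xavier_decoder(pipeline):
    """Replace the auto-selected decoder and sink with the Jetson Xavier elements."""
    if AUTO_TAIL not in pipeline:
        raise ValueError("pipeline does not contain the expected decodebin tail")
    return pipeline.replace(AUTO_TAIL, XAVIER_TAIL)


if __name__ == "__main__":
    # Hypothetical pipeline prefix, used only to demonstrate the swap.
    example = "appsrc name=src ! h264parse ! " + AUTO_TAIL
    print(use_xavier_decoder(example))
```

In practice the change is made by editing the pipeline string in the sample source and rebuilding.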