Hello everyone,


I am trying to set up a Ricoh Theta V on a Jetson Xavier over USB so that I can use it with ROS and OpenCV. My setup:

- ROS Melodic

- Ubuntu 18.04

- Jetson Xavier

- OpenCV 4.5.3

So far:

- ptpcam - is working

- libuvc-theta - is working

- libuvc-theta-sample - gst_viewer works, but gst_loopback fails

gst_loopback


gst_viewer works well, but when I run gst_loopback I get the following error:

```
start, hit any key to stop
Error: Internal data stream error.
stop
```

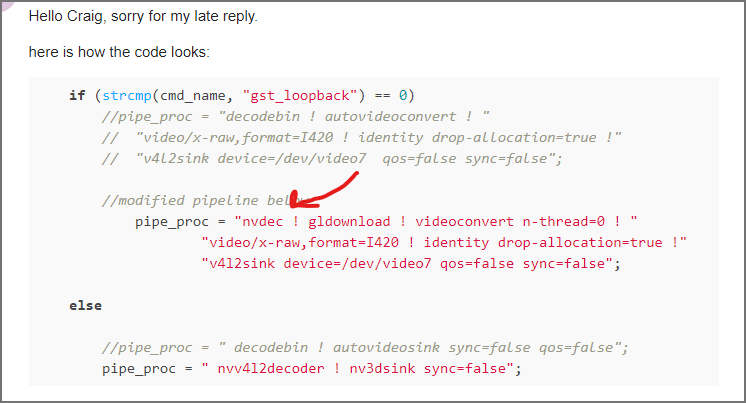

In gst_viewer.c I changed the pipeline as follows, but the error persists:

```c
if (strcmp(cmd_name, "gst_loopback") == 0) {
    // original pipeline
    // pipe_proc = "decodebin ! autovideoconvert ! "
    //     "video/x-raw,format=I420 ! identity drop-allocation=true ! "
    //     "v4l2sink device=/dev/video2 qos=false sync=false";

    // modified pipeline (videoconvert's property is n-threads, not n-thread)
    pipe_proc = "nvdec ! gldownload ! videoconvert n-threads=0 ! "
        "video/x-raw,format=I420 ! identity drop-allocation=true ! "
        "v4l2sink device=/dev/video2 qos=false sync=false";
} else {
    // pipe_proc = " decodebin ! autovideosink sync=false qos=false";
    pipe_proc = "nvv4l2decoder ! nv3dsink sync=false";
}
```
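The original and modified loopback pipelines differ only in their decoder chain; the sink side (I420 caps, `identity drop-allocation=true`, `v4l2sink`) is the same. While experimenting, one option is to build the string once and swap only the decoder part. This is a minimal sketch using a hypothetical helper, `build_loopback_pipeline`, which is not part of gst_viewer.c; it only assembles the string and does not by itself fix the error.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: assemble a gst_loopback-style pipeline from a decoder
 * chain and the v4l2sink device node, so the sink side stays identical while
 * the decoder is swapped. Returns 0 on success, -1 if `buf` is too small. */
static int build_loopback_pipeline(char *buf, size_t len,
                                   const char *decoder_chain,
                                   const char *device)
{
    int n = snprintf(buf, len,
                     "%s ! video/x-raw,format=I420 ! "
                     "identity drop-allocation=true ! "
                     "v4l2sink device=%s qos=false sync=false",
                     decoder_chain, device);
    /* snprintf returns the length it wanted to write; >= len means truncation. */
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

In gst_viewer.c this could be used as `static char buf[256]; if (build_loopback_pipeline(buf, sizeof buf, "decodebin ! autovideoconvert", "/dev/video2") == 0) pipe_proc = buf;`. The buffer is `static` because `pipe_proc` must stay valid for as long as the pipeline string is used.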

ROS and OpenCV


To use the Ricoh Theta V with ROS, I installed ROS Melodic and OpenCV 4.5.3 (currently the latest version). Additionally, I tried the following packages and plugins:

- gscam

- gstreamer

- cv_camera - didn’t work for me

- libuvc_camera - didn’t work for me

- video_stream_opencv - I will test it once gst_loopback works; it works well on the NUC, so it looks promising for the Jetson Xavier

- ros_deep_learning - still in progress

Ricoh Theta V


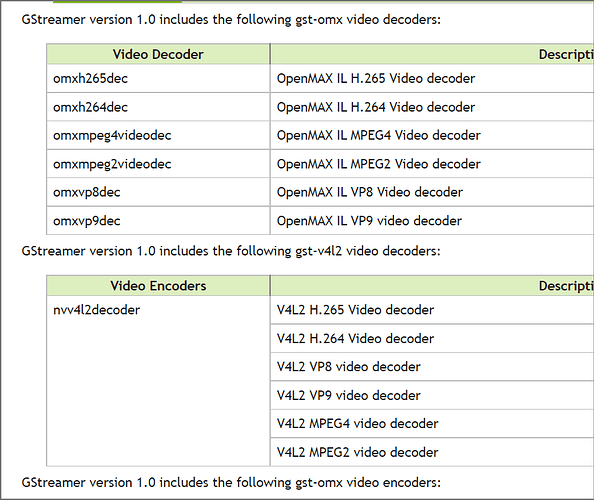

The Ricoh Theta V streams H.264 video over UVC 1.5.
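Because the camera delivers H.264 rather than raw frames, every pipeline above starts with a decoder. Assuming the frames reach GStreamer as an Annex B byte stream (NAL units separated by `00 00 01` or `00 00 00 01` start codes), a quick sanity check is to list the NAL unit types in a frame: 7 is an SPS, 8 a PPS, 5 an IDR slice and 1 a non-IDR slice. A decoder generally cannot output pictures until it has seen an SPS, a PPS and an IDR slice. The helper `h264_nal_types` below is hypothetical and only illustrates the byte-stream layout.

```c
#include <stddef.h>
#include <stdint.h>

/* Scan an H.264 Annex B byte stream and record the nal_unit_type of each NAL
 * unit that follows a 3-byte (00 00 01) or 4-byte (00 00 00 01) start code.
 * Stores at most max_types entries in `types`; returns the total count found. */
static size_t h264_nal_types(const uint8_t *data, size_t len,
                             uint8_t *types, size_t max_types)
{
    size_t count = 0;
    size_t i = 0;
    while (i + 3 < len) {  /* need 00 00 01 plus one NAL header byte */
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
            uint8_t type = data[i + 3] & 0x1F;  /* low 5 bits of the NAL header */
            if (count < max_types)
                types[count] = type;
            count++;
            i += 4;  /* skip the start code and the header byte */
        } else {
            i++;
        }
    }
    return count;
}
```

A 4-byte start code is handled by the same check, since its last three bytes form a 3-byte start code.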

Notes


I will keep working on this and post updates. If anyone knows how to fix this, or has experience with the Ricoh Theta V, Jetson Xavier, and ROS, please share; any input is welcome.