Nice!!! Way to go. Thanks for the report back. Very motivational.

I tested the Nano with OpenCV Python.

This test script works. I have v4l2loopback installed. I will document more tomorrow. I think I made some video screen captures of the process to get it working. I say, “think,” because I decided to use gstreamer to make the screen captures and I’m not that familiar with gstreamer.

    import numpy as np
    import cv2

    cap = cv2.VideoCapture(0)

    while True:
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            # No frame was returned, so stop instead of passing None to cvtColor
            break

        # Our operations on the frame come here
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Display the resulting frame
        cv2.imshow('frame', gray)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # When everything is done, release the capture
    cap.release()
    cv2.destroyAllWindows()

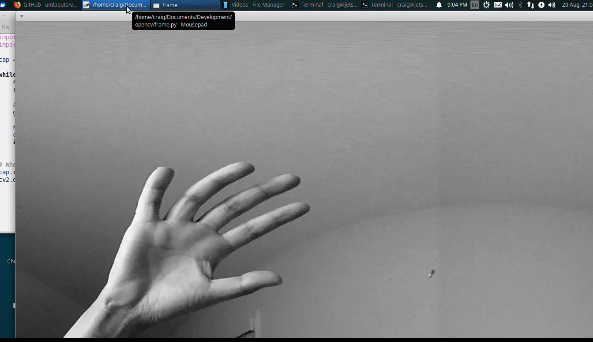

This is a frame from the output of cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) running on the video from a THETA V. It’s a big frame and this is only a small part of the shot.
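Since the frame is too big to show in full, one option is to shrink it before display. Here is a rough sketch of a helper that works out a smaller size while keeping the aspect ratio. The name `preview_size` and the default `max_width` of 1280 are my own placeholders, not something from the test script, and the 3840x1920 figure in the comment assumes the camera is streaming in 4K.

```python
def preview_size(width, height, max_width=1280):
    """Return (width, height) scaled down to fit max_width, keeping aspect ratio.

    Hypothetical helper for illustration; sizes are in pixels.
    """
    if width <= max_width:
        # Already small enough, leave it alone
        return width, height
    scale = max_width / width
    return max_width, max(1, round(height * scale))


# Assumed example: a 3840x1920 frame scaled to fit a 1280 pixel wide window
print(preview_size(3840, 1920))
```

In the capture loop, something like `cv2.resize(gray, preview_size(gray.shape[1], gray.shape[0]))` before `cv2.imshow` should then show the whole shot in a smaller window. I have not timed this on the Nano.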

Update 2020 08 21 9:23am PDT

The following was added to the site below:

https://theta360.guide/special/linuxstreaming/

- screencast of building libuvc on Jetson Nano

- explanation of why the demo is failing on Raspberry Pi 2, 3, and 4 (in 4K) and will likely continue to fail due to hardware limitations

- explanation of problems with x86 Linux with discrete graphics card and two possible workarounds (I still need to test this on different platforms)

- tip to optimize NVIDIA Jetson video

- instructions to get video to work on NVIDIA Xavier

Update 2020 08 21 9:51am PDT

- new video on building and loading the v4l2loopback module to expose /dev/video0 (when only one camera is connected)
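If more than one camera is connected, the loopback device may not end up at /dev/video0, and the index passed to `cv2.VideoCapture` would need to change. As a small sketch, assuming the usual `/dev/videoN` naming, this lists the video device nodes and the index for each. The function name `find_video_devices` is just something I made up for the example.

```python
import glob
import os
import re


def find_video_devices(dev_dir="/dev"):
    """Return [(index, path), ...] for videoN nodes in dev_dir, sorted by index.

    Hypothetical helper; assumes device nodes are named video0, video1, ...
    """
    devices = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        # Only accept names that are exactly "video" followed by digits
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:
            devices.append((int(match.group(1)), path))
    return sorted(devices)
```

Calling `find_video_devices()` and printing the result shows which indexes are available to try with `cv2.VideoCapture(index)`. It only looks at the file names, so it does not tell you which node is the THETA.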

I’m working on more documentation and videos at the moment.

Reminder: Fully Online Meetup on this topic on Sept 1 at 10am PDT