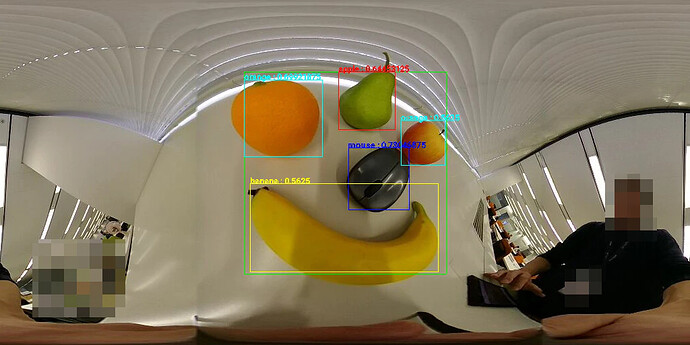

Community developer KA-2 recently published an article in Japanese on using TensorFlow Lite inside the RICOH THETA for object recognition.

This is a followup to his previous articles on using LivePreview with the THETA.

Both of the articles above come with sample code on GitHub.

I’ll summarize the key points of KA-2’s most recent article. Developing plug-ins is free. However, you must register for the plug-in partner program to unlock your camera and put it in developer mode.

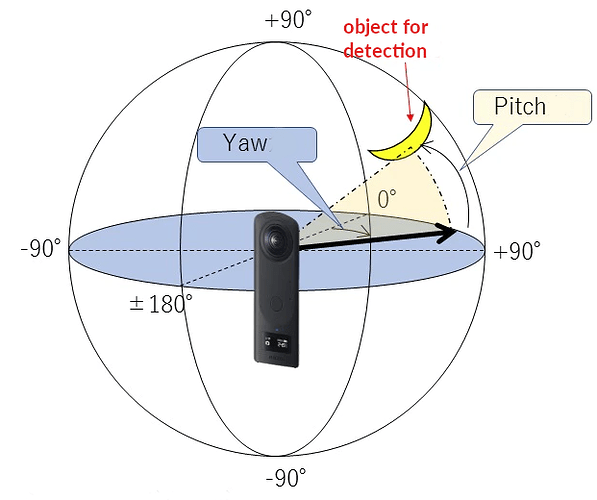

In his new article, KA-2 explains how to track a specific object in a horizontal direction using the THETA’s local coordinate system.

- graphic representation of Yaw

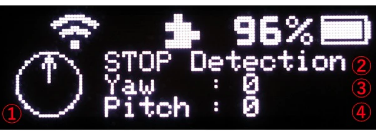

- status of object detection

  a. `** Lock-On! **`

  b. `- can’t find -`

  c. `STOP Detection`

- Yaw in angle degrees

- Pitch in angle degrees

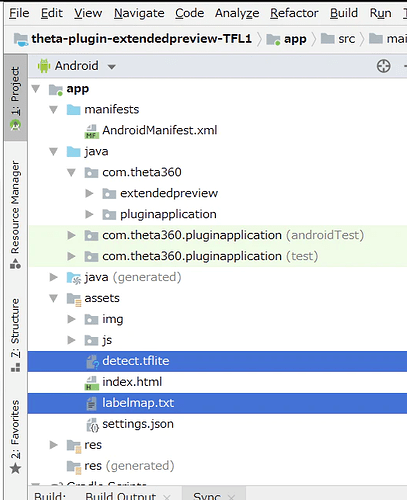
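The yaw and pitch readouts can be related to a pixel position in the equirectangular frame. The following is a minimal sketch of the usual equirectangular mapping, not KA-2's actual code. The names `EquirectAngles`, `yawDegrees`, and `pitchDegrees` are hypothetical, and the sign conventions (yaw 0 at the horizontal center and positive to the right, pitch positive upward) are assumptions that may differ from the plug-in.

```java
/** Hypothetical helper: maps a pixel in an equirectangular frame to angles in degrees. */
final class EquirectAngles {
    private EquirectAngles() {}

    /** Yaw in degrees, assuming 0 at the horizontal center and a range of [-180, 180). */
    static double yawDegrees(double x, int frameWidth) {
        return (x / frameWidth) * 360.0 - 180.0;
    }

    /** Pitch in degrees, assuming +90 at the top row and -90 at the bottom row. */
    static double pitchDegrees(double y, int frameHeight) {
        return 90.0 - (y / frameHeight) * 180.0;
    }

    public static void main(String[] args) {
        // Center of a detection box in a 1024x512 frame (example values).
        double centerX = 768.0;
        double centerY = 128.0;
        System.out.println("yaw=" + yawDegrees(centerX, 1024));    // prints yaw=90.0
        System.out.println("pitch=" + pitchDegrees(centerY, 512)); // prints pitch=45.0
    }
}
```

With this mapping, one pixel of a 1024-pixel-wide frame spans about 0.35 degrees of yaw.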

MainActivity.java

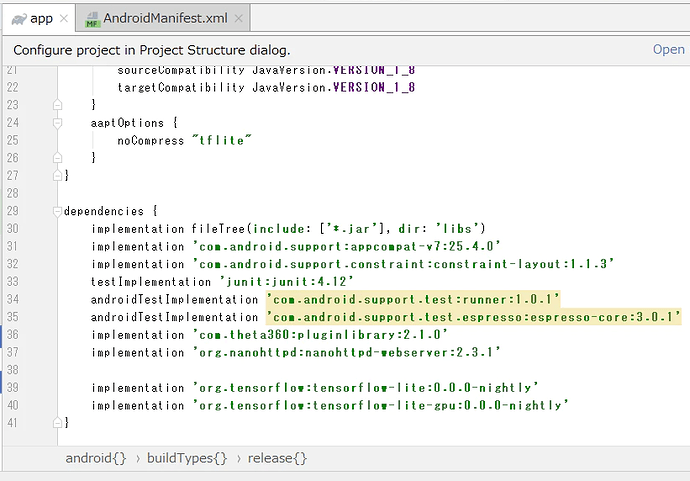

TensorFlow Lite

Object Processing

Object detection is run on a 300x300 pixel image.

```java
///////////////////////////////////////////////////////////////////////
// TFLite Object detection
///////////////////////////////////////////////////////////////////////
final List<Classifier.Recognition> results = detector.recognizeImage(cropBitmap);
Log.d(TAG, "### TFLite Object detection [result] ###");
for (final Classifier.Recognition result : results) {
    drawDetectResult(result, resultCanvas, mPaint, offsetX, offsetY);
}
```
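The `offsetX` and `offsetY` arguments suggest that detections are reported relative to the cropped bitmap and shifted back into the full frame for drawing. Here is a minimal sketch of that shift, under that assumption. `CropOffset` and `toFrame` are hypothetical names, and the `{left, top, right, bottom}` array layout is chosen only for illustration.

```java
import java.util.Arrays;

/** Hypothetical helper: shifts a box from crop coordinates into full-frame coordinates. */
final class CropOffset {
    private CropOffset() {}

    /**
     * @param box     {left, top, right, bottom} relative to the cropped bitmap
     * @param offsetX x position of the crop's left edge in the full frame
     * @param offsetY y position of the crop's top edge in the full frame
     * @return a new array holding the same box in full-frame coordinates
     */
    static float[] toFrame(float[] box, float offsetX, float offsetY) {
        return new float[] {
            box[0] + offsetX, box[1] + offsetY,
            box[2] + offsetX, box[3] + offsetY
        };
    }

    public static void main(String[] args) {
        // A box inside a 300x300 crop whose top-left corner sits at (362, 106) in the frame (example values).
        float[] inFrame = toFrame(new float[] {10f, 20f, 110f, 220f}, 362f, 106f);
        System.out.println(Arrays.toString(inFrame)); // prints [372.0, 126.0, 472.0, 326.0]
    }
}
```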

Using the Result

```java
double confidence = Double.valueOf(inResult.getConfidence());
if (confidence >= 0.54) {
    Log.d(TAG, "[result] Title:" + inResult.getTitle());
    Log.d(TAG, "[result] Confidence:" + inResult.getConfidence());
    Log.d(TAG, "[result] Location:" + inResult.getLocation());
}
```
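To track one object, the thresholded results still need to be narrowed to a single detection. Here is a minimal sketch of one way to do that, assuming you keep the highest-confidence result whose title matches the target. `Detection`, `BestMatch`, and `pick` are hypothetical stand-ins for illustration, not the `Classifier.Recognition` API.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical stand-in for a detection result (title plus confidence). */
final class Detection {
    final String title;
    final float confidence;

    Detection(String title, float confidence) {
        this.title = title;
        this.confidence = confidence;
    }
}

final class BestMatch {
    private BestMatch() {}

    /** Returns the most confident detection of the target at or above the threshold, or null. */
    static Detection pick(List<Detection> results, String target, float threshold) {
        Detection best = null;
        for (Detection d : results) {
            if (!d.title.equals(target) || d.confidence < threshold) {
                continue; // wrong label or too uncertain
            }
            if (best == null || d.confidence > best.confidence) {
                best = d;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Detection> results = Arrays.asList(
            new Detection("banana", 0.50f),
            new Detection("banana", 0.72f),
            new Detection("person", 0.95f));
        Detection hit = pick(results, "banana", 0.54f);
        System.out.println(hit == null ? "- can't find -" : "** Lock-On! ** " + hit.confidence);
        // prints ** Lock-On! ** 0.72
    }
}
```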

Thread Priority

```java
public void imageProcessingThread() {
    new Thread(new Runnable() {
        @Override
        public void run() {
            // Run this thread at Android's background priority.
            android.os.Process.setThreadPriority(android.os.Process.THREAD_PRIORITY_BACKGROUND);
        }
    }).start();
}
```

Limitations

Operation was limited by heat build-up. The Z1 ran continuously for 20 to 30 minutes, while the V managed 10 to 15 minutes. The Z1 has a metal body and better heat dissipation. Tests were done at an ambient temperature of around 20 to 22°C.

Results

Using the LivePreview WebAPI with continuous 1024x512 equirectangular frames, TensorFlow Lite Object Detection could track a banana horizontally at a sustained 6fps. Sometimes 7fps could be achieved.

At 640x320, 8fps could be achieved. However, small objects such as bananas could not be recognized unless they were held close to the camera, at around 10cm.

The recognition rate for people was high. Using pose estimation, it seems possible to create a camera for party shots, such as when a person throws their hands up in the air for a “hooray” shot.

Potential Applications

Taking a shot for documentation or commemoration would work. However, long-term continuous operation is not feasible unless you reduce the frequency of recognition and use the WebUI only during debugging. For longer-term use, you must implement a way to turn object recognition on and off.
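One way to approach both points is a small gate that the frame loop consults before calling the detector. The sketch below is an assumption about how this could be structured, not code from the article. `DetectionGate` and its methods are hypothetical names, and the 500 ms interval is an arbitrary example.

```java
/** Hypothetical gate: allows detection only when enabled and at a limited rate. */
final class DetectionGate {
    private final long minIntervalMillis;
    private boolean enabled = true;
    private boolean hasRun = false;
    private long lastRunMillis;

    DetectionGate(long minIntervalMillis) {
        this.minIntervalMillis = minIntervalMillis;
    }

    /** Turns detection on or off, for example from a button press. */
    synchronized void setEnabled(boolean enabled) {
        this.enabled = enabled;
    }

    /** Returns true if the caller should run detection on the frame arriving at nowMillis. */
    synchronized boolean shouldRun(long nowMillis) {
        if (!enabled) {
            return false;
        }
        if (hasRun && nowMillis - lastRunMillis < minIntervalMillis) {
            return false; // too soon after the previous detection; skip this frame
        }
        hasRun = true;
        lastRunMillis = nowMillis;
        return true;
    }

    public static void main(String[] args) {
        DetectionGate gate = new DetectionGate(500); // at most about 2 detections per second
        System.out.println(gate.shouldRun(0));    // true: first frame
        System.out.println(gate.shouldRun(200));  // false: only 200 ms elapsed
        System.out.println(gate.shouldRun(500));  // true: interval elapsed
        gate.setEnabled(false);
        System.out.println(gate.shouldRun(2000)); // false: detection switched off
    }
}
```

Frames that the gate rejects can still be passed through to the preview, so only the recognition work is skipped.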

Reference

Full code examples are in the original Japanese article.