We’re closer than you think.

In the movies, VR transports people to a world filled with sights, sounds, and touch. As software developers and hardware engineers, we know that world is far away. Or is it?

theta360.guide interviewed Professor Yasushi Ikei of Tokyo Metropolitan University about TwinCam Go, his research project that transmits vision, sound, and motion over wide-area networks. Professor Ikei has an Engineering Ph.D. from Tokyo University and conducts research on virtual reality technology, especially tactile displays, wearable/mobile computing in connection with new human interface technology, and ubiquitous computing.

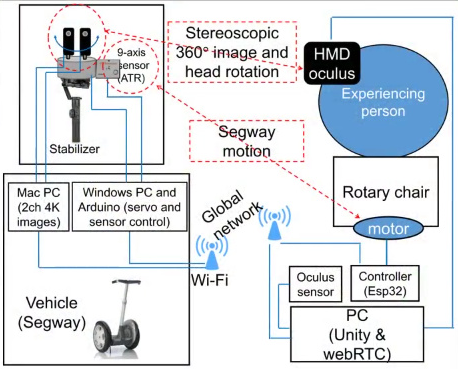

In our earlier article on TwinCam Go, we described the functionality of the “Real-Time Sharing of Vehicle Ride Sensation with Omni-Directional Stereoscopic Camera and Rotary Chair.”

In our interview with Professor Ikei, we focused on the techniques his team uses with the THETA V to reduce latency. Reducing latency is important because it helps prevent motion sickness, or “VR sickness.”

theta360.guide: What are the advantages of using THETA Vs as a part of the TwinCam Go system?

Professor Ikei: The THETA V provides high-quality omnidirectional live streaming. The 360 video stream reduces the perceived delay of images even when there is a communication delay: because the video is omnidirectional, the scene moves as soon as the viewer turns their head. Stereo vision (binocular parallax), however, is still delayed.
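To illustrate why an omnidirectional stream hides network delay during head turns, here is a minimal Python sketch (not the TwinCam Go code) that picks the columns of an equirectangular frame falling inside the viewer's horizontal field of view. It assumes a 3840-pixel-wide equirectangular frame with column 0 at yaw 0°, and `viewport_columns` is a hypothetical helper name; real renderers such as Unity project the frame onto a sphere rather than slicing columns.

```python
def viewport_columns(yaw_deg: float, hfov_deg: float, width: int) -> list[int]:
    """Return equirectangular column indices covered by a horizontal field of view.

    Assumption: column 0 corresponds to yaw 0 degrees and yaw increases with
    the column index, wrapping around at 360 degrees.
    """
    deg_per_col = 360.0 / width
    # Left edge of the view, wrapped into [0, 360).
    start = (yaw_deg - hfov_deg / 2.0) % 360.0
    first = int(start / deg_per_col)
    count = round(hfov_deg / deg_per_col)
    # Modulo wraps the view across the seam of the panorama.
    return [(first + i) % width for i in range(count)]


if __name__ == "__main__":
    cols = viewport_columns(yaw_deg=0.0, hfov_deg=90.0, width=3840)
    print(len(cols), cols[0], cols[-1])
```

Because the whole panorama is already on the viewer's machine, a head turn only changes which columns are shown, so it needs no network round trip. This does nothing for binocular parallax, which, as Professor Ikei notes, is still delayed.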

theta360.guide: Are there other techniques you use to reduce “VR sickness”?

Professor Ikei: Yes, there are many. For example, we suppress the discrepancy between vision and bodily sensation by providing body-rotation feedback that follows the motion of the Segway (acceleration and rotation).

theta360.guide: What type of video compression are you using?

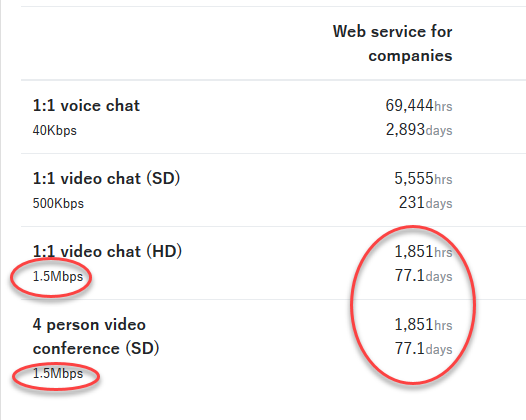

Professor Ikei: Encoding with a high compression ratio is preferable. However, the processing power of the PC on the Segway side is a problem under a continuous heavy encoding load. We used VP9, but its CPU load is very high and reaches the limit of the PC. The stream is about 3 Mbps at 4K, 30 fps when the camera is placed on a desk. When the Segway moves, WebRTC's automatic control reduces the image to about 3K resolution.
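The bitrate figure is easier to appreciate as arithmetic. The sketch below assumes the THETA V's 4K live stream is 3840 × 1920 equirectangular and compares it with uncompressed 8-bit YUV 4:2:0 video (12 bits per pixel); both are assumptions for illustration, and the function names are hypothetical.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of encoded bits spent on each pixel of each frame."""
    return bitrate_bps / (width * height * fps)


def compression_ratio(bitrate_bps: float, width: int, height: int, fps: float,
                      raw_bits_per_pixel: float = 12.0) -> float:
    """Raw (uncompressed) bitrate divided by the encoded bitrate.

    raw_bits_per_pixel=12 assumes uncompressed 8-bit YUV 4:2:0.
    """
    raw_bps = width * height * fps * raw_bits_per_pixel
    return raw_bps / bitrate_bps


if __name__ == "__main__":
    # Assumed 4K live-stream geometry: 3840 x 1920 at 30 fps, about 3 Mbps.
    bpp = bits_per_pixel(3e6, 3840, 1920, 30)
    ratio = compression_ratio(3e6, 3840, 1920, 30)
    print(f"{bpp:.4f} bits/pixel, about {ratio:.0f}:1")
```

Under these assumptions, 3 Mbps works out to roughly 0.014 bits per pixel, a compression ratio of about 885:1 relative to raw video.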

theta360.guide: Are you still faced with latency challenges?

Professor Ikei: We have not faced the latency problem, because our system design covers it. The data compression is done by VP9 encoding within WebRTC.

theta360.guide: What about latency between the two THETA Vs? How do you synchronize the two cameras?

Professor Ikei: The latency difference between two images is small enough for our application at this time. No synchronization is applied.

theta360.guide: You mentioned high CPU load. What is the hardware configuration? Are you using VP9 hardware acceleration? I heard there was some VP9 hardware acceleration support in Intel Skylake, Nvidia Maxwell or later, AMD Fiji and Polaris. Maybe this is just for the decoder support?

Professor Ikei: We used a 15-inch MacBook Pro (MacBookPro14,3) with a 2.9 GHz Intel Core i7. It may be Skylake, in which VP9 encoding is accelerated. On the user side, a Core i9-7900X (3.30 GHz) with two GTX 1080 Ti cards renders the omnidirectional video in Unity for the Oculus CV1. At the moment, the chair is controlled by an ESP32 with a motor driver amplifier.

theta360.guide: Are you still using VP9? Have you looked into using AV1? What about hardware-based compression for HEVC?

Professor Ikei: Yes. We picked VP9 because the Unity embedded browser requires it, but we are still searching for a better solution. We have not looked into AV1, since it may require more CPU and may not be stable yet because it is very new. The same applies to HEVC. However, we are interested in these new codecs and would like to use them if they are suitable for our system.

theta360.guide: What is the protocol you use to transmit the Segway position to the chair? Is there a standard?

Professor Ikei: The Segway position is not used at the moment. The orientation (rotation angle) of the Segway is transmitted to the chair over the WebRTC data channel, using an in-house protocol.
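The team's data-channel protocol is in-house and unpublished, so the following is only a hypothetical Python sketch of what a compact orientation message might look like: a sequence number, a sender timestamp, and the Segway's yaw angle, packed with `struct`. The field layout and the `OrientationMessage`/`OrientationReceiver` names are assumptions. The receiver drops out-of-order messages, which matters if the data channel is opened unordered (for example with `ordered: false` in the browser's `RTCDataChannel` options) to avoid head-of-line blocking.

```python
import struct
from dataclasses import dataclass
from typing import Optional

# Hypothetical wire format (little-endian, no padding):
#   uint32 sequence number, float64 sender timestamp [s], float32 yaw [deg]
_FORMAT = "<Idf"


@dataclass
class OrientationMessage:
    seq: int
    timestamp: float
    yaw_deg: float

    def encode(self) -> bytes:
        return struct.pack(_FORMAT, self.seq, self.timestamp, self.yaw_deg)

    @classmethod
    def decode(cls, payload: bytes) -> "OrientationMessage":
        seq, timestamp, yaw_deg = struct.unpack(_FORMAT, payload)
        return cls(seq, timestamp, yaw_deg)


class OrientationReceiver:
    """Keeps only the newest orientation; older (reordered) messages are dropped."""

    def __init__(self) -> None:
        self.last_seq = -1

    def handle(self, payload: bytes) -> Optional[float]:
        msg = OrientationMessage.decode(payload)
        if msg.seq <= self.last_seq:
            return None  # stale: a newer orientation was already applied
        self.last_seq = msg.seq
        return msg.yaw_deg
```

The encoded bytes are what a sender would pass to the data channel's `send()` method. For brevity, the sketch ignores sequence-number wraparound at 2^32.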

theta360.guide: Why did you choose WebRTC over RTMP or other protocols? Does WebRTC have lower latency than RTMP? What library are you using for WebRTC?

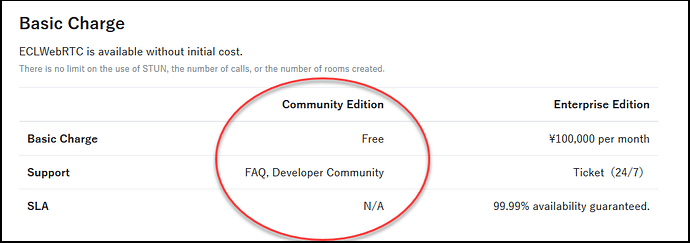

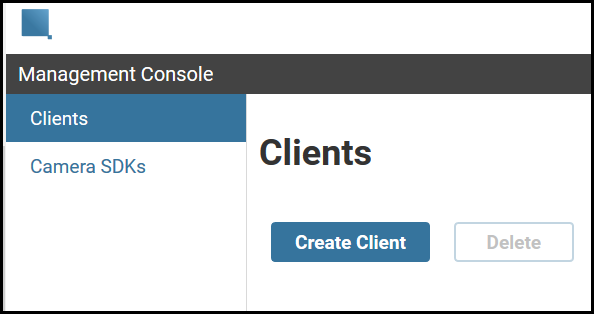

Professor Ikei: We used WebRTC because it has lower latency, thanks to peer-to-peer connections and RTP over UDP. We use SkyWay by NTT Communications to make the WebRTC implementation easier.
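WebRTC carries media as RTP packets over UDP (as SRTP, which encrypts the payload but leaves the fixed header unencrypted). To show what the RTP layer contributes, this Python sketch parses the 12-byte fixed header defined in RFC 3550 and converts a timestamp difference into seconds. It assumes the 90 kHz RTP clock used for video, and the function names are hypothetical; in practice the WebRTC stack does all of this internally.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte RTP fixed header")
    # Network byte order: two flag bytes, 16-bit seq, 32-bit timestamp, 32-bit SSRC.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


def rtp_elapsed_seconds(ts_a: int, ts_b: int, clock_rate: int = 90_000) -> float:
    """Time from ts_a to ts_b, allowing for 32-bit timestamp wraparound."""
    return ((ts_b - ts_a) & 0xFFFFFFFF) / clock_rate
```

The sequence number lets the receiver detect lost packets, and the timestamp lets it schedule playout. Unlike TCP, which RTMP runs over, UDP never holds back later packets while a lost one is retransmitted, so the receiver can choose to skip a late packet instead of stalling.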

theta360.guide: What are you using to transmit audio?

Professor Ikei: The audio is also sent through a WebRTC media channel. We use a stereo channel, with a microphone on each THETA V. Four-channel sound cannot be used in live streaming because of the camera's specifications. We will extend it to spatial audio in the future.

Note from theta360.guide: The THETA plug-in API does not support streaming video over a USB cable, so that does not look like a viable solution.

theta360.guide: How do you get the video stream into the VR Headset?

Professor Ikei: To receive the image, we used WebRTC and an embedded browser in Unity. The embedded browser and AVPro are commercial products, so those assets are not available for free use.

theta360.guide: The Segway can spin in a full circle. Will the viewer in the chair also spin? Have you built in some ratio between the movement of the Segway and the movement of the chair?

Professor Ikei: The chair rotates by a different angle than the Segway. That is part of the research.
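The actual mapping between Segway and chair rotation is part of the ongoing research and is not published. Purely as an illustration, here is a hypothetical Python sketch of one simple approach: scale the Segway's yaw by a gain, unwrap angles so that crossing ±180° does not cause a jump, and rate-limit the chair so it never turns faster than a chosen maximum. The `gain` and `max_rate_dps` values and the `ChairMapper` name are assumptions, not values from the TwinCam Go system.

```python
from typing import Optional


def wrap_deg(angle: float) -> float:
    """Wrap an angle into [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


class ChairMapper:
    """Hypothetical Segway-yaw to chair-yaw mapping with gain and rate limit."""

    def __init__(self, gain: float = 0.5, max_rate_dps: float = 90.0) -> None:
        self.gain = gain                  # assumed chair/Segway rotation ratio
        self.max_rate = max_rate_dps      # assumed max chair speed [deg/s]
        self.prev_segway: Optional[float] = None
        self.segway_unwrapped = 0.0       # Segway yaw relative to the first reading
        self.chair = 0.0                  # current chair command [deg]

    def update(self, segway_yaw_deg: float, dt: float) -> float:
        if self.prev_segway is None:
            self.prev_segway = segway_yaw_deg
        # Accumulate the shortest-path change so +170 -> -170 reads as +20, not -340.
        self.segway_unwrapped += wrap_deg(segway_yaw_deg - self.prev_segway)
        self.prev_segway = segway_yaw_deg
        target = self.gain * self.segway_unwrapped
        # Limit how far the chair may move during this time step.
        max_step = self.max_rate * dt
        step = max(-max_step, min(max_step, target - self.chair))
        self.chair += step
        return self.chair
```

In a setup like the one described in the interview, the resulting angle would be sent to the motor controller on the chair side; the gain is exactly the kind of parameter a study like this might vary.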

theta360.guide: How important is adding the kinesthetic sensation to the chair? Does this help limit nausea? Or does it just help increase the illusion of “immersion”?

Professor Ikei: It has some effect in reducing motion sickness; however, the evaluation experiment is not finished yet. And yes, it also increases the sense of immersion.

Additional Information

- The THETA V stream is started by pressing the button on the camera. The team does not start the stream automatically with software.

- The THETA V is powered from the USB cable, similar to standard live streaming techniques to YouTube or HTC Vive. The TwinCam Go team sometimes experiences power loss of the THETA V during long demonstrations.

- The code is not available for public use at this time.

- The system is not available for purchase.

Next Steps for THETA Developers

- Join the RICOH THETA Plug-in Partner Program

- Join community.theta360.guide and tell us about your project