Hi Craig, thank you. How do I do this? Which file do I change, and do I need to recompile? (I am kind of new to all of this.) That’s why I need to get frames into my safe Python environment ASAP.

The /dev/video part would be great. I would be off to the races!

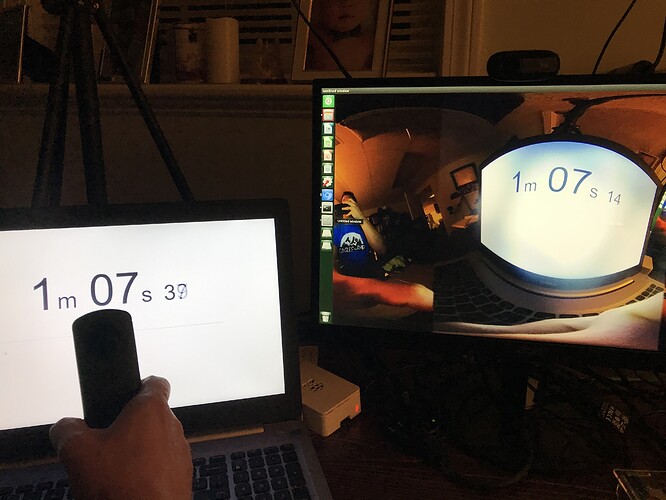

I got the tip from the developer in Japan. I don’t have access to an Xavier, though I wish I did.

The guy that gave me the tip has access to a Nano and Xavier and it is running on both.

I believe it is the line below for the gst_viewer, but I have not tested it due to lack of hardware.

However, I’m pretty happy with this new NVIDIA Jetson Nano, so that should keep me occupied for a while.

I have it working on the NVIDIA Jetson Nano. I just got the dev kit today. I will try to document the process tomorrow.

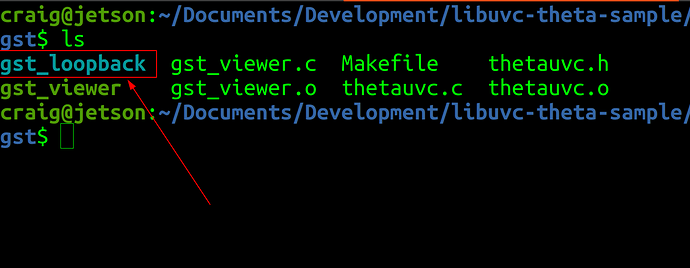

If you’re eager, grab this code:

After you install it, follow the directions in the README, then edit the v4l2sink device= value in the source to match your video device, likely /dev/video0 unless you have another camera on the Jetson.

"v4l2sink device=/dev/video0 sync=false";

You can see the devices on your Jetson with

ls -l /dev/video*

Most likely, you will only have /dev/video0 listed unless you’re running two cameras.
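If you would rather do the same check from Python, here is a minimal sketch. The function name is my own, and it only lists the device nodes; it does not tell you which one is the loopback device.

```python
import glob
import os


def list_video_devices(pattern="/dev/video*"):
    """Return the device nodes matching pattern, sorted as strings."""
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    devices = list_video_devices()
    if not devices:
        print("No /dev/video* devices found")
    for path in devices:
        # os.access reports whether the current user may read the node.
        print(f"{path} readable={os.access(path, os.R_OK)}")
```

If a device shows readable=False, the user is probably not in the video group.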

I believe that the Nano can likely only handle one THETA 4K stream, but the Xavier can handle two simultaneous streams.

I’ll try and document more tomorrow, but as you’re in the middle of your testing, thought I would share what I know thus far.

Thanks Craig, I will give it a try tomorrow. I’m in the Boston area, btw. I’m also putting together some script files to do some of this stuff.

It worked!!!

    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ sed -n 192p ./gst_viewer2.c
    pipe_proc = " decodebin ! autovideosink sync=false";
    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ sed -i '192s/.*/"nvv4l2decoder ! nv3dsink sync=false"/' ./gst_viewer2.c
    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ sed -n 192p ./gst_viewer2.c
    "nvv4l2decoder ! nv3dsink sync=false"
    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ sed -i '192s/.*/pipe_proc = "nvv4l2decoder ! nv3dsink sync=false"/' ./gst_viewer2.c
    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ sed -n 192p ./gst_viewer2.c
    pipe_proc = "nvv4l2decoder ! nv3dsink sync=false"
    jaap@jaap-desktop:~/Documents/libuvc-theta-sample/gst$ make
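For anyone repeating this edit, a sketch of the same change done by matching the pipeline string instead of a line number, so it does not depend on line 192 and leaves the rest of the line (including the trailing semicolon) alone. The two strings are copied from the session above; the function name is hypothetical, and I have not run this against every version of the sample.

```python
from pathlib import Path

# Pipeline strings copied from the sed session above.
OLD_PIPELINE = '" decodebin ! autovideosink sync=false"'
NEW_PIPELINE = '"nvv4l2decoder ! nv3dsink sync=false"'


def swap_pipeline(source_text, old=OLD_PIPELINE, new=NEW_PIPELINE):
    """Replace the first occurrence of the old pipeline string literal."""
    if old not in source_text:
        raise ValueError("expected pipeline string not found; file may differ")
    return source_text.replace(old, new, 1)


if __name__ == "__main__":
    path = Path("gst_viewer2.c")
    if path.exists():
        path.write_text(swap_pipeline(path.read_text()))
    else:
        print("gst_viewer2.c not found; run this from the gst directory")
```

Run make afterwards, as in the session above.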

Nice!!! Way to go. Thanks for the report back. Very motivational.

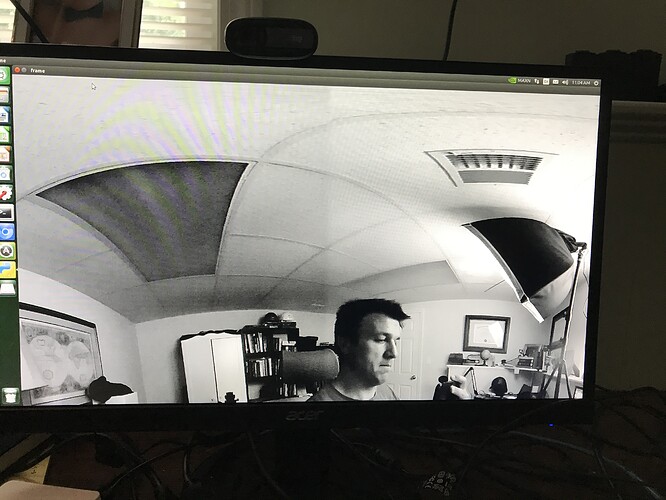

I tested the Nano with OpenCV Python.

This test script works. I have v4l2loopback installed. I will document more tomorrow. I think I made some video screen captures of the process to get it to work. I say “think” because I decided to use gstreamer to make the screen captures and I’m not that familiar with gstreamer.

    import numpy as np
    import cv2

    cap = cv2.VideoCapture(0)

    while(True):
        # Capture frame-by-frame
        ret, frame = cap.read()

        # Our operations on the frame come here
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Display the resulting frame
        cv2.imshow('frame',gray)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # When everything done, release the capture
    cap.release()
    cv2.destroyAllWindows()

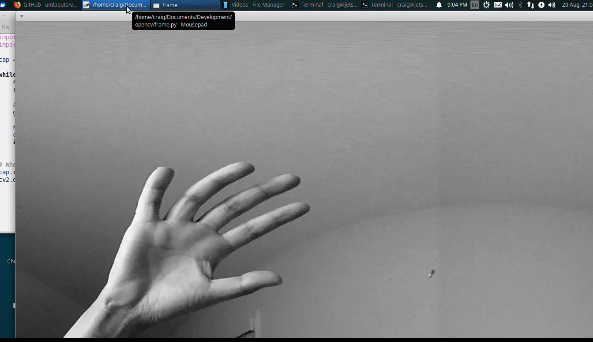

This is a frame from the output of cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) running on the video from a THETA V. It’s a big frame and this is only a small part of the shot.

Update 2020 08 21 9:23am PDT

The following was added to the site below:

https://theta360.guide/special/linuxstreaming/

- screencast of building libuvc on Jetson Nano

- explanation of why the demo is failing on Raspberry Pi 2, 3, and 4 (in 4K) and will likely continue to fail due to hardware limitations

- explanation of problems with x86 Linux with discrete graphics card and two possible workarounds (I still need to test this on different platforms)

- tip to optimize NVIDIA Jetson video

- instructions to get video to work on NVIDIA Xavier

Update 2020 08 21 9:51am PDT

- new video on building and loading the v4l2loopback to expose /dev/video0 (when only one camera is connected)

I’m working on more documentation and videos at the moment.

Reminder: Fully Online Meetup on this topic on Sept 1 at 10am PDT

Okay Craig, here is what I did on my Xavier.

I cloned and installed v4l2loopback.

I ran

modprobe v4l2loopback
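To confirm the module actually loaded, something like the sketch below should work. It assumes the usual /proc/modules layout where the module name is the first field on each line; the function name is made up for this example.

```python
def module_loaded(name, proc_modules_text):
    """Return True if name is the first field of any line in /proc/modules text."""
    for line in proc_modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/modules") as handle:
            text = handle.read()
    except OSError:
        text = ""  # not Linux, or /proc is unavailable
    print("v4l2loopback loaded:", module_loaded("v4l2loopback", text))
```

This only tells you the module is present. It does not tell you whether anything is writing frames to the device.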

Then I created the python file you showed.

    jaap@jaap-desktop:~/Documents/ricoh/ricoh_python_examples$ ls -l /dev/video*
    crw-rw----+ 1 root video 81, 0 Aug 21 10:50 /dev/video0

I get the following error trying to run the Python script:

    jaap@jaap-desktop:~/Documents/ricoh/ricoh_python_examples$ python3 ricohPyViewer_01.py
    [ WARN:0] global /home/nvidia/host/build_opencv/nv_opencv/modules/videoio/src/cap_gstreamer.cpp (1757) handleMessage OpenCV | GStreamer warning: Embedded video playback halted; module v4l2src0 reported: Internal data stream error.
    [ WARN:0] global /home/nvidia/host/build_opencv/nv_opencv/modules/videoio/src/cap_gstreamer.cpp (886) open OpenCV | GStreamer warning: unable to start pipeline
    [ WARN:0] global /home/nvidia/host/build_opencv/nv_opencv/modules/videoio/src/cap_gstreamer.cpp (480) isPipelinePlaying OpenCV | GStreamer warning: GStreamer: pipeline have not been created
    VIDEOIO ERROR: V4L2: Could not obtain specifics of capture window.
    VIDEOIO ERROR: V4L: can't open camera by index 0
    Traceback (most recent call last):
      File "ricohPyViewer_01.py", line 11, in <module>
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    cv2.error: OpenCV(4.1.1) /home/nvidia/host/build_opencv/nv_opencv/modules/imgproc/src/color.cpp:182: error: (-215:Assertion failed) !_src.empty() in function 'cvtColor'

What do you think I did wrong?

[edit], I just tried on my Jetson Nano and I am getting the same error
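A note on reading that traceback: the `!_src.empty()` assertion means cvtColor was handed an empty frame, so `cap.read()` did not return an image (for example, if nothing is feeding the device). Below is a sketch of a more defensive loop that checks `isOpened()` and the `ret` flag before processing. The helper names and the `max_empty` limit are my own choices, not part of the test script.

```python
def read_frames(cap, max_empty=30):
    """Yield frames from a cv2.VideoCapture-like object.

    Raises IOError if the device is not open, and stops after max_empty
    consecutive failed reads instead of passing an empty frame downstream.
    """
    if not cap.isOpened():
        raise IOError("Cannot open video device; is anything writing to it?")
    empty = 0
    while empty < max_empty:
        ret, frame = cap.read()
        if not ret or frame is None:
            empty += 1
            continue
        empty = 0
        yield frame


def main(device_index=0):
    import cv2  # imported here so read_frames can be used without OpenCV

    cap = cv2.VideoCapture(device_index)
    try:
        for frame in read_frames(cap):
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            cv2.imshow("frame", gray)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Call `main()` to run it against /dev/video0. With this version a missing stream gives a clear IOError instead of the cvtColor assertion.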

Are you running gst_loopback from the sample app, and did you edit the device to point to /dev/video0 in the source of the sample app? Your questions are useful as I am making a video about this process and they remind me to add info to the video. Thanks.

Do I need to run gst_loopback and then run the Python script?

[edit]

It worked. I ran

    dlinano@jetson-nano:~/Documents/ricoh/libuvc/libuvc-theta-sample/gst$ ./gst_loopback
    start, hit any key to stop
    Opening in BLOCKING MODE
    NvMMLiteOpen : Block : BlockType = 261
    NVMEDIA: Reading vendor.tegra.display-size : status: 6
    NvMMLiteBlockCreate : Block : BlockType = 261

Then I ran your Python code in a new terminal and it works!

I definitely notice some increase in lag. I will run some tests tonight comparing speeds and lag in Python / OpenCV scripts with the Jetson Nano and Xavier.

I have the lag with the Python cv2 lib. However, if I run vlc on /dev/video0 on the Jetson Nano, there is no lag. Thus, I suspect the lag might be related to the simple Python test script. I’m not sure. Just confirming that my situation is the same as yours. Thanks for the update and congratulations on making so much progress, so quickly.
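One thing that may be worth trying, though I have not confirmed it is the cause here: if frames queue up while the script is busy, the display falls behind. A common workaround is to read in a background thread and keep only the newest frame. The class below is a hypothetical sketch that works with any object exposing a cv2.VideoCapture-style `read()`.

```python
import threading
import time


class LatestFrameReader:
    """Read frames in a background thread, keeping only the newest one."""

    def __init__(self, cap):
        self._cap = cap
        self._lock = threading.Lock()
        self._frame = None
        self._running = False
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def start(self):
        self._running = True
        self._thread.start()
        return self

    def _loop(self):
        while self._running:
            ret, frame = self._cap.read()
            if not ret:
                time.sleep(0.01)  # avoid spinning when no frame is available
                continue
            with self._lock:
                self._frame = frame  # older frames are simply overwritten

    def latest(self):
        """Return the most recent frame, or None if nothing has arrived yet."""
        with self._lock:
            return self._frame

    def stop(self):
        self._running = False
        self._thread.join()
```

Usage would be `reader = LatestFrameReader(cv2.VideoCapture(0)).start()`, then call `reader.latest()` inside the display loop and `reader.stop()` at the end.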

In addition to vlc, I tested the v4l2src with gst-launch and the latency is low. I haven’t looked at the cv2 lib yet.

gst-launch-1.0 -v v4l2src ! videoconvert ! videoscale ! video/x-raw,width=1000,height=500 ! xvimagesink

Devices on Nano.

    $ v4l2-ctl --list-devices
    Dummy video device (0x0000) (platform:v4l2loopback-000):
        /dev/video0

Tested lower latency with OpenCV using the cv2 Python module.

This one just resizes the video, but it does show that processing with OpenCV is usable, though slower than with C.

    import cv2

    cap = cv2.VideoCapture(0)

    # Check if the webcam is opened correctly
    if not cap.isOpened():
        raise IOError("Cannot open webcam")

    while True:
        ret, frame = cap.read()
        frame = cv2.resize(frame, None, fx=0.25, fy=0.25, interpolation=cv2.INTER_AREA)
        cv2.imshow('Input', frame)

        c = cv2.waitKey(1)
        if c == 27:
            break

    cap.release()
    cv2.destroyAllWindows()

Compare to gst-launch using v4l2src. You can adjust the size of the video window to do the same size test.

gst-launch-1.0 -v v4l2src ! videoconvert ! videoscale ! video/x-raw,width=1000,height=500 ! xvimagesink
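To put a number on the Python side of the comparison, a small timing helper like the sketch below can wrap `cap.read` (or a function that reads and resizes). Note that it measures throughput, meaning time per call and calls per second, not end-to-end latency from camera to screen. The name `time_reads` is made up for this example.

```python
import time


def time_reads(read_fn, count=100, clock=time.perf_counter):
    """Call read_fn count times; return (average seconds per call, calls per second)."""
    start = clock()
    for _ in range(count):
        read_fn()
    elapsed = clock() - start
    avg = elapsed / count
    fps = count / elapsed if elapsed > 0 else float("inf")
    return avg, fps
```

For example, `avg, fps = time_reads(cap.read, count=200)` after opening the capture with `cv2.VideoCapture(0)`.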

I also had latency when using the Jetson Nano, but it was due to high CPU usage.

When I set the power mode of the Jetson Nano to MAXN (10W), the latency became small.

Thanks. I have the nvpmodel set to 0, which is the 10W mode. I have the 5V 4A 20W barrel connector power supply.

    $ sudo nvpmodel --query
    NVPM WARN: fan mode is not set!
    NV Power Mode: MAXN
    0
    craig@jetson:~$

I do not have a fan on the CPU. There may be thermal throttling. Do you have a fan on your CPU?

Also, do you have any luck using C++ OpenCV applications on the Jetson? Do you have any advice?

    $ g++ -L/usr/lib/aarch64-linux-gnu -I/usr/include/opencv4 frame.cpp -o frame -lopencv_videoio
    /usr/bin/ld: /tmp/ccPItXPw.o: undefined reference to symbol '_ZN2cv8fastFreeEPv'
    //usr/lib/aarch64-linux-gnu/libopencv_core.so.4.1: error adding symbols: DSO missing from command line
    collect2: error: ld returned 1 exit status
    craig@jetson:~/Documents/Development/opencv$

I’d like to compare the C++ and Python module performance, but I can’t seem to build a C++ program.
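A possible reading of that linker error: "DSO missing from command line" generally means a symbol lives in a shared library that was not named on the link line. `_ZN2cv8fastFreeEPv` is `cv::fastFree(void*)`, which is in opencv_core, and frame.cpp also calls imshow and waitKey, which are in opencv_highgui. The sketch below just assembles a g++ command that names all three libraries. It is untested on the Jetson, and the function name is my own.

```python
# Libraries frame.cpp appears to need: imshow/waitKey (highgui),
# VideoCapture (videoio), and Mat / cv::fastFree (core).
OPENCV_LIBS = ["opencv_highgui", "opencv_videoio", "opencv_core"]


def build_command(source="frame.cpp", output="frame", libs=OPENCV_LIBS):
    """Return the g++ argument list with every needed OpenCV library named."""
    cmd = [
        "g++", source, "-o", output,
        # Include and library paths copied from the failing command above.
        "-I/usr/include/opencv4",
        "-L/usr/lib/aarch64-linux-gnu",
    ]
    cmd += [f"-l{name}" for name in libs]
    return cmd


if __name__ == "__main__":
    # Print the command rather than running it, so it can be inspected first.
    print(" ".join(build_command()))
```

If an opencv4 pkg-config file is installed (an assumption, I have not checked on JetPack 4.4), `pkg-config --cflags --libs opencv4` may produce the full set of flags for you.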

    $ cat frame.cpp
    #include "opencv2/opencv.hpp"
    #include "opencv2/videoio.hpp"

    using namespace cv;

    int main(int argc, char** argv)
    {
        VideoCapture cap;
        // open the default camera, use something different from 0 otherwise;
        // Check VideoCapture documentation.
        if(!cap.open(0))
            return 0;
        for(;;)
        {
            Mat frame;
            cap >> frame;
            if( frame.empty() ) break; // end of video stream
            imshow("this is you, smile! :)", frame);
            if( waitKey(10) == 27 ) break; // stop capturing by pressing ESC
        }
        // the camera will be closed automatically upon exit
        // cap.close();
        return 0;
    }
    craig@jetson:~/Documents/Development/opencv$

I am running Jetpack 4.4.

I would like to get a demo such as OpenPose or another running. Do you have a suggestion?

This article indicates that I can’t use OpenPose on JetPack 4.4 due to the move to cuDNN 8.0 and the lack of support in Caffe for this newer version of cuDNN.

Question Update: 8/26/2020

I’m now trying to use cv2.VideoWriter on the Jetson, but there seems to be a general problem with writing to file. If anyone has it working, please let me know the technique.

This guy indicated that he had to recompile OpenCV from source. I have not been able to compile OpenCV from source on the Jetson Nano.

OpenCV Video Write Problem - #2 by DaneLLL - Jetson TX2 - NVIDIA Developer Forums

Several other people are having problems.

Update on OpenCV 4.4 with cuda

I managed to compile OpenCV 4.4 on the nano and install it.

    >>> import cv2
    >>> cv2.__version__
    '4.4.0'
    >>> cv2.cuda.printCudaDeviceInfo(0)
    *** CUDA Device Query (Runtime API) version (CUDART static linking) ***

    Device count: 1

    Device 0: "NVIDIA Tegra X1"
      CUDA Driver Version / Runtime Version 10.20 / 10.20
      CUDA Capability Major/Minor version number: 5.3
      Total amount of global memory: 3956 MBytes (4148391936 bytes)
      GPU Clock Speed: 0.92 GHz
      Max Texture Dimension Size (x,y,z) 1D=(65536), 2D=(65536,65536), 3D=(4096,4096,4096)
      Max Layered Texture Size (dim) x layers 1D=(16384) x 2048, 2D=(16384,16384) x 2048
      Total amount of constant memory: 65536 bytes
      Total amount of shared memory per block: 49152 bytes
      Total number of registers available per block: 32768
      Warp size: 32
      Maximum number of threads per block: 1024
      Maximum sizes of each dimension of a block: 1024 x 1024 x 64
      Maximum sizes of each dimension of a grid: 2147483647 x 65535 x 65535
      Maximum memory pitch: 2147483647 bytes
      Texture alignment: 512 bytes
      Concurrent copy and execution: Yes with 1 copy engine(s)
      ...
      Compute Mode:
        Default (multiple host threads can use ::cudaSetDevice() with device simultaneously)

    deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 10.20, CUDA Runtime Version = 10.20, NumDevs = 1

This repo worked.

I have a 12V fan blowing on the heat sink. The Nano is supposed to use a 5V fan, but the only fan I had in my drawer was this 12V one. It works so far.

I would like to know whether it is possible to synchronize the live streaming from two RICOH THETA Z1 cameras on a Linux distribution. Is it possible to give an external trigger to initiate live streaming? Synchronization would become important for any stereo vision application.

Thanking you in advance.

You can initiate live streaming with the USB API. With a Jetson Xavier, you can run two 4K streams from the THETA.

What is needed to synchronize the streams? Is there a working system you have that uses two normal webcams (not THETA) on /dev/video0 and /dev/video1?

I am also working on some Python GStreamer projects. Is the code thetauvc.py available somewhere? I may be able to help with it on Linux.

Thanks.

Usually, in multicamera systems, an external physical device, sometimes the camera itself, sends a physical signal to initiate a capture. This ensures that the capture occurs at the same instant. Even if I use the USB API, I believe absolute synchronization can only be guaranteed with a real-time operating system, not with a normal Ubuntu distribution. Such synchronized data is a necessity for multi-view applications, such as finding correspondences to estimate disparity and eventually depth. Any slight difference in the instant of capture could affect the algorithm output.

I was using two THETA S cameras on an Ubuntu system, where they are detected as /dev/video0 and /dev/video1. But we were not able to achieve absolute synchronization. We tried to reduce latency and improve synchronization using the VideoCaptureAsync class in OpenCV along with ApproximateTimeSynchronizer in ROS. However, this does not achieve absolute synchronization, as we have no control over the camera to initiate a capture.
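For readers unfamiliar with approximate time synchronization, here is a minimal sketch of the general idea: pair frames from two streams when their host-side timestamps fall within a tolerance. This is a simple greedy matcher I wrote for illustration, not the algorithm ROS uses, and it only aligns frames after capture. It cannot make the cameras expose at the same instant.

```python
def pair_by_timestamp(left, right, tolerance):
    """Pair items from two time-sorted lists of (timestamp, frame) tuples.

    Items are paired when their timestamps differ by at most tolerance
    (same units as the timestamps); unmatched items are dropped.
    """
    pairs = []
    i = j = 0
    while i < len(left) and j < len(right):
        t_left, t_right = left[i][0], right[j][0]
        if abs(t_left - t_right) <= tolerance:
            pairs.append((left[i], right[j]))
            i += 1
            j += 1
        elif t_left < t_right:
            i += 1  # left frame is too old to match the current right frame
        else:
            j += 1  # right frame is too old to match the current left frame
    return pairs
```

With timestamps in milliseconds, `pair_by_timestamp(left, right, 10)` keeps only pairs captured within 10 ms of each other according to the host clock.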

I do not think that the solution in this thread with libuvc-theta will be able to meet the requirements for VideoCaptureAsync.

Ideas for further searching:

- look for anyone using libuvc (not libuvc-theta) with synchronization. Can a UVC 1.5 camera provide the synchronization signal?

- look for another project that connects two physical Android devices to another computer. The THETA V/Z1 run Android 7 internally. You may be able to write your own pipeline inside the camera.

The V/Z1 plug-in also supports the NDK. The internal OS is Android on a Snapdragon ARM processor from Qualcomm.

Can you use two stereo cameras from another system for disparity and depth and then use a third camera (the THETA) for the 360 view and data?

Although using three cameras (2 not-THETA synchronized, and 1 THETA) is more complex, it may be easier to implement.

It seems that you can use two cheap cameras with the Jetson Nano and either a specialized board or an Arduino for the external signal.

Is it possible to use the THETA for general surrounding information and the specialized dual-camera synced setup (not THETA) for the detailed depth and distance estimation? The 360 view might provide your system with clues on what to focus the stereo cameras on.

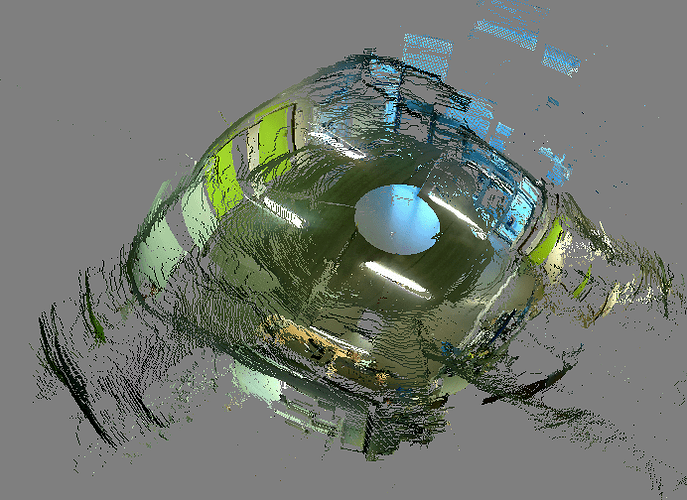

The reason we are interested in specifically using Ricoh cameras as a stereo setup is to use the entire 360 view provided by the camera to estimate depth. This I can only achieve with omnidirectional cameras such as the Ricoh. The figure shows such a 3D reconstruction that we obtained from two RICOH THETA S cameras.

We hope to improve the quality by using the higher resolution offered by the THETA Z1 and also by properly synchronizing the images.

In the video you uploaded, the camera hardware has the facility to accept an external trigger and obtain very good synchronization (on the order of nanoseconds). For the Ricoh camera, I do not see any such hardware facility. But the USB API you mentioned would help with synchronization. Since it is essentially a PTP camera, we should be able to synchronize the clocks (on the order of microseconds). Right now, I have contacted another engineer who is more familiar with the protocol. If I find a solution, I will surely share it with the community.

Also, do you know if Ricoh cameras are global shutter or rolling shutter?