This is tough as I can’t replicate the problem.

As a test, can you go into the laptop's BIOS, disable the discrete GPU (gtx2060), and use the integrated Intel UHD Graphics 630 GPU of the Intel Core i7-10875H instead?

What’s working

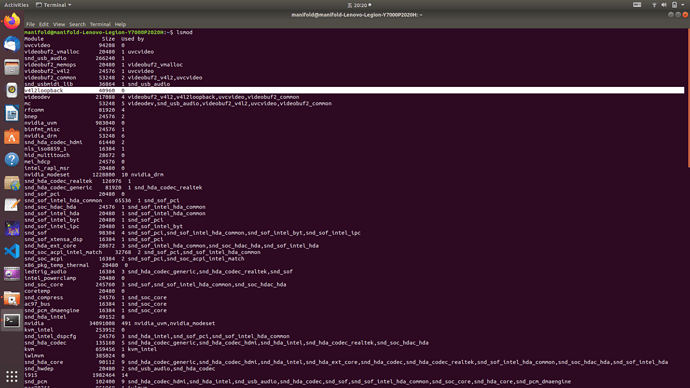

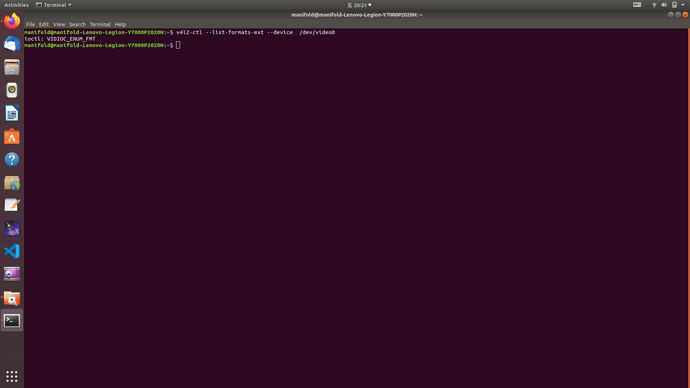

You seem to have the correct device ID in streaming mode.

libuvc-theta-sample/thetauvc.c at f8c3caa32bf996b29c741827bd552be605e3e2e2 · ricohapi/libuvc-theta-sample · GitHub

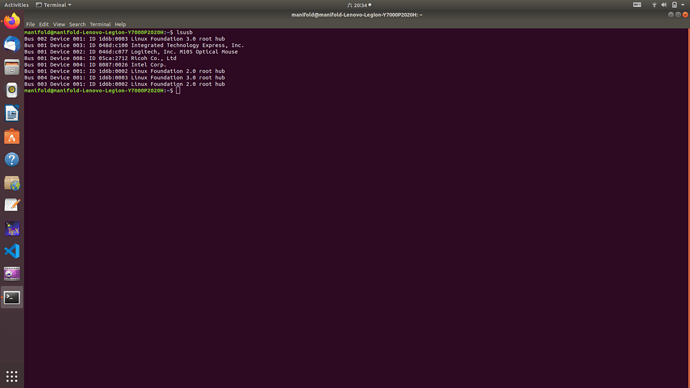

manifold test system (for future reference)

- OS: Ubuntu 18.04
- CPU: i7-10875H
- RAM: 16 GB
- GPU: gtx2060

Confirmed that the laptop does not have an integrated webcam on /dev/video0.

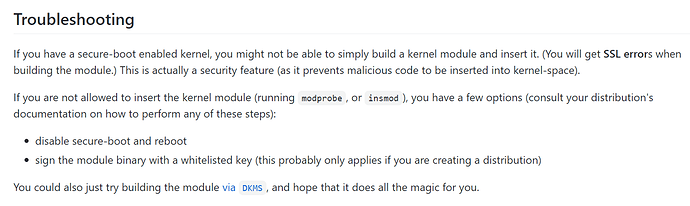

If you’re still stuck

There’s another possible path for you to solve this. However, I have not tested the solution below yet. If you test it, please report back.

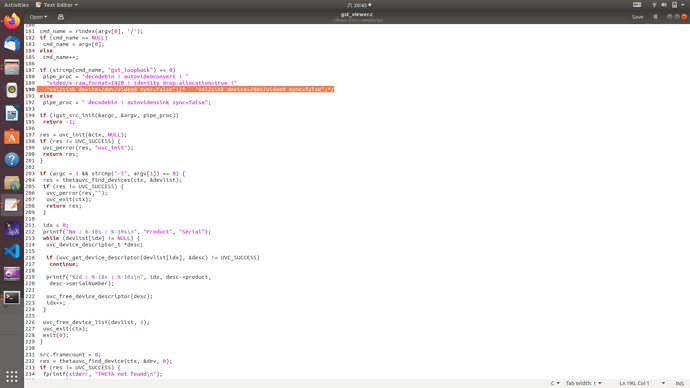

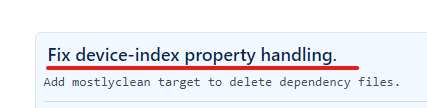

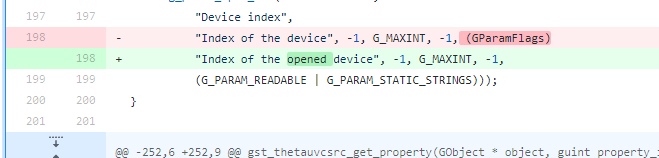

A community member has developed a GStreamer plug-in for the THETA.

If OpenCV is built with the GStreamer backend enabled, VideoCapture::open() accepts a GStreamer pipeline description as its argument, for example:

    VideoCapture cap;
    cap.open("thetauvcsrc ! h264parse ! decodebin ! videoconvert ! appsink");

opencv/cap_gstreamer.cpp at 1f726e81f91746e16f4a6110681658f8709e7dd2 · opencv/opencv · GitHub

This lets you capture images directly from GStreamer.

Please note that the standard image format in OpenCV is “BGR”, which most hardware-assisted colorspace converter plugins do not support, so you have to use a software converter.

Since recent versions of OpenCV accept I420 or NV12 as input formats for VideoCapture, you can capture without color conversion and convert with OpenCV if necessary.
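To make the two options above concrete, here is a minimal sketch of a helper that builds either pipeline description. The function name `thetaPipeline` is hypothetical, and the caps filter in the raw-format branch is my assumption: it only negotiates if the decoder on your system can output that format directly. I have not tested this against a THETA.

```cpp
#include <string>

// Hypothetical helper: returns a GStreamer pipeline description for
// cv::VideoCapture::open().
// - rawFormat empty: keep the software converter (videoconvert) so that
//   OpenCV can receive BGR frames, as in the pipeline quoted above.
// - rawFormat "I420" or "NV12": skip videoconvert and request that raw
//   format directly (assumption: the decoder can produce it).
std::string thetaPipeline(const std::string& rawFormat = "") {
    const std::string head = "thetauvcsrc ! h264parse ! decodebin ! ";
    if (rawFormat.empty()) {
        return head + "videoconvert ! appsink";
    }
    return head + "video/x-raw,format=" + rawFormat + " ! appsink";
}
```

The result would be passed to `cap.open(thetaPipeline("NV12"), cv::CAP_GSTREAMER)`. With a raw format, the frame can then be converted in OpenCV with `cv::cvtColor` and `cv::COLOR_YUV2BGR_NV12` (or `cv::COLOR_YUV2BGR_I420`) if you need BGR.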

If you decide to pursue this, it’s possible that gstreamer could be used with the ROS OpenCV camera driver if OpenCV is built with the gstreamer backend enabled.

- The Makefile in gstthetauvc does not have an “install” target. Please copy the plugin file (gstthetauvc.so) into an appropriate directory. If you copy it into a directory other than the GStreamer plugin directory, you have to add that directory to the GST_PLUGIN_PATH environment variable.

- The plugin has several properties for specifying the resolution and which THETA to use (if multiple THETAs are connected to the system). Run “gst-inspect-1.0 thetauvcsrc” for details.

- For Ubuntu 20 and Jetson users, the OpenCV packages from the official Ubuntu repositories or from NVIDIA have the GStreamer backend.

- For Ubuntu 18 users, the official OpenCV packages do not have the GStreamer backend, and the version (3.2) is too old to use with GStreamer. If you want to use it on Ubuntu 18, build the latest OpenCV from source or, AT YOUR OWN RISK, use unofficial OpenCV binary packages for Ubuntu 18 such as

  opencv-4.2 : “cran” team
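If you would rather not export GST_PLUGIN_PATH in your shell, one possible approach is to set it from inside the program before the first GStreamer pipeline is opened. This is an untested sketch: the function name `addGstPluginDir` is hypothetical, it assumes a Linux system (POSIX `setenv`, `:` as the path separator), and it assumes GStreamer has not been initialized yet in the process.

```cpp
#include <cstdlib>
#include <stdlib.h>  // POSIX setenv
#include <string>

// Hypothetical helper: prepend `dir` to GST_PLUGIN_PATH for this process.
// Call it before opening any VideoCapture that uses the GStreamer backend,
// because GStreamer reads the variable when it initializes.
bool addGstPluginDir(const std::string& dir) {
    if (dir.empty()) {
        return false;
    }
    std::string value = dir;
    const char* old = std::getenv("GST_PLUGIN_PATH");
    if (old != nullptr && *old != '\0') {
        // Keep any directories that were already configured.
        value += ":" + std::string(old);
    }
    return setenv("GST_PLUGIN_PATH", value.c_str(), 1) == 0;
}
```

Pass the directory where you copied gstthetauvc.so, for example `addGstPluginDir("/path/to/plugin-dir")` (placeholder path), and afterwards check with “gst-inspect-1.0 thetauvcsrc” in a shell that has the same variable set to confirm the plugin loads.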