First, thank you for posting your progress. We are an independent site that receives sponsorship from RICOH. If you have time, please send @jcasman and me a description of your project, either by DM on this forum or email (jcasman@oppkey.com). I understand that it may be proprietary and you may not be able to disclose it. We would like to pass the information on to our contacts at RICOH to show how people use live streaming. If there is a potential for high-volume industrial use, it may impact RICOH’s product decisions in the future.

Second, I think it’s important for us to be candid when people are evaluating the camera in prototyping. It’s a great consumer camera that streams 4K or 2K 360 video. It is widely used in commercial applications and is durable.

However, most of the commercial applications have a human who views the output. For industrial use, many of the camera’s features may go unused, so you end up paying for features you don’t need. This is fine for prototyping and low-volume systems, but may become a problem at higher volumes.

Here’s a summary of some shortcomings for large-scale industrial use:

- Camera captures three color channels. For object detection, you may need only one channel

- Rolling shutter. Most cameras, including the THETA, use this, but more expensive industrial cameras use global shutter

- Camera has a battery, increasing weight and possibly heat. You cannot easily bypass or take out the battery. For the THETA V, the battery will slowly drain while you are streaming, giving you a continuous stream time of less than 12 hours (though there are some workarounds)

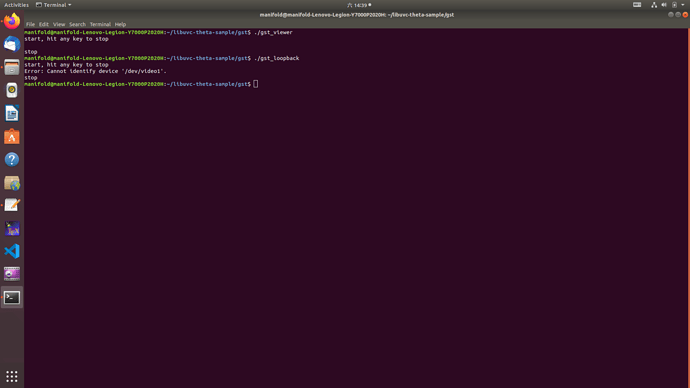

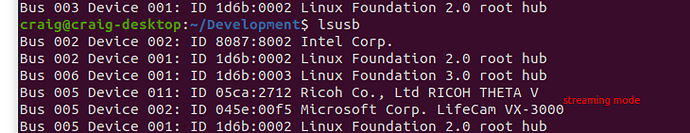

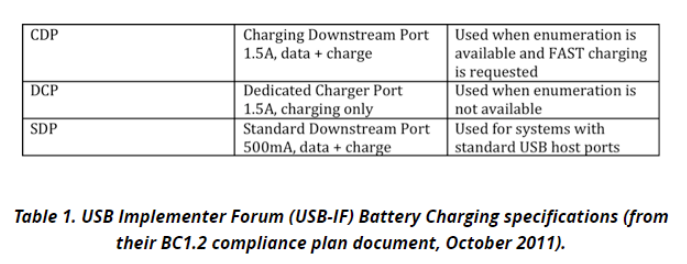

- In most cases, you will need to manually press the power button on the camera to start the stream. However, you can programmatically power cycle the USB port of the board the camera is plugged into, which triggers the camera to turn on remotely
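As a rough illustration of the USB power-cycle workaround in the last bullet, here is a minimal Python sketch. It assumes the open-source `uhubctl` command-line tool is installed and that the board's USB hub supports per-port power switching (many hubs do not). The tool choice, the hub location `1-1`, and the port number `2` are my assumptions for illustration, not something tested with the THETA in this thread.

```python
import shutil
import subprocess


def build_cycle_command(hub_location, port, delay_s=2):
    """Build the uhubctl argument list that power-cycles one USB port.

    hub_location and port are hypothetical values; run `uhubctl` with no
    arguments on your own board to list the hubs and ports it can control.
    """
    return [
        "uhubctl",
        "-l", str(hub_location),  # hub location, e.g. "1-1"
        "-p", str(port),          # port the camera is plugged into
        "-a", "cycle",            # turn power off, wait, turn it back on
        "-d", str(delay_s),       # seconds to keep the port powered off
    ]


def power_cycle_port(hub_location, port, delay_s=2):
    """Power-cycle the port. Usually needs root or suitable udev rules."""
    if shutil.which("uhubctl") is None:
        raise RuntimeError("uhubctl not found on PATH")
    subprocess.run(build_cycle_command(hub_location, port, delay_s), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this is safe to try anywhere.
    print(" ".join(build_cycle_command("1-1", 2)))
```

Calling `power_cycle_port("1-1", 2)` would actually cut and restore power on that port. Whether the camera then wakes up and starts streaming depends on the camera model and firmware, so treat this as a starting point to test, not a guaranteed fix.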

The upside is that the camera produces a 2K or 4K equirectangular stream with clean stitching and good quality at a reasonable cost for prototyping. It’s also widely used, so there are more examples of people using it.

It’s theoretically possible to buy two Sony IMX219 image sensor modules with very wide angle lenses and process each sensor independently. Various manufacturers sell those sensors with CSI cables for under $50 in low volume. However, that would be a bigger project, and its feasibility depends on how accurate you need the stitching to be and how fast an external board can stitch the frames. The advantage of the THETA is that the Snapdragon board inside the camera does very nice stitching before it outputs the stream.

Note on Sensor Overlay Data

If the sensor is a point sensor, such as one that detects radiation or another invisible part of the spectrum, the sensor data lies on a sphere around the sensor. It is likely easier to work with the THETA S and its dual-fisheye stream. Otherwise, you will need to de-fish the equirectangular frame to match your sensor data.
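If you do stay with the equirectangular stream, one way to overlay spherical sensor readings is to map each reading's direction to a pixel in the frame. The sketch below assumes the direction is given as yaw and pitch in degrees, that yaw 0 and pitch 0 land at the center of the frame, and that the frame is 3840x1920. These conventions and the function name are my assumptions; your sensor and camera mounting will need their own calibration.

```python
def direction_to_equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Map a direction on the sphere to an (x, y) pixel in an equirectangular frame.

    Assumed convention (not from the camera documentation):
    yaw 0 is the horizontal center of the frame and increases to the right,
    pitch +90 is the top row and pitch -90 is the bottom row.
    """
    # Wrap yaw into [-180, 180) so any angle is accepted.
    yaw = ((yaw_deg + 180.0) % 360.0) - 180.0
    # Clamp pitch to the valid range of the sphere.
    pitch = max(-90.0, min(90.0, pitch_deg))

    # Longitude maps linearly to columns, latitude maps linearly to rows.
    x = (yaw / 360.0 + 0.5) * width
    y = (0.5 - pitch / 180.0) * height

    # Keep the result inside the image bounds.
    return int(min(width - 1, x)), int(min(height - 1, y))


if __name__ == "__main__":
    # A reading straight ahead lands in the middle of a 3840x1920 frame.
    print(direction_to_equirect_pixel(0, 0, 3840, 1920))
```

A reading 90 degrees to the right at the horizon would land at column 2880 of a 3840-pixel-wide frame under this convention. If the sensor's axes are rotated relative to the camera, apply that rotation to the direction before calling the function.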
