Thanks, I’ll give this a try. I have both Nanos and a Xavier NX available to me. I’m attempting to run on the Xavier NX at present.

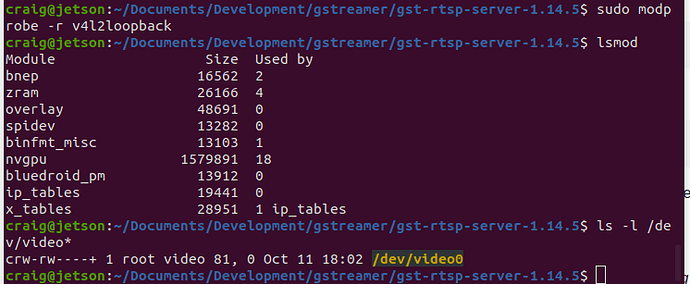

Also, at what stage does /dev/video* show up? Does the loopback driver do that?

This is handled by v4l2loopback. The v4l2loopback README has short documentation.

After you run the steps below to build and load v4l2loopback, /dev/video0 will be visible.

```
$ make
$ sudo make install
$ sudo depmod -a
$ sudo modprobe v4l2loopback
```

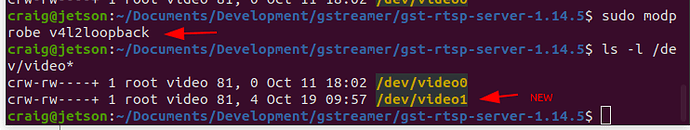

On my system, I have an existing video camera on /dev/video0 for testing, so I am going to attach the RICOH THETA camera to /dev/video1.

The first line in the example below removes the module so that I can show how to insert the module.

Insert v4l2loopback to make /dev/video* appear:

```
$ sudo modprobe v4l2loopback
$ ls -l /dev/video*
crw-rw----+ 1 root video 81, 0 Oct 11 18:02 /dev/video0
crw-rw----+ 1 root video 81, 4 Oct 19 09:57 /dev/video1
```
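If you want to check from a program which nodes exist after loading v4l2loopback, the sketch below mirrors `ls -l /dev/video*`: it globs the nodes and keeps only character devices with major number 81, the Video4Linux major visible in the listing. It is a minimal, Linux-only sketch; the function names are hypothetical and not part of the THETA sample code.

```c
#define _DEFAULT_SOURCE
#include <glob.h>
#include <stddef.h>
#include <stdio.h>
#include <sys/stat.h>
#include <sys/sysmacros.h>

/* Video4Linux character devices use major number 81 (see ls -l above). */
#define V4L_MAJOR 81u

/* Return 1 if path exists and is a V4L character device, else 0. */
int is_v4l_char_device(const char *path)
{
    struct stat st;
    if (stat(path, &st) != 0)
        return 0;
    return S_ISCHR(st.st_mode) && major(st.st_rdev) == V4L_MAJOR;
}

/* Print each /dev/video* node that is a V4L device with its
 * major/minor numbers, and return how many were found. */
int count_v4l_devices(void)
{
    glob_t g;
    int found = 0;

    if (glob("/dev/video*", 0, NULL, &g) != 0) {
        globfree(&g);          /* e.g. GLOB_NOMATCH: module not loaded */
        return 0;
    }
    for (size_t i = 0; i < g.gl_pathc; i++) {
        const char *path = g.gl_pathv[i];
        struct stat st;
        if (is_v4l_char_device(path) && stat(path, &st) == 0) {
            printf("%s  major %u  minor %u\n", path,
                   major(st.st_rdev), minor(st.st_rdev));
            found++;
        }
    }
    globfree(&g);
    return found;
}
```

On a system like the one in the listing, both /dev/video0 and /dev/video1 have major 81, so `count_v4l_devices()` should print both and return 2. A return of 0 usually means the module is not loaded yet.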

In community tests, GStreamer could not detect the decoder automatically, so the decoder and sink had to be specified explicitly. The line below should also work on the Nano, so you should be able to use the same test on both the Nano and the Xavier.

"nvv4l2decoder ! nv3dsink sync=false"
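One way to keep a single gst_viewer.c build working on both a Jetson and an x86 machine is to pick the decode/display string at run time. This is a minimal sketch under stated assumptions: detecting a Jetson by the presence of /etc/nv_tegra_release is an assumption about JetPack (L4T) images, the `decodebin ! autovideosink` fallback is an assumed default for non-Jetson systems, and both function names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <string.h>
#include <unistd.h>

/* Assumption: JetPack images ship /etc/nv_tegra_release and x86
 * desktops normally do not. Returns 1 on a Jetson, 0 otherwise. */
int running_on_jetson(void)
{
    return access("/etc/nv_tegra_release", F_OK) == 0;
}

/* Return the decode/display part of the gst_viewer pipeline.
 * On Jetson, name the hardware decoder and sink explicitly, as in the
 * community tests; elsewhere let GStreamer choose automatically. */
const char *viewer_pipeline(int on_jetson)
{
    if (on_jetson)
        return " nvv4l2decoder ! nv3dsink sync=false";
    return " decodebin ! autovideosink sync=false";
}
```

In gst_viewer.c this would amount to assigning `viewer_pipeline(running_on_jetson())` to the pipeline string instead of a fixed literal.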

The Xavier is better for testing the THETA. If you are going to use the Nano, you may want to change the streaming mode in gst_viewer.c to THETAUVC_MODE_FHD_2997. I am using a Nano in my tests, but this is mainly because I don’t have budget for a Xavier, as RICOH isn’t providing funds for this type of test equipment. If you apply AI processing to the stream, you may have a better experience with the Xavier, especially if you need the high-resolution frames for detection.
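To see why FHD is easier on the Nano, compare how many pixels per second the decoder has to produce in each mode. The sketch uses a hypothetical local enum instead of the real thetauvc.h constants so that it compiles on its own; the 3840x1920 and 1920x960 sizes are the THETA V's 4K and FHD equirectangular frames, both at 29.97 fps.

```c
/* Hypothetical mirror of the two THETA streaming modes; the real
 * constants (e.g. THETAUVC_MODE_FHD_2997) come from thetauvc.h. */
typedef enum { MODE_UHD_2997, MODE_FHD_2997 } theta_mode;

typedef struct {
    int width;
    int height;
    double fps;
} mode_info;

/* Frame size and rate for each mode. */
mode_info theta_mode_info(theta_mode m)
{
    if (m == MODE_UHD_2997)
        return (mode_info){3840, 1920, 29.97};
    return (mode_info){1920, 960, 29.97};
}

/* Pixels the decoder and converters must handle each second. */
double pixels_per_second(theta_mode m)
{
    mode_info i = theta_mode_info(m);
    return (double)i.width * i.height * i.fps;
}
```

UHD works out to roughly 221 million pixels per second against roughly 55 million for FHD, so the 4K stream is four times the decoding and conversion work.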

I haven’t seen it for a while.

Have we found a way to live stream from the THETA V on Ubuntu 16.04 LTS?

Does your application not run on Ubuntu 18.04? What error do you get with Ubuntu 16.04?

Hi all,

For me, the output freezes.

gst_viewer works fine.

For the first couple of seconds that gst_loopback runs, the output is normal.

VLC, OpenCV, and FFmpeg can all receive the stream from /dev/video2 normally for a couple of seconds.

Then it freezes and only updates every couple of seconds.

I also connected it to ROS using gscam, and to VLC using the rtsp-server example someone showed above. Both gave the same result. From the ROS messages, I can see that it first receives messages consistently and then only every couple of seconds.

I use Ubuntu 18.04 on a ThinkPad P1 Gen 2.

Processor: Intel® Core™ i7-9750H CPU @ 2.60GHz × 12

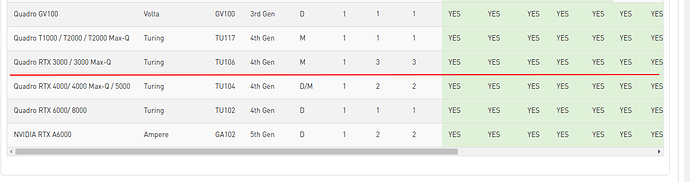

Graphics: Nvidia Quadro T1000/PCIe/SSE2.

As far as I can tell, I am running the exact code from the examples.

I am new to GStreamer, V4L2, and MTP, though, so maybe I just overlooked something obvious.

What do you think the issue might be? I suspect something related to memory or buffer handling, and that maybe the pipeline gets overloaded somewhere, but I don’t know how to check for this.

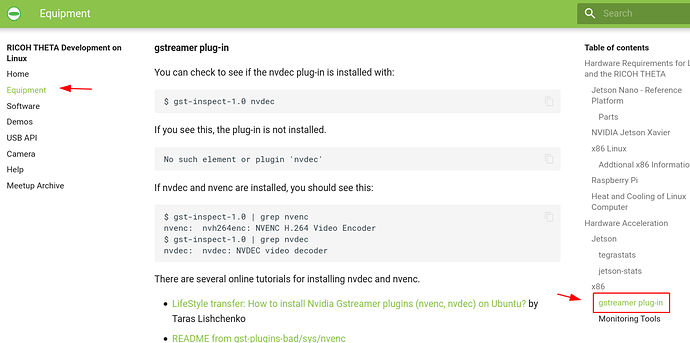

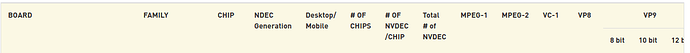

Make sure that GStreamer is using hardware-accelerated decoding, not software decoding.

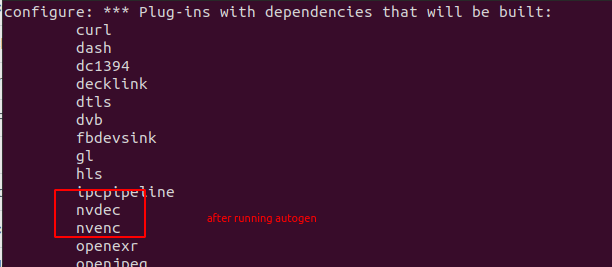

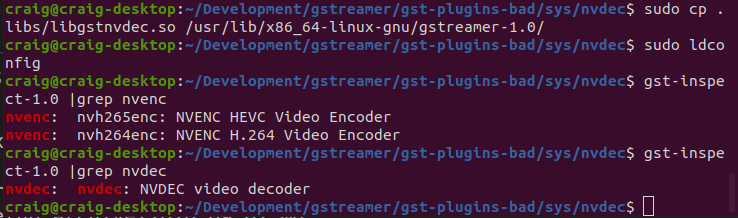

Do you have the nvdec plug-in installed? It is part of the gst-plugins-bad source distribution (not the binary distribution).

What is the output of this?

```
gst-inspect-1.0 nvdec
```

These articles may help:

gst-plugins-bad/sys/nvenc/README at 1.14.5 · GStreamer/gst-plugins-bad · GitHub

http://lifestyletransfer.com/how-to-install-nvidia-gstreamer-plugins-nvenc-nvdec-on-ubuntu/

Install NVDEC and NVENC as GStreamer plugins · GitHub

You have quite a nice graphics card that supports everything.

I just added more information on using nvdec with GStreamer to the main Linux streaming community document available here.

I also ran the compilation test on a fresh x86 machine:

```
$ NVENCODE_CFLAGS="-I/home/craig/Development/gstreamer/gst-plugins-bad/sys/nvenc" ./autogen.sh --disable-gtk-doc --with-cuda-prefix="/usr/local/cuda"
```

The process is involved. I don’t think the nvdec plug-in is needed on all systems, but I’m not sure which systems need it.

Updated Oct 22 Afternoon

Do you have qos=false in the pipeline? It’s in the source code for gst_viewer.c.

Try this first, if you haven’t already:

```c
if (strcmp(cmd_name, "gst_loopback") == 0)
    pipe_proc = "decodebin ! autovideoconvert ! "
        "video/x-raw,format=I420 ! identity drop-allocation=true ! "
        "v4l2sink device=/dev/video0 qos=false sync=false";
```

Hello sir, I am trying to stream from two THETA V cameras on my Ubuntu 20.04 computer. I followed all the steps you did with the Jetson Nano, but on my computer directly. I am having a lot of trouble, as I only get one frame every ~20 seconds…

Did you face this problem, or do you have any idea how to fix it?

I got that result with only a single camera, so I think that if I try it with both cameras in stereo, my computer will crash. Please, I need your help.

Thank you!

Try with a single camera first. On x86, did you set qos to false in the GStreamer pipeline in gst_viewer.c?

```c
if (strcmp(cmd_name, "gst_loopback") == 0)
    pipe_proc = "decodebin ! autovideoconvert ! "
        "video/x-raw,format=I420 ! identity drop-allocation=true ! "
        "v4l2sink device=/dev/video0 qos=false sync=false";
```

Please confirm that on x86 you’re testing gst_viewer, not gst_loopback, as a problem with gst_loopback could be related to v4l2loopback.

If you run gst_viewer and the frame freezes, qos=false may help.

On x86, include the output of glxinfo -B.

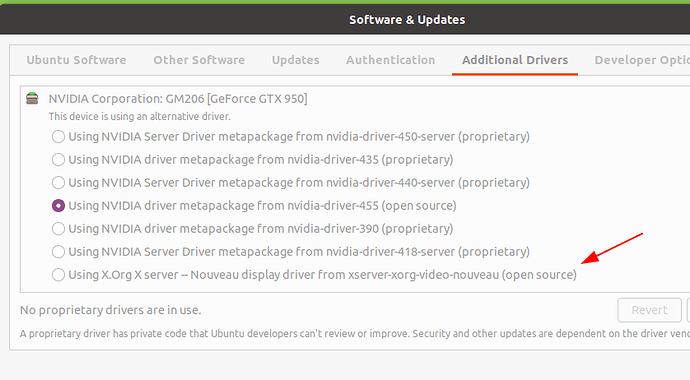

Include the part that shows your graphics card and video driver on Ubuntu 20.04. My video driver on x86 is NVIDIA 455.

```
$ glxinfo -B
name of display: :1
display: :1  screen: 0
direct rendering: Yes
Memory info (GL_NVX_gpu_memory_info):
    Dedicated video memory: 2048 MB
    Total available memory: 2048 MB
    Currently available dedicated video memory: 1633 MB
OpenGL vendor string: NVIDIA Corporation
OpenGL renderer string: GeForce GTX 950/PCIe/SSE2
OpenGL core profile version string: 4.6.0 NVIDIA 455.23.05
OpenGL core profile shading language version string: 4.60 NVIDIA
OpenGL core profile context flags: (none)
OpenGL core profile profile mask: core profile

OpenGL version string: 4.6.0 NVIDIA 455.23.05
```

It is likely that hardware acceleration is not working properly in gstreamer.

You can try the X.Org driver. This is easy to try: in Software & Updates, open the Additional Drivers tab, select the X.Org X server option, and see if it works.

If it still doesn’t work, you can try to disable or remove the NVIDIA graphics card on your system and use the integrated GPU on your Intel processor.

If it still doesn’t work, you can try to compile the nvdec gstreamer plug-in, but the process is involved.

The Jetson Nano is likely using the onboard NVIDIA hardware decoding of the H.264 stream and the x86 computer is likely getting stuck with software decoding.

If you run nvidia-smi on the x86 machine, does it show the GPU utilization increasing when you start the stream?
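To answer that question from a script instead of by eye, you can sample GPU utilization with nvidia-smi's CSV query mode. This is a minimal sketch that assumes nvidia-smi is on PATH and only reads the first GPU; the helper names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <stdlib.h>

/* Parse one line of
 *   nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits
 * such as "23\n". Returns the percentage, or -1 if the line is not a
 * number in 0..100 (e.g. "[N/A]"). */
int parse_gpu_util(const char *line)
{
    char *end;
    long value = strtol(line, &end, 10);
    if (end == line || value < 0 || value > 100)
        return -1;
    return (int)value;
}

/* Run nvidia-smi once and return the first GPU's utilization, or -1 if
 * nvidia-smi is missing or prints something unexpected. */
int read_gpu_util(void)
{
    char line[64];
    FILE *p = popen("nvidia-smi --query-gpu=utilization.gpu "
                    "--format=csv,noheader,nounits 2>/dev/null", "r");
    if (p == NULL)
        return -1;
    int util = fgets(line, sizeof line, p) ? parse_gpu_util(line) : -1;
    pclose(p);
    return util;
}
```

Sample it once before starting gst_viewer and again while the stream runs. utilization.gpu measures general GPU load; `nvidia-smi dmon` also prints per-second `enc` and `dec` columns, which is a more direct check that the hardware decoder is doing the work.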

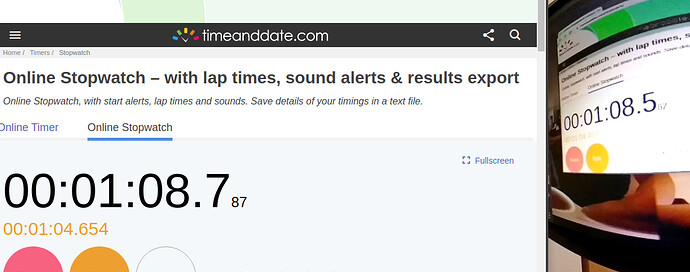

Update on using nvdec

I’ve been tweaking the pipeline using nvdec and have managed to reduce latency by 100 ms on my computer. The foreground of the screen capture shows 8.787 and the video from the THETA shows 8.567, so there appears to be a 220 ms delay to get the image to the screen on my Ubuntu 20.04 system.
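The delay measurement is just the difference between the two readings in the screenshot, 8.787 - 8.567 = 0.220 s. The hypothetical helper below does that subtraction and rounds to whole milliseconds, which is handy if you repeat the measurement several times.

```c
/* Delay between the time shown on the screen and the same time as seen
 * in the THETA video, rounded to the nearest millisecond. */
long display_delay_ms(double screen_s, double video_s)
{
    double ms = (screen_s - video_s) * 1000.0;
    return (long)(ms >= 0.0 ? ms + 0.5 : ms - 0.5);
}
```

For the readings above, `display_delay_ms(8.787, 8.567)` returns 220.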

Thank you,

By adding “qos=false” it is now working, with 550-600 ms delay.

I also installed the nvdec and nvenc plugins, but I am unsure if they are being used right now, as I don’t know how decodebin chooses its decoders.

However, nvidia-smi does show 20-30% usage.

I prepared a document for the meetup at the link below that shows nvdec pipeline options.

https://theta360developers.github.io/theta-robotics-meetup/

You get immediate access after you put in an email.

nvdec decodes the stream on the GPU, so with OpenGL you can send the frames from the GPU directly to the screen without copying them into system memory.

I do not think that decodebin automatically chooses nvdec. I think you need to specify nvdec in the pipeline.
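Since decodebin probably does not pick nvdec on its own, the decoder has to be named in the pipeline string. The sketch below builds gst_viewer-style strings for x86 with nvdec either named explicitly or left to decodebin, and takes the loopback device as a parameter instead of hard-coding it. It is untested and partly assumed: nvdec may need an h264parse in front of it, the loopback branch may need a gldownload after nvdec, and `build_x86_pipeline` is a hypothetical name.

```c
#include <stdio.h>
#include <string.h>

/* Build an x86 pipeline string in the style of gst_viewer.c.
 *   use_nvdec: 1 to name nvdec explicitly, 0 to let decodebin choose.
 *   device:    v4l2loopback node to write to, or NULL to draw on screen.
 * Returns 0 on success, -1 if the text does not fit in buf. */
int build_x86_pipeline(char *buf, size_t len, int use_nvdec,
                       const char *device)
{
    const char *decoder = use_nvdec ? "nvdec" : "decodebin";
    int n;

    if (device == NULL && use_nvdec) {
        /* nvdec output can stay on the GPU and be drawn by OpenGL. */
        n = snprintf(buf, len, "%s ! glimagesink qos=false sync=false",
                     decoder);
    } else if (device == NULL) {
        /* Assumed default display branch when nvdec is not used. */
        n = snprintf(buf, len, "%s ! autovideosink sync=false", decoder);
    } else {
        /* Loopback branch from this thread, with the decoder swapped in
         * and the device passed in rather than hard-coded. */
        n = snprintf(buf, len,
                     "%s ! autovideoconvert ! video/x-raw,format=I420 ! "
                     "identity drop-allocation=true ! "
                     "v4l2sink device=%s qos=false sync=false",
                     decoder, device);
    }
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

Passing the device explicitly also avoids the /dev/video0 versus /dev/video1 mismatch between gst_loopback and the program reading from the loopback device.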

@Mehdi_Zayene, I saw your comment on YouTube about saving to a file. I tested this on x86 and can save to a file from the v4l2loopback device with both VLC and OBS. I haven’t figured out how to save directly to a file with GStreamer. Maybe someone on this forum knows how to save directly to a file from within gst_viewer.c.

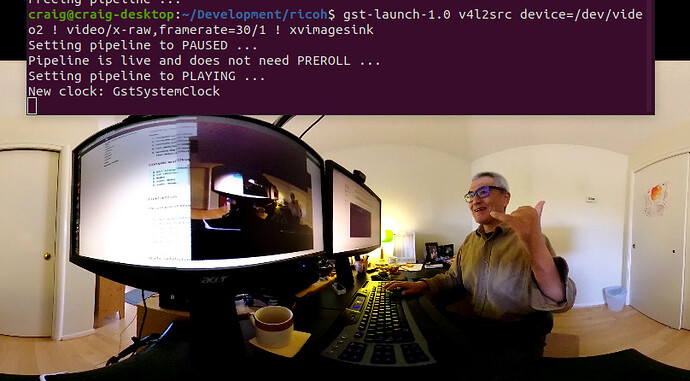

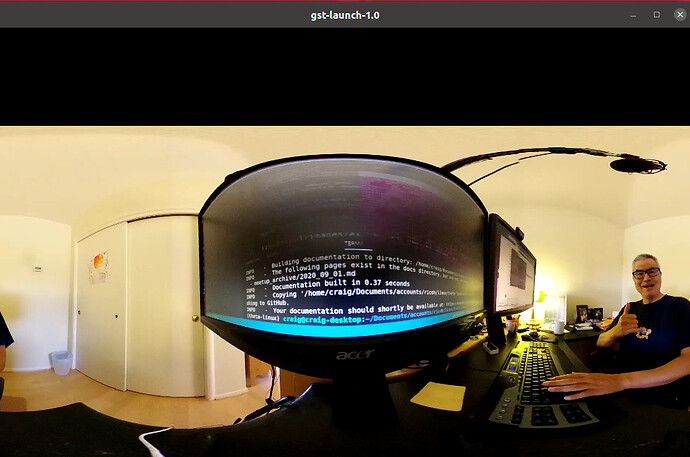

Example gst-launch pipeline:

```
$ gst-launch-1.0 v4l2src device=/dev/video2 ! video/x-raw,framerate=30/1 ! xvimagesink
Setting pipeline to PAUSED ...
Pipeline is live and does not need PREROLL ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock
```

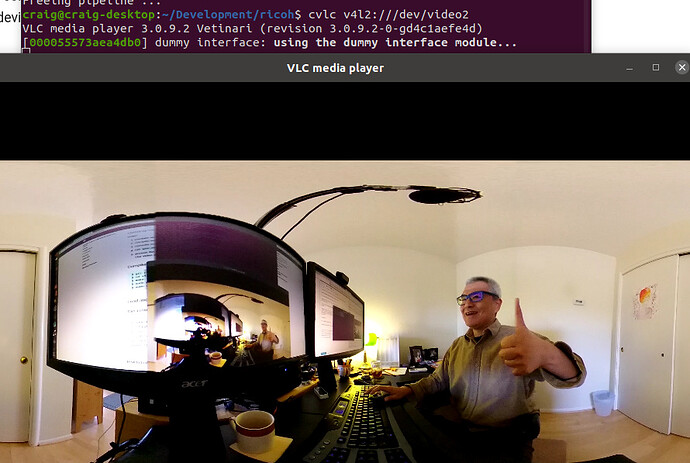

Example with VLC, using the command-line player cvlc:

```
$ cvlc v4l2:///dev/video2
VLC media player 3.0.9.2 Vetinari (revision 3.0.9.2-0-gd4c1aefe4d)
[000055573aea4db0] dummy interface: using the dummy interface module...
```

Example using v4l2-ctl to show the resolution and video output.

I’ve modified the source to stream 2K video.

```
$ v4l2-ctl --list-formats-ext --device /dev/video2
ioctl: VIDIOC_ENUM_FMT
	Type: Video Capture

	[0]: 'YU12' (Planar YUV 4:2:0)
		Size: Discrete 1920x960
			Interval: Discrete 0.033s (30.000 fps)
```

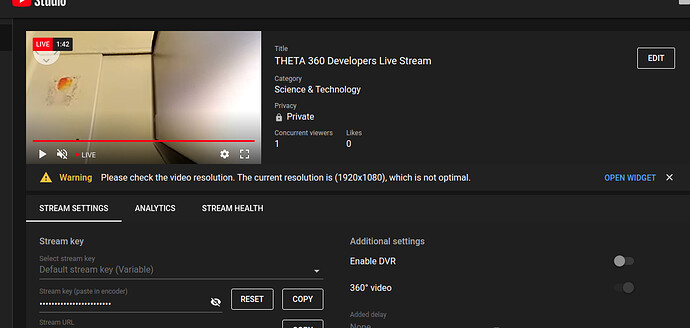

Stupidity on my part: I realised that gst_loopback was set to /dev/video1 by default. After changing that to /dev/video0, FFmpeg works if you set the input device to /dev/video0. Streaming to YouTube is working. If anyone is interested, this is the FFmpeg command:

```
ffmpeg -f lavfi -i anullsrc \
  -f v4l2 -s 3840x1920 -r 10 -i /dev/video0 \
  -vcodec libx264 -pix_fmt yuv420p -preset ultrafast \
  -strict experimental -r 25 -g 20 -b:v 2500k \
  -codec:a libmp3lame -ar 44100 -b:a 11025 -bufsize 512k \
  -f flv rtmp://a.rtmp.youtube.com/live2/secret-key
```

Will need some work on optimisation but gives me what I need.

Wow, this is fantastic! Thank you for posting this. Geez, you really know ffmpeg. Way to go.

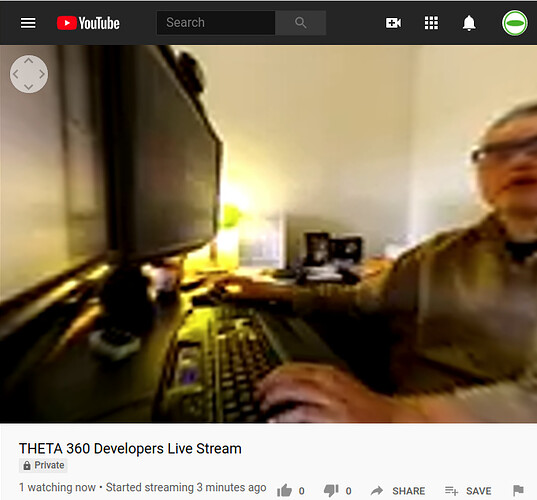

Got it working with 360 navigation. Thanks.

Also, as I don’t normally use ffmpeg, I couldn’t figure out what many of the options mean.

I’m using the simplified command below.

What does -strict experimental -r 25 -g 20 do?

```
ffmpeg -f lavfi -i anullsrc -f v4l2 -s 1920x960 -r 10 -i /dev/video2 \
  -vcodec libx264 -pix_fmt yuv420p \
  -b:v 2500k \
  -codec:a libmp3lame -ar 44100 -b:a 11025 -bufsize 512k \
  -f flv rtmp://a.rtmp.youtube.com/live2/$SECRET_KEY
```

I’ve cut down the stream resolution to preserve my daughter’s Zoom classes.

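It’s to do with the audio encoder. YouTube expects an audio stream, but I haven’t looked at audio yet, so in fact they are redundant here and can be left out. (For reference, in ffmpeg -r 25 sets the output frame rate and -g 20 sets the keyframe interval, or GOP size, in frames.)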
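The -r and -g values combine into a keyframe interval: a GOP of 20 frames at an output rate of 25 fps puts a keyframe every 20 / 25 = 0.8 s. The helper below only does that division; it is an illustration, not part of ffmpeg.

```c
/* Seconds between keyframes for an encoder GOP size (ffmpeg -g, in
 * frames) at a given output frame rate (ffmpeg -r, frames per second). */
double keyframe_interval_s(int gop_frames, double fps)
{
    return gop_frames / fps;
}
```

For example, keeping -r 25 and using -g 50 would give one keyframe every 2 s. Because the capture side is read at -r 10, ffmpeg duplicates frames to reach the 25 fps output rate.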

Thanks again for your help. I’m putting this into the community documentation with attribution to you. I feel many people want to do this.

Are you streaming from Linux to YouTube in order to use low-cost Jetson devices? Or, are you choosing Linux because you can more easily control the stream with AI or remote management?

Just wondering, as I feel that Linux is very flexible, but I’m curious why people are so interested in this platform for streaming.

Ahh, this is just step 1, and the Jetson is just a cheap device to get something working. However, I was intrigued by how much it could do. I need to use FFmpeg, as I have some other built-in encoders that do some special processing of the video. So this is the first stage of the pipeline. YouTube is just a free test environment.

The Jetson does, however, open up the possibility of a simple, portable 360 streaming device.

Thanks for the help and the great webinar yesterday. Using the v4l2loopback capability and the THETA V loopback example, here are two example GStreamer pipelines to grab the video:

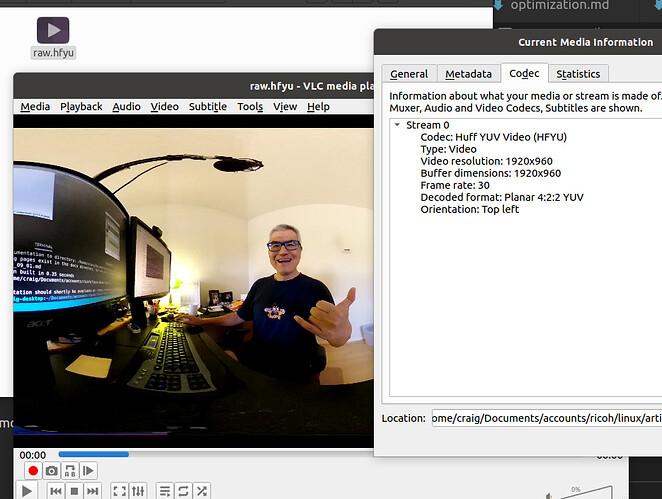

As a lossless Huffman-encoded (Huffyuv) raw file:

```
gst-launch-1.0 v4l2src device=/dev/video99 ! video/x-raw,framerate=30/1 \
  ! videoconvert \
  ! videoscale \
  ! avenc_huffyuv \
  ! avimux ! filesink location=raw.hfyu
```

And with default H.264 encoding on a Jetson:

```
gst-launch-1.0 v4l2src device=/dev/video99 ! video/x-raw,framerate=30/1 \
  ! nvvidconv \
  ! omxh264enc \
  ! h264parse ! matroskamux ! filesink location=vid99.mkv
```

Pro tip: when you install v4l2loopback, use the video_nr option to create the video device at a high number (for example, `sudo modprobe v4l2loopback video_nr=99` creates /dev/video99) so that it does not get displaced when other cameras are plugged in.

What many people may not realize is that UDP is lossy, while TCP retransmits lost packets, which causes additional latency. I haven’t looked at the characteristics of the THETA V stream, but if it is not constant bit rate, there will be network nano-bursts (bursts at timescales shorter than 1 ms). These bursts can overflow buffer space and cause packet loss. For TCP connections, retransmission is a normal part of flow control, but it increases packet jitter and latency.
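The burst argument can be illustrated with a toy queue model: two senders with the same average rate, one smooth and one bursty, feed a buffer that drains at a fixed rate per tick. This is an illustrative sketch with made-up numbers, not a model of the THETA V stream or of any particular switch or NIC; `simulate_queue` is a hypothetical name.

```c
/* Toy model of one queue: each tick, arrivals[t] packets arrive, at most
 * `capacity` can wait in the buffer (extra packets are tail-dropped), and
 * up to `drain` packets leave on the link. Returns the number dropped. */
int simulate_queue(const int *arrivals, int ticks, int capacity, int drain)
{
    int queued = 0;
    int dropped = 0;

    for (int t = 0; t < ticks; t++) {
        queued += arrivals[t];
        if (queued > capacity) {          /* buffer overflow */
            dropped += queued - capacity;
            queued = capacity;
        }
        queued = queued > drain ? queued - drain : 0;  /* link drains */
    }
    return dropped;
}
```

With a buffer of 8 packets draining 4 per tick, a smooth pattern of 4 packets every tick never drops anything, while the pattern {16, 0, 0, 0, 16, 0, 0, 0} sends the same 32 packets over 8 ticks and loses 16 of them.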

Fantastic!

I added your great contribution to the documentation.

Tested the first pipeline on x86 and will test on Jetson after I reinstall the OS. I messed up my v4l2 system on the Jetson a little while ago and need to reinstall JetPack.

If anyone else wants to try this, VLC can play the Huffyuv format.

https://wiki.videolan.org/Huffyuv/

Thanks for posting the pipeline. @Mehdi_Zayene was asking about how to save the stream to file from a Jetson on a YouTube video comment thread.

For the H.264 file, this is the pipeline I’m using on my x86 system:

```
$ gst-launch-1.0 v4l2src device=/dev/video2 ! video/x-raw,framerate=30/1 ! autovideoconvert ! nvh264enc ! h264parse ! matroskamux ! filesink location=vid_test.mkv
```

This time, I tested playback with gst-launch-1.0 playbin:

```
gst-launch-1.0 playbin uri=file:///path-to-file/vid_test.mkv
```