I do not have a solution yet, but I can reproduce the same problem you are seeing. I will keep trying.

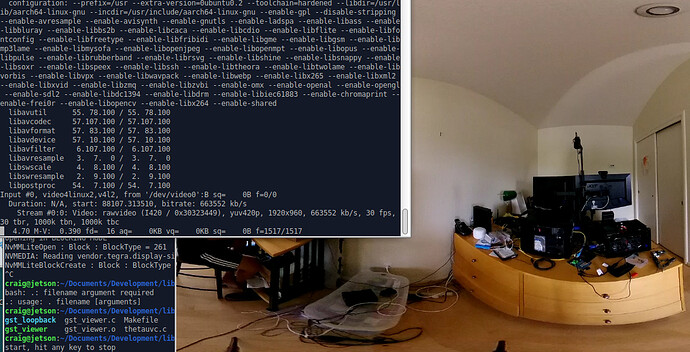

The only thing I have working so far is ffplay, which can display the THETA stream from /dev/video0.
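If you want to rule out a missing device node before trying this, here is a minimal sketch. The `check_device` helper is a hypothetical name of mine, not part of ffplay or v4l2, and it assumes the THETA shows up as /dev/video0 the way it does on my board. It only checks that the node exists as a character device and then runs the same ffplay command.

```shell
#!/bin/sh
# Hypothetical helper (not part of ffplay or v4l2): report whether a
# V4L2 device node is present before trying to open it.
check_device() {
    # V4L2 nodes are character devices, so test with -c
    if [ -c "$1" ]; then
        echo "found $1"
        return 0
    else
        echo "missing $1"
        return 1
    fi
}

# Assumption: the THETA is exposed as /dev/video0, as in this post.
DEVICE="${1:-/dev/video0}"

if check_device "$DEVICE"; then
    ffplay -f v4l2 "$DEVICE"
fi
```

If the camera is on a different node, pass the path as the first argument to the script.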

$ ffplay -f v4l2 /dev/video0

ffplay version 3.4.8-0ubuntu0.2 Copyright (c) 2003-2020 the FFmpeg developers

built with gcc 7 (Ubuntu/Linaro 7.5.0-3ubuntu1~18.04)

configuration: --prefix=/usr --extra-version=0ubuntu0.2 --toolchain=hardened --libdir=/usr/lib/aarch64-linux-gnu --incdir=/usr/include/aarch64-linux-gnu --enable-gpl --disable-stripping --enable-avresample --enable-avisynth --enable-gnutls --enable-ladspa --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libgme --enable-libgsm --enable-libmp3lame --enable-libmysofa --enable-libopenjpeg --enable-libopenmpt --enable-libopus --enable-libpulse --enable-librubberband --enable-librsvg --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libspeex --enable-libssh --enable-libtheora --enable-libtwolame --enable-libvorbis --enable-libvpx --enable-libwavpack --enable-libwebp --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzmq --enable-libzvbi --enable-omx --enable-openal --enable-opengl --enable-sdl2 --enable-libdc1394 --enable-libdrm --enable-libiec61883 --enable-chromaprint --enable-frei0r --enable-libopencv --enable-libx264 --enable-shared

libavutil 55. 78.100 / 55. 78.100

libavcodec 57.107.100 / 57.107.100

libavformat 57. 83.100 / 57. 83.100