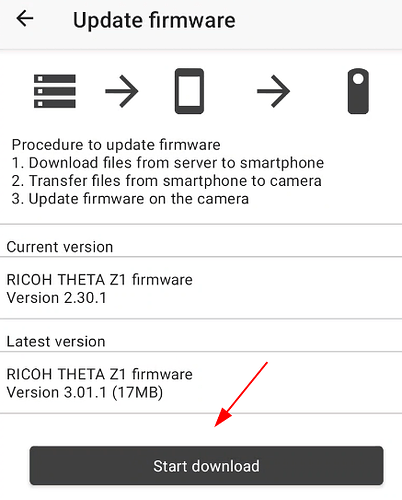

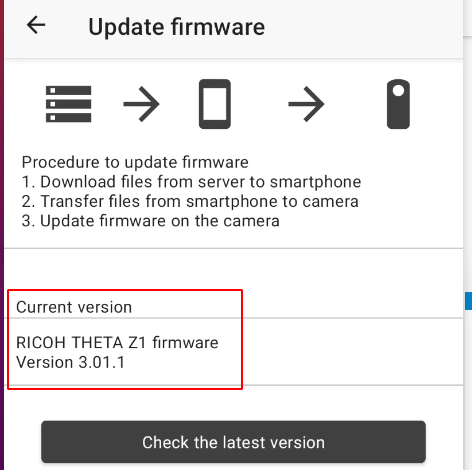

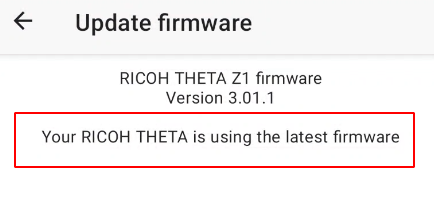

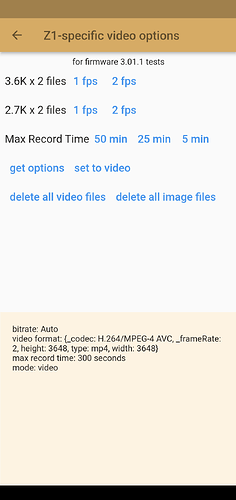

A major firmware update for the RICOH THETA Z1 was released on June 27, 2023. The new firmware, version 3.01.1, changes video recording and adds new video formats.

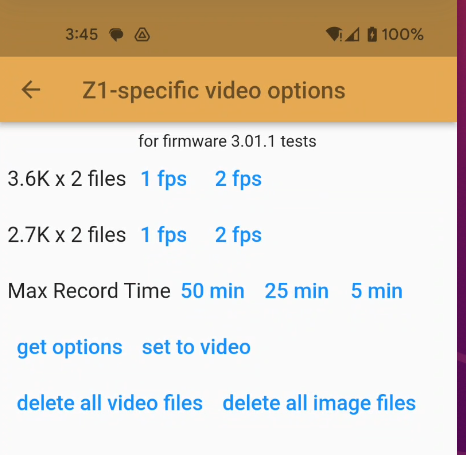

The four new formats are:

{"type": "mp4", "width": 3648, "height": 3648, "_codec": "H.264/MPEG-4 AVC", "_frameRate": 2} *1

{"type": "mp4", "width": 3648, "height": 3648, "_codec": "H.264/MPEG-4 AVC", "_frameRate": 1} *1

{"type": "mp4", "width": 2688, "height": 2688, "_codec": "H.264/MPEG-4 AVC", "_frameRate": 2} *1

{"type": "mp4", "width": 2688, "height": 2688, "_codec": "H.264/MPEG-4 AVC", "_frameRate": 1} *1
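As a rough illustration, one of these formats could be selected through the RICOH THETA Web API by sending a `camera.setOptions` command with the format above as the `fileFormat` value. This is a minimal sketch, not an official client: it assumes the camera is in access point mode at `192.168.1.1` with no authentication, and that `captureMode` must be switched to `"video"` before the video format is accepted. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: camera in access point (AP) mode, reachable at its default
# address, with no digest authentication.
CAMERA_URL = "http://192.168.1.1/osc/commands/execute"

# (width, height, frame rate) for the four new dual-fisheye formats.
NEW_FORMATS = [(3648, 3648, 2), (3648, 3648, 1), (2688, 2688, 2), (2688, 2688, 1)]


def file_format(width: int, height: int, frame_rate: int) -> dict:
    """Build a fileFormat option value matching the formats listed above."""
    return {
        "type": "mp4",
        "width": width,
        "height": height,
        "_codec": "H.264/MPEG-4 AVC",
        "_frameRate": frame_rate,
    }


def set_options_payload(options: dict) -> dict:
    """Wrap an options dict in a camera.setOptions command body."""
    return {"name": "camera.setOptions", "parameters": {"options": options}}


def send_command(payload: dict, url: str = CAMERA_URL, timeout: float = 10.0) -> dict:
    """POST a command to the camera and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json;charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage with a connected camera (not executed here):
# send_command(set_options_payload({"captureMode": "video"}))
# send_command(set_options_payload({"fileFormat": file_format(*NEW_FORMATS[0])}))
```

Building the payload separately from sending it keeps the request easy to inspect or log before it reaches the camera.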

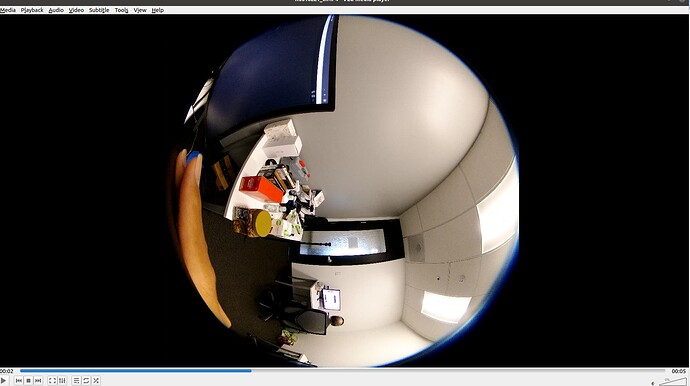

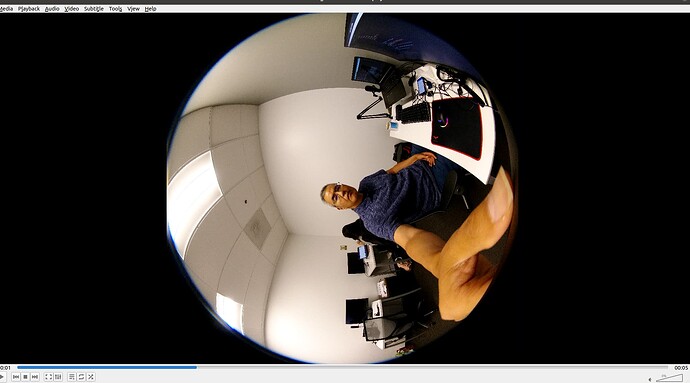

The new mode outputs two fisheye videos, one for each lens. The MP4 file whose name ends in _0 is from the front lens, and the file ending in _1 is from the back lens. This mode does not record an audio track in the MP4 files.
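When processing a batch of downloaded files, it can help to group each front/back pair by the shared part of the name. This sketch assumes file names of the form `<base>_0.MP4` and `<base>_1.MP4`; the example names used in the usage line are hypothetical.

```python
import re
from collections import defaultdict

# Assumed naming: a shared base name, then _0 (front lens) or _1 (back lens).
LENS_FILE_RE = re.compile(r"^(?P<base>.+)_(?P<lens>[01])\.mp4$", re.IGNORECASE)


def pair_lens_files(file_names):
    """Group front (_0) and back (_1) MP4 files that share a base name."""
    pairs = defaultdict(dict)
    for name in file_names:
        match = LENS_FILE_RE.match(name)
        if match is None:
            continue  # not one of the per-lens fisheye files
        lens = "front" if match.group("lens") == "0" else "back"
        pairs[match.group("base")][lens] = name
    return dict(pairs)


# Usage with hypothetical names:
# pair_lens_files(["R0010015_0.MP4", "R0010015_1.MP4"])
```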

You can also set the recording length for the new formats to 3,000 seconds (50 minutes). These low-frame-rate formats are intended for frame extraction.
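To get a sense of how many frames a long recording yields for extraction, multiply the duration by the frame rate, and double it because each lens writes its own file. A 3,000-second recording at 2 fps gives 6,000 frames per lens. The sketch below assumes a constant frame rate; the function names are hypothetical.

```python
def frames_per_file(duration_s: int, frame_rate: int) -> int:
    """Frames in one lens's video at a constant frame rate."""
    return duration_s * frame_rate


def total_frames(duration_s: int, frame_rate: int, lenses: int = 2) -> int:
    """Frames across both per-lens files of a single recording."""
    return frames_per_file(duration_s, frame_rate) * lenses


def frame_timestamps(duration_s: int, frame_rate: int):
    """Yield the nominal time (seconds) of each frame, useful for naming extracted stills."""
    for index in range(frames_per_file(duration_s, frame_rate)):
        yield index / frame_rate
```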

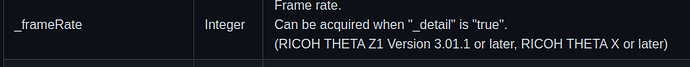

The camera.listFiles command has also been updated to return the frame rate of each video file.
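A minimal sketch of requesting the video list and reading the frame rate from each entry follows. The `fileType`, `entryCount`, and `maxThumbSize` parameters are standard `camera.listFiles` parameters, but the name of the new frame-rate field in each entry is an assumption here (`_frameRate`, mirroring the fileFormat option); check the actual response from your camera. Entries without the field map to `None`.

```python
def list_videos_payload(entry_count: int = 20) -> dict:
    """camera.listFiles body requesting video entries without thumbnails."""
    return {
        "name": "camera.listFiles",
        "parameters": {"fileType": "video", "entryCount": entry_count, "maxThumbSize": 0},
    }


def frame_rates(response: dict) -> dict:
    """Map file name to frame rate; the '_frameRate' key name is an assumption."""
    entries = response.get("results", {}).get("entries", [])
    return {entry["name"]: entry.get("_frameRate") for entry in entries}
```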

The USB API and the Bluetooth API have also been updated.

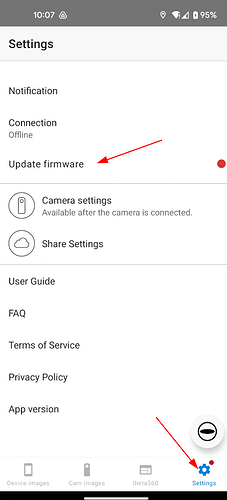

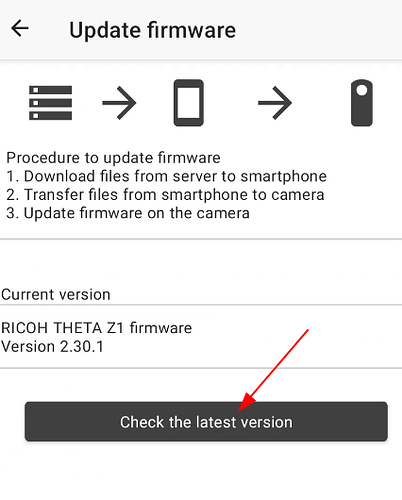

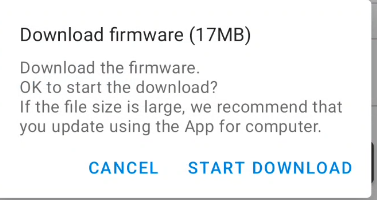

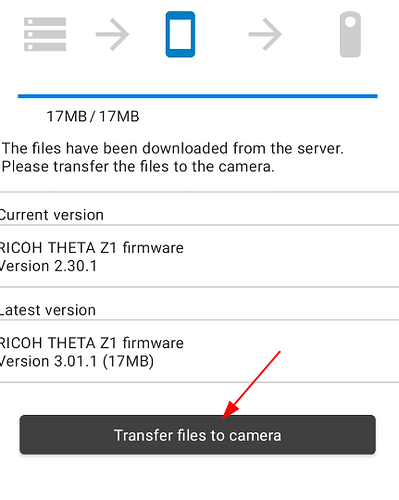

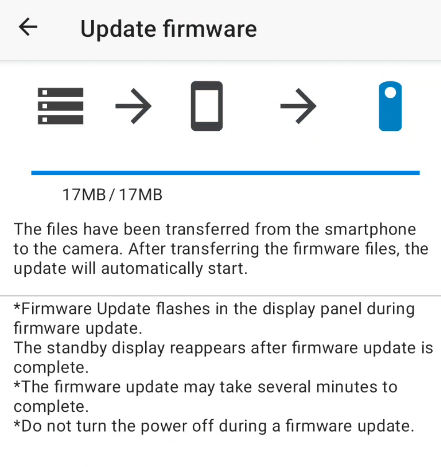

Upgrade Process

You can update the firmware from either a mobile phone or a Windows or Mac computer. This example uses a mobile phone.

During the update, the camera flashes, reboots, and then shows the firmware upgrade.

New Feature Testing

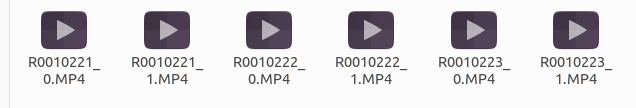

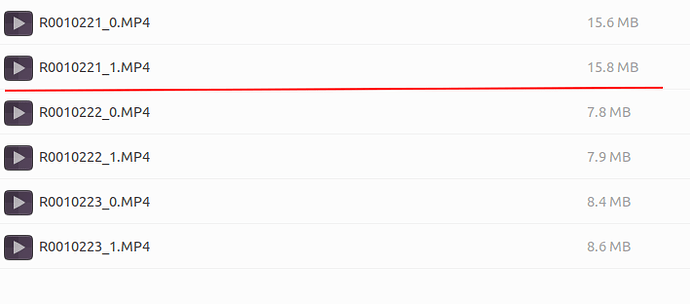

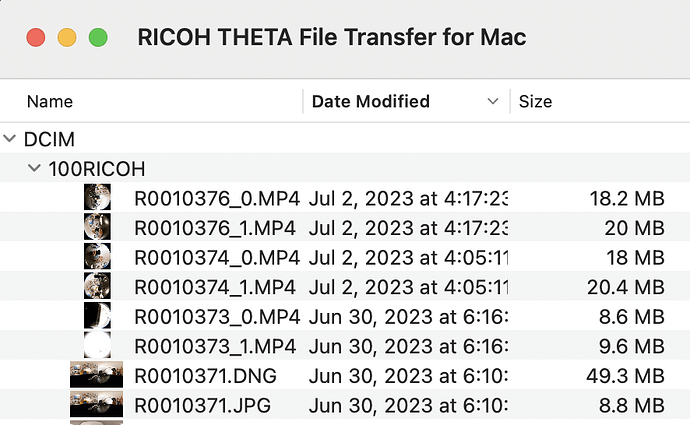

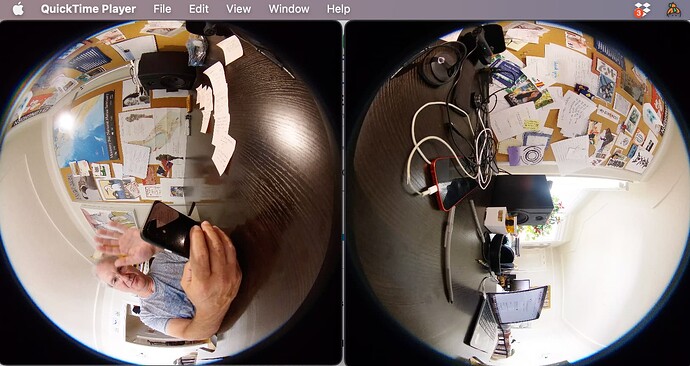

The new video formats produce two video files for every recording.

Each video contains a single fisheye view.

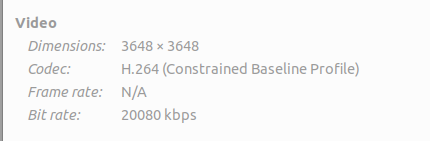

The default bitrate is 20 Mbps for each video file.

The files are quite small for these 5-second test clips.
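For a rough size estimate, multiply the bitrate by the duration and divide by 8 to convert bits to bytes. This assumes the default "20MB" means 20 megabits per second and a constant bitrate; container overhead is ignored, so treat the result as a nominal figure rather than a measurement.

```python
def nominal_size_mb(bitrate_mbps: float, duration_s: float) -> float:
    """Nominal file size in megabytes (10**6 bytes) at a constant bitrate."""
    return bitrate_mbps * duration_s / 8


# One 5-second clip per lens at 20 Mbps, and the pair of files together.
per_file = nominal_size_mb(20, 5)
per_recording = 2 * per_file
```

At these settings the estimate is 12.5 MB per file, or 25 MB for the front and back files together.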

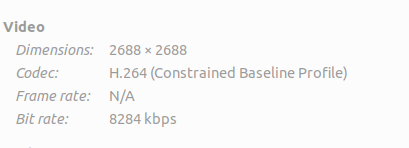

Above the red line, the files are 3.6K (3648 × 3648). Below the red line, the files are 2.7K (2688 × 2688).

Sample Videos

https://drive.google.com/file/d/10Lvt3wRM9-wMunqK755PkRSKcvlJ8BI7/view?usp=sharing

https://drive.google.com/file/d/1LNmrf40puLKuNqoqM7tgPqjF6OkiorU8/view?usp=sharing

https://drive.google.com/file/d/1SuAyUScthYw7nxZhBn1KklUPzYKROunn/view?usp=sharing

https://drive.google.com/file/d/1qGS6WgBNBAgfsod83zLjCwmomef4MNTg/view?usp=sharing

https://drive.google.com/file/d/1wqtrl-IFfhEerS_6PT1xV4_2DZ0LRCu_/view?usp=sharing

https://drive.google.com/file/d/1x5tybPCXZGiObPYlZOdEn_AEHPFJJiPU/view?usp=sharing

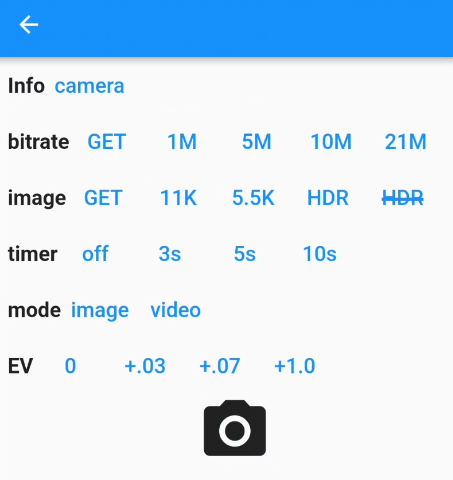

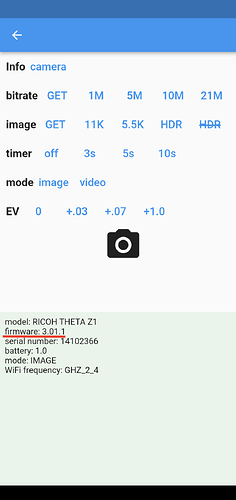

Android Demo App

We’re working on this app for testing. If you want to download the test version, send @jcasman a note.

The app can also set the image bitrate.