Independent developer Ichi Hirota built a THETA V plug-in to capture dual-fisheye images. The video below shows the fresh results of his work along with tips to do this yourself.

This is really cool info on using THETA V plug-in technology to do something you normally can't do: pulling images off the camera as dual-fisheye, which saves time between pictures and, who knows, maybe lets you do your own stitching. The video includes info that isn't in the official docs yet, figured out by @codetricity of theta360.guide and THETA V developer Ichi Hirota in Cupertino. Really useful!

Someone should modify the plug-in and measure the time between shots. If we can get it below 4 seconds, that would be cool. As a test, set up a loop inside the plug-in that tries to take a picture every 3 seconds, then keep reducing the time between shots.
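A minimal sketch of that test in plain Java, assuming a hypothetical `Shutter` hook. In a real plug-in, `takePicture()` would have to fire `mCamera.takePicture(...)` and block until `onPictureTaken` has saved the file (for example with a `java.util.concurrent.CountDownLatch`), because the Android call itself returns before the picture is delivered. The names `ShotIntervalTest`, `Shutter`, and `shortestWorkingInterval` are illustrative only, not part of any THETA API.

```java
import java.util.concurrent.TimeUnit;

// Sketch: find the shortest shot interval a (hypothetical) shutter hook can sustain.
class ShotIntervalTest {

    /** Hypothetical hook: fire the camera and return only after the picture is saved. */
    interface Shutter {
        void takePicture();
    }

    /**
     * Starts at startMs and shortens the interval by stepMs each round (never below minMs).
     * Each round takes shotsPerRound pictures; a round passes only if every shot finishes
     * within the interval. Returns the shortest passing interval, or -1 if none passed.
     */
    static long shortestWorkingInterval(Shutter shutter, long startMs, long stepMs,
                                        long minMs, int shotsPerRound) {
        long best = -1;
        for (long interval = startMs; interval >= minMs; interval -= stepMs) {
            for (int i = 0; i < shotsPerRound; i++) {
                long t0 = System.nanoTime();
                shutter.takePicture();
                long elapsedMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - t0);
                if (elapsedMs > interval) {
                    return best; // this interval is too short; report the previous one
                }
                try {
                    Thread.sleep(interval - elapsedMs); // idle until the next shot is due
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return best;
                }
            }
            best = interval; // every shot in this round kept up
        }
        return best;
    }
}
```

For the experiment described above you might call `shortestWorkingInterval(shutter, 3000, 250, 250, 5)`, which starts at 3 seconds and shortens the interval by 250 ms until a shot no longer finishes in time. The step size and shots per round are arbitrary choices.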

Maybe Ichi has done more tests?

I'm asking Ichi; we'll see if he has time (see what I did there?) to test this. I'd love to see if we can decrease the time between pictures.

Ichi commented that the time between shots is less than 1 second. He’s using it for bracketing images.

Wowowowowow! This is very exciting and could be a really powerful improvement for industrial use, time-lapse, and lots more.

Here's a video from Ichi where you can clearly hear the shutter firing rapidly, at about one picture per second.

Jesse and I met up with Ichi Hirota yesterday for a demo of his fully functional plug-in for rapid bracket shooting and for post-processing the dual-fisheye images so they can be stitched. We also learned that interested parties can license the stitching library from Ichi.
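To make the bracketing concrete: the code snippet later in this thread calls `setExposureCompensation(3 * (bcnt - 2))` and decrements `bcnt` after each shot. The sketch below replays that loop in plain Java. It assumes `bcnt` starts at 3 (the snippet does not show its initial value) and assumes an exposure compensation step of 1/3 EV; on a real camera, read the step from `Camera.Parameters.getExposureCompensationStep()`. Under those assumptions the three shots land at +1, 0, and -1 EV. `BracketSchedule` is a hypothetical helper, not code from Ichi's plug-in.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: the exposure-compensation schedule produced by the bracket loop in the snippet.
class BracketSchedule {

    /**
     * Mirrors the snippet: while bcnt > 0, shoot at index 3 * (bcnt - 2), then decrement bcnt.
     * startCount is an assumption; the snippet does not show where bcnt is initialized.
     */
    static List<Integer> compensationIndices(int startCount) {
        List<Integer> indices = new ArrayList<>();
        for (int bcnt = startCount; bcnt > 0; bcnt--) {
            indices.add(3 * (bcnt - 2));
        }
        return indices;
    }

    /** Converts a compensation index to EV, given the step the camera reports. */
    static double toEv(int index, double stepEv) {
        return index * stepEv;
    }

    public static void main(String[] args) {
        double step = 1.0 / 3.0; // assumed step; query getExposureCompensationStep() on the camera
        for (int idx : compensationIndices(3)) {
            System.out.printf("index %d -> %+.1f EV%n", idx, toEv(idx, step));
        }
    }
}
```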

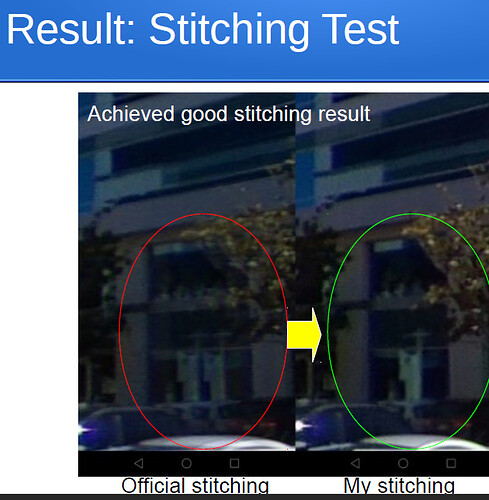

Stitching Images in Post-Production

The stitching quality in post-production is comparable to the quality of the Ricoh images. There are three options for stitching the dual-fisheye images for post-production:

- mobile app (shown below)

- inside the THETA V (tested, but not available to the public)

- custom options such as desktop or server stitching (need to discuss with Ichi)
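For the custom desktop or server route, a typical first step is separating the two lenses. A minimal sketch using the standard `java.awt.image.BufferedImage` API, assuming the two fisheye circles sit side by side in a 2:1 frame (for example 3840x1920, which gives two 1920x1920 halves). `DualFisheyeSplit` is a hypothetical name, and the actual fisheye-to-equirectangular stitching is not shown.

```java
import java.awt.image.BufferedImage;

// Sketch: split a side-by-side dual-fisheye frame into one square image per lens.
class DualFisheyeSplit {

    /** Returns {left, right}; assumes each lens occupies one half of a 2:1 frame. */
    static BufferedImage[] splitLenses(BufferedImage frame) {
        if (frame.getWidth() != 2 * frame.getHeight()) {
            throw new IllegalArgumentException("expected a 2:1 dual-fisheye frame");
        }
        int half = frame.getWidth() / 2;
        // getSubimage returns views that share pixel data with the original frame
        BufferedImage left = frame.getSubimage(0, 0, half, frame.getHeight());
        BufferedImage right = frame.getSubimage(half, 0, half, frame.getHeight());
        return new BufferedImage[] {left, right};
    }
}
```

A JPEG saved by the plug-in could be loaded with `javax.imageio.ImageIO.read(...)` before splitting. Because the halves share the original raster, copy them first if you need to modify one lens image independently.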

Estimating Lens Distortion Information

With Ichi's application, each camera needs to be configured once, and the lens distortion estimates need to be saved to a file. The configuration takes about 30 seconds per camera: a person clicks on three sets of points. In the future, this configuration could be saved in a plug-in.
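Because the estimate only has to be made once per camera, it can live in a small file tied to that camera. A sketch using `java.util.Properties`, assuming the estimator's output can be reduced to named numeric parameters. The key names (`camera.serial`, `lens.*`) and the file layout are hypothetical; the format Ichi's application actually uses is not described here.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.Writer;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Sketch: persist a per-camera lens calibration so it only has to be measured once.
class LensCalibrationStore {

    private static final String PREFIX = "lens."; // hypothetical key prefix

    /** Writes the camera serial plus every parameter as "lens.<name>=<value>". */
    static void save(String serial, Map<String, Double> params, Writer out) throws IOException {
        Properties props = new Properties();
        props.setProperty("camera.serial", serial);
        for (Map.Entry<String, Double> e : params.entrySet()) {
            props.setProperty(PREFIX + e.getKey(), Double.toString(e.getValue()));
        }
        props.store(out, "THETA lens calibration (hypothetical format)");
    }

    /** Reads the parameters back; refuses a file that was made for a different camera. */
    static Map<String, Double> load(String expectedSerial, Reader in) throws IOException {
        Properties props = new Properties();
        props.load(in);
        if (!expectedSerial.equals(props.getProperty("camera.serial"))) {
            throw new IOException("calibration was made for a different camera");
        }
        Map<String, Double> params = new TreeMap<>();
        for (String key : props.stringPropertyNames()) {
            if (key.startsWith(PREFIX)) {
                params.put(key.substring(PREFIX.length()), Double.parseDouble(props.getProperty(key)));
            }
        }
        return params;
    }
}
```

Checking the serial on load guards against applying one camera's estimates to another, since each camera's lenses are calibrated individually.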

Stitching Results

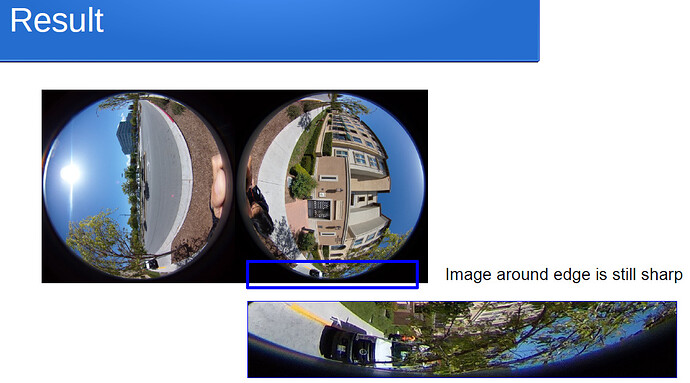

Dual-fisheye Edge

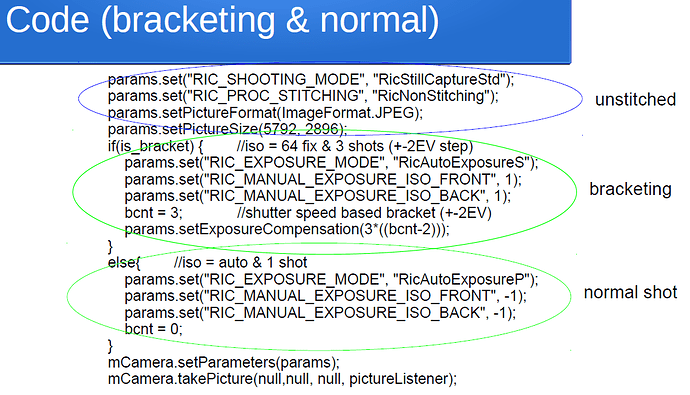

Code Snippet

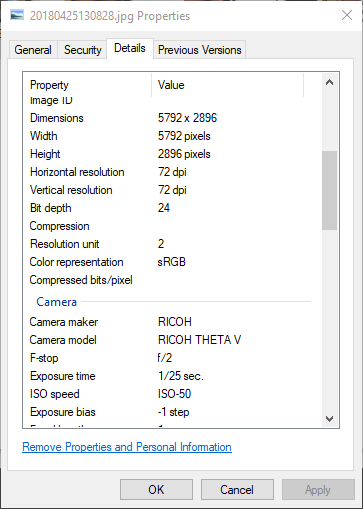

Sample Image


Fun Meeting with Developers

Additional Information

Hi, I'm trying this, but setting that image size makes mCamera.takePicture(null, null, mPicture) throw an IllegalStateException. The defish configuration seems to work, though. The camera2 API is still unavailable, right?


The camera2 API is unavailable.

I know someone who is using Camera.PictureCallback.

Below is a working code snippet from Ichi Hirota. I can't post the full project because the code is not mine; I would need to contact the author.

private void nextShutter(){

//restart preview

Camera.Parameters params = mCamera.getParameters();

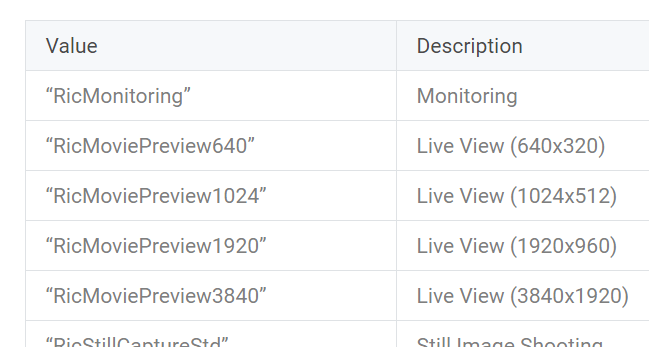

params.set("RIC_SHOOTING_MODE", "RicMonitoring");

mCamera.setParameters(params);

mCamera.startPreview();

//shutter speed based bracket

if(bcnt > 0) {

params = mCamera.getParameters();

params.set("RIC_SHOOTING_MODE", "RicStillCaptureStd");

params.setExposureCompensation(3 * ((bcnt - 2)));

bcnt = bcnt - 1;

mCamera.setParameters(params);

Intent intent = new Intent("com.theta360.plugin.ACTION_AUDIO_SHUTTER");

sendBroadcast(intent);

mCamera.takePicture(null, null, null, pictureListener);

}

else{

Intent intent = new Intent("com.theta360.plugin.ACTION_AUDIO_SH_CLOSE");

sendBroadcast(intent);

}

}

private Camera.PictureCallback pictureListener = new Camera.PictureCallback() {

@Override

public void onPictureTaken(byte[] data, Camera camera) {

//save image to storage

Log.d("onpicturetaken", "called");

if (data != null) {

FileOutputStream fos = null;

try {

String tname = getNowDate();

String opath = Environment.getExternalStorageDirectory().getPath()+ "/DCIM/100RICOH/"+tname+".JPG";

Log.d("save", opath);

fos = new FileOutputStream(opath);

fos.write(data);

fos.close();

registImage(tname, opath, mcontext, "image/jpeg");

} catch (Exception e) {

e.printStackTrace();

}

nextShutter();

}

}

};Wow, nice, it works great now. The only thing is that I still can’t see the preview. I also tried making my own GL renderer but even passing my SurfaceTexture with setPreviewTexture can’t make it work, onFrameAvailable is never called (although it works great in my S7). Maybe some parameter in the camera that I’m not setting correctly? I don’t know if there is a list of available parameters.

Note that I have not tried the parameters below.

Please post your code snippet with some explanation of the device (probably a Samsung S7) you're displaying the Live View on. I have not tried setting up a preview with the Android Camera class.

That's exactly what I needed, thank you! I'm not displaying the preview on the device; I just wanted to try recording photos/video with effects applied by OpenGL shaders.

3840x1920 is the biggest resolution I can get, right?

Yes, that is the maximum resolution right now. If you are successful, please post a code snippet. This is all new, so we're all trying to figure things out together.

Would this be possible to do with WiFi control? I am thinking about upgrading to the THETA V for this functionality alone. Being able to shoot photos every second would really improve the time-lapse project I am working on!

It doesn’t work with WiFi control right now. If this is your primary reason for buying it, you should wait until people do more tests. I personally have not been able to communicate with the plug-in app from WiFi, but it may be possible in the future.

The full code of Ichi Hirota's plug-in is now included in the Plugin Development Community Guide.

It's best to use the main document link above, as the links to individual sections may change. Right now, the direct link to the dual-fisheye example is:

Thanks @codetricity, I'll test this plug-in in the near future, but I'd also like to see other people's tests. Can live preview work in this mode?

Regards,

Toyo

Live streaming should work in plug-in mode, and we should be able to control stitching in live streaming plug-in mode. However, I have not tested this yet.

This is very cool, and as @xwindor says, it would be a great help for time-lapse.

I wonder if it would be possible to pull the images off the camera via WiFi while this app shoots photos.