I’ve been using JetPack 4.6, which corresponds to L4T R32, Revision 6.1.

cat /etc/nv_tegra_release

# R32 (release), REVISION: 6.1
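If you want a script or program to check the L4T version instead of reading it by eye, here is a minimal C sketch that parses the first line of /etc/nv_tegra_release. It assumes the line begins in the format shown above (`# R32 (release), REVISION: 6.1`). The function names are hypothetical and are not part of any NVIDIA tool.

```c
#include <assert.h>
#include <stdio.h>

/* Parse a line such as "# R32 (release), REVISION: 6.1" into its numbers.
 * Extra text after the revision (GCID, BOARD, ...) is ignored.
 * Returns 0 on success, -1 if the line does not match the assumed format. */
int parse_tegra_release(const char *line, int *release, int *rev_major, int *rev_minor)
{
    assert(line != NULL && release != NULL && rev_major != NULL && rev_minor != NULL);
    /* In a scanf format, a space matches any run of whitespace. */
    if (sscanf(line, "# R%d (release), REVISION: %d.%d",
               release, rev_major, rev_minor) != 3)
        return -1;
    return 0;
}

/* Read the first line of `path` (e.g. /etc/nv_tegra_release) and parse it.
 * Returns 0 on success, -1 on I/O or parse failure. */
int read_tegra_release(const char *path, int *release, int *rev_major, int *rev_minor)
{
    char line[256];
    FILE *fp = fopen(path, "r");
    if (fp == NULL)
        return -1;
    char *ok = fgets(line, sizeof line, fp);
    fclose(fp);
    if (ok == NULL)
        return -1;
    return parse_tegra_release(line, release, rev_major, rev_minor);
}
```

On the JetPack 4.6 install above, `read_tegra_release("/etc/nv_tegra_release", &r, &a, &b)` should give 32, 6 and 1.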

I recently met with a community member who is running L4T R32, Revision 7.1, and having problems with the RICOH THETA X on firmware 2.10.1.

To replicate the test with the newer version of JetPack, I’m going to first take a baseline test with JetPack 4.6. I’ll then update this post after installing JetPack 4.6.4 (the newest release for the Nano).

In this example, I’ve renamed ptpcam to theta in order to test the modified and unmodified versions of ptpcam on the same Jetson.

theta --info

THETA Device Info

==================

Model: RICOH THETA X

manufacturer: Ricoh Company, Ltd.

serial number: '14010001'

device version: 2.10.1

extension ID: 0x00000006

image formats supported: 0x00000004

extension version: 0x006e

ls

gst_loopback gst_viewer.c Makefile thetauvc.h

gst_viewer gst_viewer.o thetauvc.c thetauvc.o

craig@jetpack-4:~/Development/libuvc-theta-sample/gst$ ./gst_loopback

start, hit any key to stop

Opening in BLOCKING MODE

NvMMLiteOpen : Block : BlockType = 261

NVMEDIA: Reading vendor.tegra.display-size : status: 6

NvMMLiteBlockCreate : Block : BlockType = 261

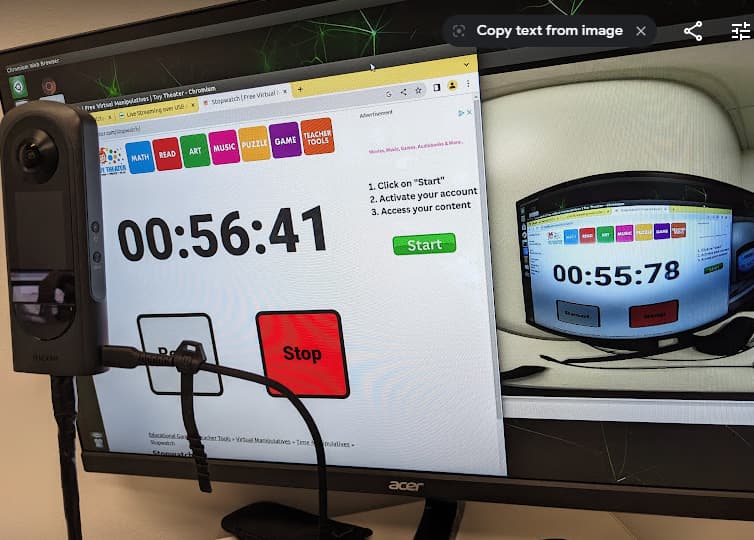

latency

At 4K, latency is 630 ms.
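To put 630 ms in perspective, here is a quick conversion to frames. It assumes the 4K live stream runs at 30 fps, which is an assumption on my part and not something measured in this test:

$$
0.630\ \text{s} \times 30\ \text{frames/s} = 18.9 \approx 19\ \text{frames of delay}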

I believe the top/bottom correction of the live stream can be disabled with this API.

Disabling it would likely reduce latency.

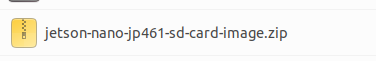

install JetPack

I used Etcher to flash this file.

There appears to be another install method using NVIDIA SDK Manager.

SDK Manager | NVIDIA Developer

I decided to use Etcher.

after upgrade

The R32 revision is now higher; it was previously 6.1.

cat /etc/nv_tegra_release

# R32 (release), REVISION: 7.1

/usr/local/lib appears to be on the dynamic linker's search path, so libraries installed there should load:

/etc/ld.so.conf.d$ cat libc.conf

# libc default configuration

/usr/local/lib
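The same check can be done in code. Here is a minimal C sketch that reports whether a directory is listed in ld.so.conf-style text. It assumes one directory per line, as in the libc.conf above, and it ignores `include` directives, which real ld.so.conf files can contain. The function name is hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Return 1 if `dir` appears as a non-comment line in ld.so.conf-style text,
 * 0 otherwise. Leading/trailing whitespace on each line is ignored. */
int conf_lists_dir(const char *text, const char *dir)
{
    assert(text != NULL && dir != NULL);
    size_t dir_len = strlen(dir);
    const char *line = text;

    while (*line != '\0') {
        const char *end = strchr(line, '\n');
        if (end == NULL)
            end = line + strlen(line);

        /* Trim whitespace at both ends of the current line. */
        const char *start = line;
        while (start < end && isspace((unsigned char)*start))
            start++;
        const char *stop = end;
        while (stop > start && isspace((unsigned char)stop[-1]))
            stop--;

        /* Skip blank lines and '#' comments; otherwise compare exactly. */
        if (start < stop && *start != '#' &&
            (size_t)(stop - start) == dir_len &&
            strncmp(start, dir, dir_len) == 0)
            return 1;

        line = (*end == '\n') ? end + 1 : end;
    }
    return 0;
}
```

Fed the contents of libc.conf above, `conf_lists_dir(text, "/usr/local/lib")` returns 1.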

From the home directory:

mkdir Development

cd Development/

git clone https://github.com/ricohapi/libuvc-theta.git

cd libuvc-theta/

mkdir build

cd build/

cmake ..

-- Could NOT find JPEG (missing: JPEG_LIBRARY JPEG_INCLUDE_DIR)

-- Checking for module 'libjpeg'

-- No package 'libjpeg' found

-- Looking for pthread_create in pthreads - not found

sudo apt install libjpeg-dev

sudo apt install doxygen

Rerun cmake:

cmake ..

-- libusb-1.0 found using pkgconfig

-- Found JPEG: /usr/lib/aarch64-linux-gnu/libjpeg.so

-- Found JPEG library using standard module

-- Building libuvc with JPEG support.

-- Configuring done

-- Generating done

-- Build files have been written to: /home/craig/Development/libuvc-theta/build

make

sudo make install

[ 45%] Built target uvc_static

[ 90%] Built target uvc

[100%] Built target example

Install the project...

-- Install configuration: "Release"

-- Installing: /usr/local/lib/libuvc.so.0.0.6

-- Installing: /usr/local/lib/libuvc.so.0

-- Installing: /usr/local/lib/libuvc.so

-- Installing: /usr/local/include/libuvc/libuvc.h

-- Installing: /usr/local/include/libuvc/libuvc_config.h

-- Installing: /usr/local/lib/libuvc.a

-- Up-to-date: /usr/local/include/libuvc/libuvc.h

-- Up-to-date: /usr/local/include/libuvc/libuvc_config.h

-- Installing: /usr/local/lib/cmake/libuvc/libuvcTargets.cmake

-- Installing: /usr/local/lib/cmake/libuvc/libuvcTargets-release.cmake

-- Installing: /usr/local/lib/cmake/libuvc/FindLibUSB.cmake

-- Installing: /usr/local/lib/cmake/libuvc/FindJpegPkg.cmake

-- Installing: /usr/local/lib/cmake/libuvc/libuvcConfigVersion.cmake

-- Installing: /usr/local/lib/pkgconfig/libuvc.pc

-- Installing: /usr/local/lib/cmake/libuvc/libuvcConfig.cmake

Refresh the shared library cache with ldconfig (updating the cache requires root):

sudo ldconfig -v

/usr/local/lib:

libuvc.so.0 -> libuvc.so.0.0.6

install libuvc-theta-sample

Clone the modified version:

git clone https://github.com/codetricity/libuvc-theta-sample.git

This is overkill, but I installed everything related to GStreamer:

sudo apt-get install libgstreamer1.0-0 gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav gstreamer1.0-doc gstreamer1.0-tools gstreamer1.0-x gstreamer1.0-alsa gstreamer1.0-gl gstreamer1.0-gtk3 gstreamer1.0-qt5 gstreamer1.0-pulseaudio libgstreamer-plugins-base1.0-dev

Modify gst_viewer.c for a single camera on the Nano:

if (strcmp(cmd_name, "gst_loopback") == 0)

pipe_proc = "decodebin ! autovideoconvert ! "

"video/x-raw,format=I420 ! identity drop-allocation=true ! "

"v4l2sink device=/dev/video0 qos=false sync=false";
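The pipeline is assembled from adjacent string literals that the C compiler concatenates, with the loopback device hardcoded. If you would rather not edit the source for each machine, here is a minimal sketch of a hypothetical helper (not part of libuvc-theta-sample) that builds the same pipeline with snprintf. It assumes the device path is supplied by the caller, for example from the command line. On a Nano with no other camera, the v4l2loopback device is presumably /dev/video0, as in the edit above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: build the gst_loopback pipeline string with the
 * v4l2loopback device passed in instead of hardcoded.
 * Returns 0 on success, -1 if `buf` is too small (output would be truncated). */
int build_loopback_pipeline(char *buf, size_t len, const char *device)
{
    assert(buf != NULL && device != NULL);
    int n = snprintf(buf, len,
                     "decodebin ! autovideoconvert ! "
                     "video/x-raw,format=I420 ! identity drop-allocation=true ! "
                     "v4l2sink device=%s qos=false sync=false",
                     device);
    /* snprintf returns the length it wanted to write; >= len means truncation. */
    if (n < 0 || (size_t)n >= len)
        return -1;
    return 0;
}
```

The resulting string could then be assigned to `pipe_proc` in place of the literal, keeping the rest of gst_viewer.c unchanged.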

make

cd gst/

make

error

./gst_viewer

works as expected

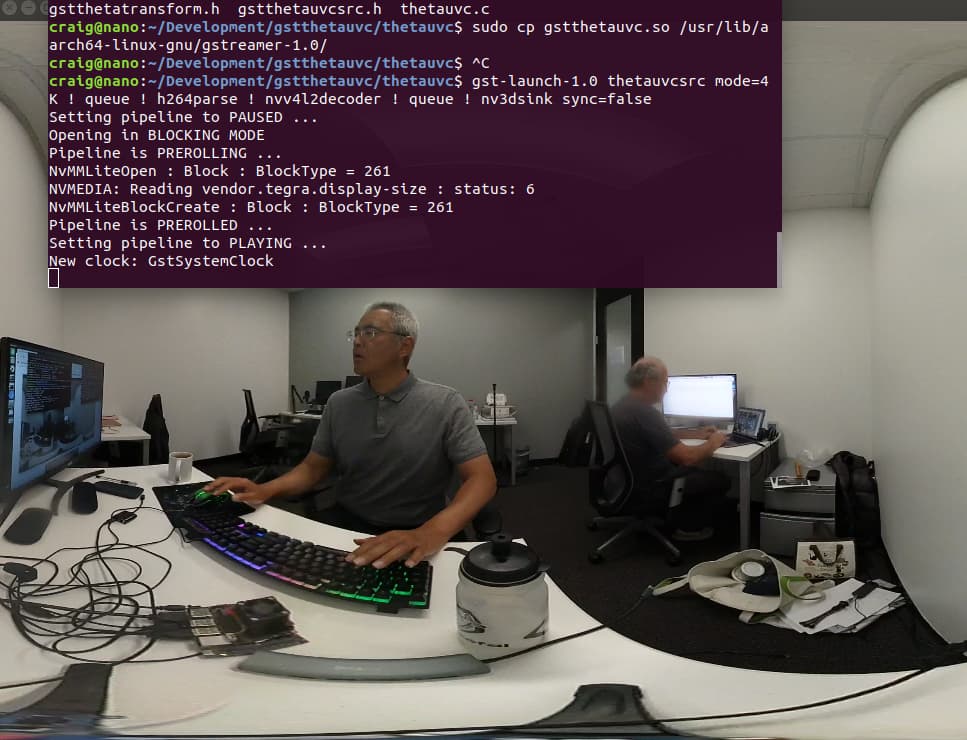

install gstthetauvc

git clone https://github.com/nickel110/gstthetauvc

Cloning into 'gstthetauvc'...

cd gstthetauvc/thetauvc

make

sudo cp gstthetauvc.so /usr/lib/aarch64-linux-gnu/gstreamer-1.0/

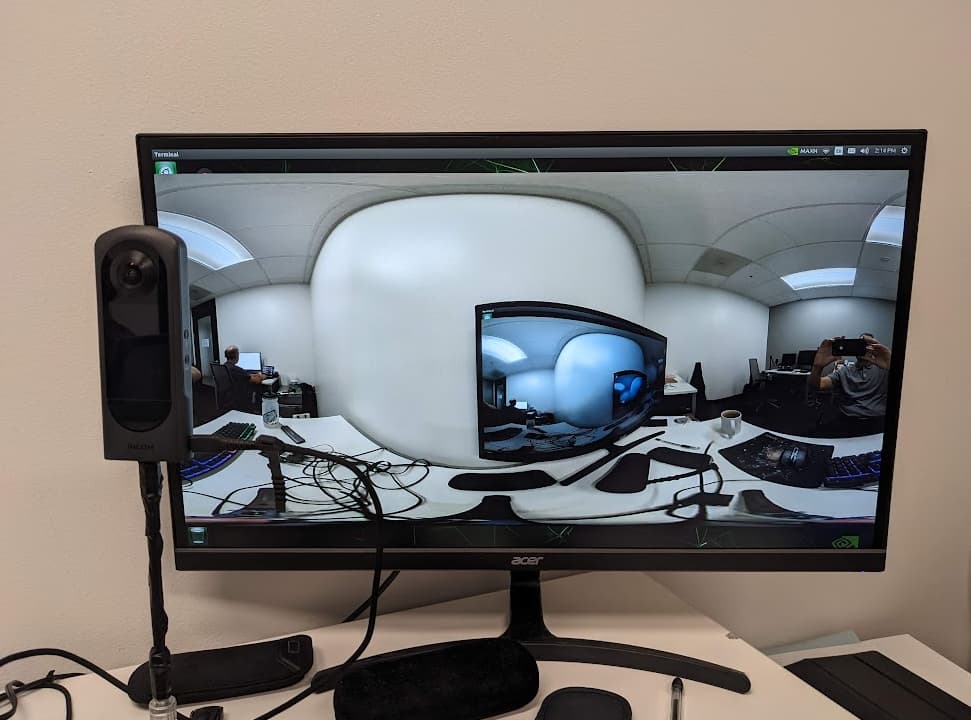

gstthetauvc test

gst-launch-1.0 thetauvcsrc mode=4K ! queue ! h264parse ! nvv4l2decoder ! queue ! nv3dsink sync=false

Setting pipeline to PAUSED ...

Opening in BLOCKING MODE

Pipeline is PREROLLING ...

NvMMLiteOpen : Block : BlockType = 261

NVMEDIA: Reading vendor.tegra.display-size : status: 6

NvMMLiteBlockCreate : Block : BlockType = 261

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

opencv test

pip install opencv-python